Module 9 - T201

T201: How to Write a Test Plan

HTML of the Student Supplement

(Note: This document has been converted from the Student Supplement to 508-compliant HTML. The formatting has been adjusted for 508 compliance, but all the original text content is included, plus additional text descriptions for the images, photos and/or diagrams have been provided below.)

(Extended Text Description: Large graphic cover page with dark blue background with the title in white letters “T201 How to Write a Test Plan.” At the middle left is the “Standards ITS Training” logo with a white box and letters in blue and green. The words “Student Supplement” and “RITA Intelligent Transportation Systems Joint Program Office” in white lettering are directly underneath the logo. Three light blue lines move diagonally across the middle of the blue background.)

Table of Contents

Introduction

Abbreviations

Terminology/glossary

References

Systems engineering diagram

TEST PLAN FROM SAMPLE PROJECT

Test Plan Identifier

Introduction

Items to be Tested

Features to be Tested

Features not to be Tested

Approach

Item Pass/Fail Criteria

Suspension Criteria and Resumption Requirements

Test Deliverables

Testing Tasks

Environmental Needs

Responsibilities

Staffing and Training Needs

Schedule

Risks and Contingencies

Approvals

PORTION OF A PROTOCOL REQUIREMENTS LIST (PRL) FROM A SAMPLE PROJECT

PORTION OF THE REQUIREMENTS TO TEST CASE TRACEABILITY MATRIX FROM A SAMPLE PROJECT

Introduction

This guide is intended to serve as a supplement to the online training module T201: How to Write a Test Plan.

Background

The FHWA has developed a series of training modules to guide users on the use of ITS standards.

The T201 module is intended for engineers, operational staff, maintenance staff, and test personnel. It is intended to follow T101: Introduction to ITS Standards and to precede T202: An Overview of Test Design Specifications, Test Cases, and Test Procedures.

Purpose of this supplement

The modules are designed to be brief courses; the supplement is designed to provide additional information to the participant about various topics covered by the course.

Abbreviations

PRL - Protocol Requirements List - A table that identifies each user need and requirement defined within a standard, along with an indication of whether each item is mandatory or optional for conformance. A PRL is included in all standards that include Systems Engineering Process (SEP) content.

Terminology/glossary

Testing phases

Design approval testing - The approval of a design based on review and inspection of drawings and specifications.

Prototype testing - Testing on a mock-up of a product. A prototype is an early version of a product built to demonstrate the concept; numerous features of the final product may not be present in a prototype.

Factory acceptance testing - Testing performed at the manufacturer's factory prior to shipping to the site. Testing at this point can be useful, especially when test failures are likely to result in additional work on the product that is most efficiently performed when at the factory.

Incoming device testing - Testing on a product when it is received by the agency, typically in a maintenance yard or other facility prior to being deployed in its final location.

Site acceptance testing - Testing on a product once it is installed in its final location.

Burn-in and observation testing - Testing performed once the product has been installed, integrated with the system, and made operational in a live environment.

Roles

Engineering staff - Engineering staff include those professionals who are responsible for designing and building the system in addition to ensuring that the project stays on schedule.

Operational staff - Operational staff include those who are responsible for the day-to-day operation of the system.

Maintenance staff - Maintenance staff include those who are responsible for keeping the system operational by performing preventive and reactive maintenance on the equipment.

Testing staff - Testing staff include those who are responsible for ensuring that the delivered system meets specifications.

Test documents (per IEEE 829)

Test case specification - A document specifying inputs, predicted results, and a set of execution conditions for a test item.

Test design specification - A document specifying the details of a test approach for a software feature or combination of software features and identifying the associated tests.

Test plan - A document describing the scope, approach, resources, and schedule of intended testing activities. It identifies test items, the features to be tested, the testing tasks, who will do each task, and any risks requiring additional contingency planning.

Test procedure specification - A document specifying a sequence of actions for the execution of a test.

Types of testing

Validation - A quality assurance process to ensure that a product fulfills its intended purpose.

Verification - A quality control process that determines if a product fulfills its stated requirements.

References

Standards Website: http://www.standards.its.dot.gov/ - includes a variety of information about ITS standards and the systems engineering process.

NTCIP Website: http://www.ntcip.org - includes links to NTCIP standards, guides, and other information relevant to the NTCIP standards.

IEEE Website: http://www.ieee.org - includes information about IEEE standards.

IEEE 829 - IEEE Standard for Software Test Documentation

NTCIP 9001 - NTCIP Guide

NTCIP 9012 - NTCIP Testing Guide for Users

FHWA Systems Engineering Website: https://www.fhwa.dot.gov/cadiv/segb/

FHWA Systems Engineering Guide: http://ops.fhwa.dot.gov/publications/seitsguide/seguide.pdf

Systems Engineering for ITS Workshop: https://www.arc-it.net/

Systems engineering diagram

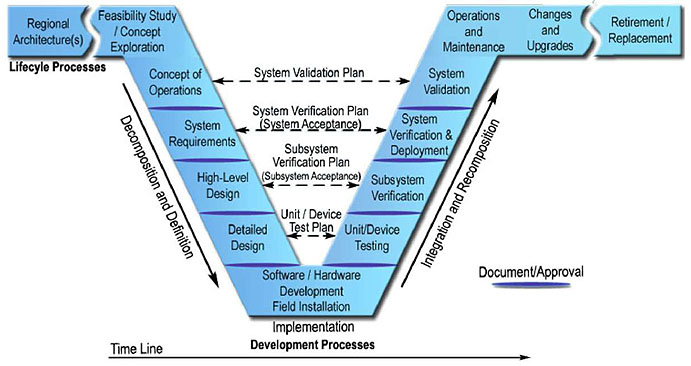

The "V" diagram used by the ITS industry, as shown in Figure 1, reflects a customization of the more general systems engineering process (SEP), but follows widely accepted guidelines.

Figure 1: Systems Engineering "V" Diagram for ITS

(Extended Text Description: Systems Engineering “V” Diagram for ITS. A graphic of the systems engineering process (SEP). The main graphic of the SEP is a V-shaped diagram in gradated blue with some additional horizontal extensions on the left and right side of the top of the V shape. Each section is separated with dark blue lines. There is a key at the lower right showing the blue separator lines, and designating them as “Document/Approval.” The left horizontal extension is labeled as “Lifecycle Processes” and include the sections “Regional Architecture” (separated by a curving white space) to the second section labeled “Feasibility Study / Concept Exploration” leading down into the diagonal portion of the V diagram with “Concept of Operations,” (blue line) “System Requirements,” (blue line) “High-level Design,” (blue line) “Detailed Design,” and “Software / Hardware Development Field Installation” at the bottom juncture of the V shape. Underneath the bottom point of the V shape are the words “Implementation” then “Development Processes” and a long thin arrow pointing to the right labeled “Time Line.” There is a long thin diagonal arrow pointing down along the left side of the V labeled “Decomposition and Definition.” From the bottom point of the V, the sections begin to ascend up the right side of the V with “Unit / Device Testing,” (blue line) “Subsystem Verification,” (blue line) “System Verification & Deployment,” (blue line) “System Validation,” and “Operations and Maintenance.” There is a long thin arrow pointing up along the right side of the V shaped labeled “Integration and Recomposition.” At this point the sections on the right “wing” of the V are labeled with “Changes and Upgrades” and (curving white space) “Retirement / Replacement.” Between the V shape there are a series of gray dashed arrows connecting the related sections on each left/right side of the V shape. 
The first arrow (top) is labeled “System Validation Plan” and connects “Concept of Operations” on the left and “System Validation” on the right. The second arrow down is labeled “System Verification Plan (System Acceptance)” and connects “System Requirements” on the left and “System Verification & Deployment” on the right. The third arrow down is labeled “Subsystem Verification Plan (Subsystem Acceptance)” and connects “High-Level Design” on the left and “Subsystem Verification” on the right. The last arrow (at the bottom) is labeled “Unit / Device Test Plan” and connects “Detailed Design” on the left and “Unit / Device Testing” on the right.)

The "V" starts with identifying the portion of the regional ITS architecture that is related to the project. Other artifacts of the planning and programming processes that are relevant to the project are collected and used as a starting point for project development. This is the first step in defining an ITS project.

Next, the agency develops a business case for the project. Technical, economic, and political feasibility is assessed; benefits and costs are estimated; and key risks are identified. Alternative concepts for meeting the project's purpose and need are explored, and the superior concept is selected and justified using trade study techniques. Once funding is available the project starts with the next step.

The "V" then begins to dip downwards to indicate an increased level of detail. The project stakeholders reach a shared understanding of the system to be developed and how it will be operated and maintained. The Concept of Operations is documented to provide a foundation for more detailed analyses that will follow. It will be the basis for the system requirements that are developed in the next step.

At the system requirements stage the stakeholder needs identified in the Concept of Operations are reviewed, analyzed, and transformed into verifiable requirements that define what the system will do but not how the system will do it. Working closely with stakeholders, the requirements are elicited, analyzed, validated, documented, and version controlled.

The system design is created based on the system requirements including a high-level design that defines the overall framework for the system. Subsystems of the system are identified and decomposed further into components. Requirements are allocated to the system components, and interfaces are specified in detail. Detailed specifications are created for the hardware and software components to be developed, and final product selections are made for off-the-shelf components.

Hardware and software solutions are created for the components identified in the system design. Part of the solution may require custom hardware and/or software development, and part may be implemented with off-the-shelf items, modified as needed to meet the design specifications. The components are tested and delivered ready for integration and installation.

Once the software and hardware components have been developed, the "V" changes direction to an upward slope. The various components are individually verified and then integrated to produce higher-level assemblies or subsystems. These assemblies are also individually verified before being integrated with others to produce yet larger assemblies, until the complete system has been integrated and verified.

Eventually, the system is installed in the operational environment and transferred from the project development team to the organization that will own and operate it. The transfer also includes support equipment, documentation, operator training, and other enabling products that support ongoing system operation and maintenance. Acceptance tests are conducted to confirm that the system performs as intended in the operational environment. A transition period and warranty ease the transition to full system operation.

After the ITS system has passed system verification and is installed in the operational environment, the system owner/operator, whether the state DOT, a regional agency, or another entity, runs its own set of tests to make sure (i.e., validate) that the deployed system meets the original needs identified in the Concept of Operations.

Once the customer has approved the ITS system, the system operates in its typical steady state. System maintenance is routinely performed and performance measures are monitored. As issues, suggested improvements, and technology upgrades are identified, they are documented, considered for addition to the system baseline, and incorporated as funds become available. An abbreviated version of the systems engineering process is used to evaluate and implement each change. This occurs for each change or upgrade until the ITS system reaches the end of its operational life.

Finally, operation of the ITS system is periodically assessed to determine its efficiency. If the cost to operate and maintain the system exceeds the cost to develop a new ITS system, the existing system becomes a candidate for replacement. A system retirement plan will be generated to retire the existing system gracefully.

To find more information on the systems engineering process, see the links provided in the References section of this supplement.

Test Plan from Sample Project

Test plan identifier

TP-C-DMS2-1

Introduction

Objectives

This test plan has been developed to define the process that the Agency will use in its incoming device testing to ensure that the DMS provided by the manufacturer fulfills all project requirements related to NTCIP 1203.

Project background

This project is a joint venture between Virginia Department of Transportation (VDOT) and Virginia Tech Transportation Institute (VTTI) to act as a test case for the NTCIP Dynamic Message Sign (DMS) 1203 Version 2.25 standard. The goal of the project is for VDOT and VTTI to use the more user-friendly Specification Guide to write a specification and procure a message sign and controller as well as a separate third-party central software package. Once procured, VDOT and VTTI will develop test procedures and then perform the tests on each sign as well as test how the sign(s) and software interoperate. The goal of this project is to investigate the process more than the final product.

The name of the project is: Phase A and B of Rapid Deployment and Testing of the Updated DMS Standards.

This test plan covers a full test of the NTCIP 1203-related requirements for the DMS. This includes requirements related to:

- Data formats (e.g., the encoding of data over the communications channel),

- Data exchange procedures (e.g., the proper sequencing of data), and

- Related end-user functionality (e.g., ensuring that the sign blanks when commanded to do so via the NTCIP interface).

Other test plans may supplement this test plan by performing a sub-set of tests (e.g., to spot-check performance of the same sign using a different communications profile or to spot-check subsequent signs delivered under the same contract).

References

Test Procedures for the Virginia Early Deployment of NTCIP DMSv2 (Version 01.03), FHWA, February 21, 2006.

See Appendix A for additional project-specific references

Definitions

The following terms shall apply within the scope of this test plan.

Agency Project Manager - Person designated by the organization purchasing the equipment to be responsible for overseeing the successful deployment of the equipment.

Completed PRL - A PRL that has been completely filled out for a given project.

Component - One of the parts that make up a system. A component may be hardware or software and may be subdivided into other components. (from IEEE 610.12) Within this document, the term shall mean either a DMS or a management station.

DMS - A Dynamic Message Sign - The sign display, controller, cabinet, and other associated field equipment.

DMS Test Procedures - Test Procedures for the Virginia Early Deployment of NTCIP DMSv2.

Developer - The organization providing the equipment to be tested. In the case of integration testing, there may be multiple developers.

Final Completed PRL - A Completed PRL that reflects all revisions that have been accepted to date for the given project.

PRL - Protocol Requirements List - A matrix linking all standardized user needs to standardized functional requirements, an indication of the conformance requirements for each user need and functional requirement, and an area to select the requirement and/or add project-specific limitations to the requirement.

System - The combination of the DMS and the management station with associated communications infrastructure.

Test Analyst - The person who performs the testing according to the test procedures and interprets and records the results.

Test Manager - The designated point-of-contact for the entire test team. Frequently, this is the same person as the Test Analyst.

Items to be tested

This test plan will test the NTCIP-related operation of a DMS. The version and revision of the equipment to be tested shall be recorded on the Test Item Transmittal.

The following documents will provide the basis for defining correct operation, with the document of highest precedence listed last:

- Virginia Tech RFP # 655616

- Addendum #1 to RFP #655616

- Virginia Tech Purchase Order PO0601509

NOTE: The above documents reference specific versions of NTCIP standards that are the originating source of many requirements to be tested. Correct operations are defined by the specific versions of the standards as referenced in the above documentation (unless explicitly overridden by other documents); the specific version numbers are not listed in this test plan in order to avoid the introduction of any inconsistency.

Features to be tested

All requirements selected in the NTCIP 1203 Final Completed Protocol Requirements List (PRL) shall be tested.

Features not to be tested

Features that are not defined in NTCIP 1203 are not directly covered by this test plan. These features typically include, but are not limited to:

- Lower layer communication protocol details;

- Environmental operating requirements;

- Construction and material requirements;

- Power anomaly requirements;

- Performance requirements; and

- Security requirements.

While some aspects of these features may be tested (e.g., all NTCIP 1203 communications rely upon the basic operation of lower layer protocols, tests may include verification of those performance requirements defined in NTCIP 1203, etc.), this test plan does not focus on these types of requirements because they are not the focus of NTCIP 1203.

Approach

The Test Analyst will perform each selected test case from the DMS Test Procedures. A test case shall be deemed to be selected if it traces from a requirement selected in the Final Completed PRL and the requirement is referenced under the "Features to be tested" section of this test plan. The tracing of requirements to test cases is provided in Section 2.2 of the DMS Test Procedures.
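The selection rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PrlEntry` class, the `select_test_cases` function, and the test case IDs (`TC001`, and so on) are hypothetical names invented for this example and do not come from the DMS Test Procedures. The requirement IDs are taken from the sample PRL in this supplement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrlEntry:
    """One requirement row of a Final Completed PRL (hypothetical structure)."""
    requirement_id: str  # e.g. "3.3.1.1"
    selected: bool       # True when "Yes" is chosen in the Support column


def select_test_cases(prl, traceability):
    """Return test case IDs that trace from at least one selected requirement.

    prl: iterable of PrlEntry
    traceability: dict mapping requirement ID -> list of test case IDs
    Order of first appearance is preserved and duplicates are dropped.
    """
    selected_cases = []
    seen = set()
    for entry in prl:
        if not entry.selected:
            continue  # requirement not selected, so its test cases do not apply
        for case_id in traceability.get(entry.requirement_id, []):
            if case_id not in seen:
                seen.add(case_id)
                selected_cases.append(case_id)
    return selected_cases


# Hypothetical data for illustration only.
example_prl = [
    PrlEntry("3.3.1.1", True),
    PrlEntry("3.3.1.2", True),
    PrlEntry("3.4.1.4.1", False),
]
example_traceability = {
    "3.3.1.1": ["TC001"],
    "3.3.1.2": ["TC001", "TC002"],
    "3.4.1.4.1": ["TC010"],
}

print(select_test_cases(example_prl, example_traceability))  # ['TC001', 'TC002']
```

A test case that traces only from an unselected requirement (here the hypothetical `TC010`) is left out, which mirrors the rule that only requirements selected in the Final Completed PRL drive the test scope.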

Item pass/fail criteria

In order to pass the test, the DMS shall pass all test procedures included in this test plan without demonstrating any characteristic that fails to meet project requirements.
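As a rough sketch of this all-or-nothing criterion, the function below treats the item as passing only when every selected test procedure has a recorded passing result. The function name, the procedure IDs, and the `"pass"`/`"fail"` strings are assumptions made for this example; treating an unexecuted procedure as not passed is also an assumption, not a rule stated in the test plan.

```python
def item_passes(selected_procedures, results):
    """Return True only if every selected procedure has a recorded "pass".

    selected_procedures: iterable of procedure IDs included in the test plan
    results: dict mapping procedure ID -> "pass" or "fail"
    A procedure with no recorded result is treated as not passed (assumption).
    """
    return all(results.get(proc) == "pass" for proc in selected_procedures)


# Hypothetical procedure IDs and outcomes for illustration only.
procedures = ["TP-1", "TP-2", "TP-3"]

all_passed = {"TP-1": "pass", "TP-2": "pass", "TP-3": "pass"}
one_failed = {"TP-1": "pass", "TP-2": "fail", "TP-3": "pass"}
one_missing = {"TP-1": "pass", "TP-2": "pass"}

print(item_passes(procedures, all_passed))   # True
print(item_passes(procedures, one_failed))   # False
print(item_passes(procedures, one_missing))  # False
```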

Suspension criteria and resumption requirements

The test may be suspended at the convenience of test personnel between the performance of any two test procedures. The test shall always resume at the start of a selected test procedure.

If any modifications are made to the DMS, a complete regression test may be required in order to pass this test plan.

Test deliverables

The Test Manager will ensure that the following documents are developed and entered into the configuration management system upon their completion:

- The NTCIP 1203 Test Plan (this document, including Appendix A)

- The specific versions of all documents referenced by these documents, including, but not limited to:

  - DMS Test Procedures

  - NTCIP 1203

  - NTCIP 1102

  - NTCIP 1103

  - NTCIP 1201

- The Test Log (an example format is provided in Annex C)

- The Test Summary (an example format is provided in Annex D)

- Any and all Test Incident Reports (an example format is provided in Annex E)

All test documentation will be made available to both the Agency and the Developer.

All test documentation will be made available in a widely recognized computer file format such as Microsoft Word or Adobe Acrobat. In addition, the files from the test software shall be provided in their native file format as defined by the test software.

Testing tasks

Table A-1: Testing Tasks

| Task Number | Task Name | Predecessor | Responsibility | NTCIP Knowledge Level (low = 1 to high = 5) |
|---|---|---|---|---|
| 1 | Finalize Test Plan | Finalize Completed PRL | Test Manager | 2 |
| 2 | Complete the Test Item Transmittal Form and transmit the component to the Test Group | Implement DMS Standard | Developer | 1 |
| 3 | Perform Tests and produce Test Log and Test Incident Reports | 2 | Test Analyst | 5 |
| 4 | Resolve Test Incident Reports | 3 | Developer, Test Manager | 2 |
| 5 | Repeat Steps 2-4 until all test procedures have succeeded | 4 | N/A | N/A |
| 6 | Prepare the Test Summary report | 5 | Test Analyst | 2 |
| 7 | Transmit all test documentation to the Agency Project Manager | 6 | Test Manager | 1 |

Environmental needs

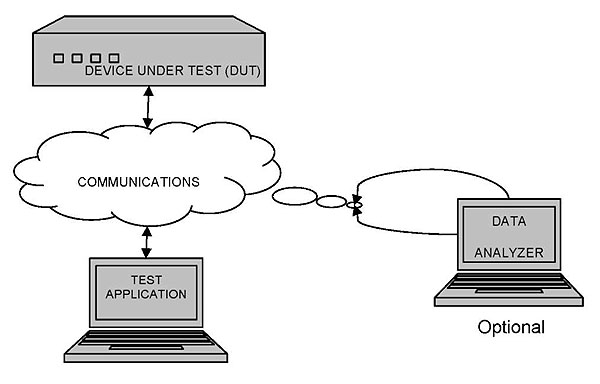

All Test Cases covered by this test plan require the device under test to be connected to a test application as depicted in Figure A-1. A data analyzer may also be used to capture the data exchanged between the two components. The test environment should be designed to minimize any complicating factors that may result in anomalies unrelated to the specific test case. Failure to isolate such variables in the test environment may produce false test results. For example, the device may be conformant with the standard, but communication delays could result in timeouts and be misinterpreted as failures.

Figure A-1: Field Device Test Environment

(Extended Text Description: Graphic illustration entitled "Figure A-1: Field Device Test Environment." This black and white illustrative graphic has a three-dimensional, long, narrow rectangular box at the top, with four small squares to the left side on the front face of the rectangular box. The words "Device Under Test (DUT)" are placed below and slightly to the right of the four small squares. There is a short bi-directional arrow pointing up to the rectangular box and down to a white cloud marked "Communications" in the center of the cloud image. There is a second short bi-directional arrow below the cloud pointing up to the cloud and down to an image of an open laptop computer. The words "Test Application" are in the center of the screen on the illustrated laptop computer. To the right of the cloud, there are three oval bubbles, descending in size from left to right, leading slightly down to another graphical laptop image to the lower right of the entire graphic. This second laptop has the words "Data Analyzer" on its screen. The word "Optional" appears below this second graphical laptop image. There are two curving uni-directional arrows, one from the top of the second laptop screen and the second from the left side. Both arrows point to the third, smallest oval bubble descending from the "Communications" cloud.)

The specific test software and data analyzer to be used are identified in the Tools clause of the Approach section of this test plan.

The tests will be performed at the Virginia Tech Transportation Institute. This location will provide the following:

- Access to power outlets for the test equipment; and

- Workspace for the Test Analyst that is protected from the elements.

Tools

The following software will be used for the testing:

- NTESTER Version 2.0

- FTS for NTCIP

Communications environment

All tests shall be performed using the following communications environment, unless otherwise defined in the specific test procedure.

Connection Type: RJ-45 Ethernet

Subnet Profile: NTCIP 2104 - Ethernet

Transport Profile: NTCIP 2202 - Internet

Read Community Name: public

Write Community Name: administrator

Timeout Value: 200 ms
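One way to keep these settings consistent across test runs is to record them once in a small configuration object and derive per-procedure variants from it. The sketch below is illustrative only: the class name, field names, and the 1000 ms override are assumptions made for this example and are not part of NTESTER, FTS for NTCIP, or any NTCIP standard. Only the default values come from this test plan.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CommunicationsEnvironment:
    """Default communications settings for the test plan (hypothetical structure)."""
    connection_type: str
    subnet_profile: str
    transport_profile: str
    read_community: str
    write_community: str
    timeout_ms: int

    @property
    def timeout_seconds(self) -> float:
        # Many network libraries expect timeouts in seconds rather than milliseconds.
        return self.timeout_ms / 1000


# Values copied from the communications environment listed in this test plan.
DEFAULT_ENVIRONMENT = CommunicationsEnvironment(
    connection_type="RJ-45 Ethernet",
    subnet_profile="NTCIP 2104 - Ethernet",
    transport_profile="NTCIP 2202 - Internet",
    read_community="public",
    write_community="administrator",
    timeout_ms=200,
)

# A test procedure that defines its own environment overrides only what differs.
# The 1000 ms value is a made-up example, not a value from the test procedures.
slow_link_environment = replace(DEFAULT_ENVIRONMENT, timeout_ms=1000)

print(DEFAULT_ENVIRONMENT.timeout_seconds)    # 0.2
print(slow_link_environment.timeout_seconds)  # 1.0
```

Making the object immutable (`frozen=True`) means a procedure-specific override cannot silently change the defaults used by later procedures.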

Responsibilities

The following roles are defined in this test plan:

- Agency Project Manager - The Agency Project Manager shall be responsible for:

  - Approving the Test Plan;

  - Working with the Test Manager to address any concerns (e.g., balancing the desire for a perfect implementation against the political pressure to finish the project);

  - Providing the test environment;

  - Witnessing the performance of the tests;

  - Receiving and checking the test results; and

  - Final acceptance of the component.

- Test Analyst - The Test Analyst shall be responsible for:

  - Designing any custom test procedures;

  - Preparing the test environment; and

  - Executing the tests according to the test plan.

- Test Manager - The Test Manager shall be responsible for:

  - Managing the overall testing process and the test personnel;

  - Finalizing the test plan;

  - Providing the test tools; and

  - Checking the test results.

- Developer - The Developer shall be responsible for:

  - Providing the test items with their associated transmittal reports;

  - Ensuring that the test personnel are able to properly connect the equipment;

  - (Optionally) witnessing the performance of the tests;

  - Checking the test results; and

  - Resolving any areas of non-conformance identified.

Staffing and training needs

The following staffing is expected for this test plan:

- Agency Project Manager - 1

- Test Manager - 1

- Developer - 1

If the Agency Project Manager is not familiar with NTCIP Testing, s/he should become familiar with NTCIP 9012 and FHWA guidance on the procurement of ITS systems. The Test Manager and Test Analyst must be familiar with how to use the test software. Many software systems come with extensive on-line help, but the test personnel may also need detailed knowledge of the NTCIP standards to fully perform their duties.

Schedule

Testing will commence within 4 weeks of the receipt of the hardware from the manufacturer. The testing is expected to take one week of on-site time followed by one additional week of work to prepare the report.

Our initial plan is to allow for three rounds of testing with each round of testing separated by six weeks to allow for the developer to correct the discovered defects.

Risks and contingencies

If the sign repeatedly fails the testing procedures it may be returned to the manufacturer for repair. The decision to return the sign is at the discretion of the project committee. The repaired sign will be retested prior to acceptance.

The Developer of the DMS shall correct any problems identified with the DMS. Upon completion of the modifications, the Developer shall re-submit the component for another complete test consisting of all test cases.

Approvals

_________________ ____

Test Manager Date

_________________ ____

Agency Project Manager Date

_________________ ____

Developer Date

Portion of a PRL from a Sample Project

|

User Need ID |

User Need |

FR ID |

Functional Requirement |

Conformance |

Support |

Additional Specifications |

|---|---|---|---|---|---|---|

2.1.2 |

DMS Characteristics |

M |

Yes |

|||

2.1.2.1 |

DMS Type |

M |

Yes |

|||

2.1.2.1.1 (BOS) |

BOS |

0.1 (1) |

Yes / No |

|||

2.1.2.1.2 (CMS) |

CMS |

0.1 (1) |

Yes / No |

|||

2.1.2.1.3 (VMS) |

VMS |

0.1 (1) |

Yes / No |

|||

2.1.2.2 |

DMS Technology |

M |

Yes |

|||

2.1.2.2.1 (Fiber) |

Fiber |

0 |

Yes / No |

|||

2.1.2.2.2 (LED) |

LED |

0 |

Yes / No |

|||

2.1.2.2.3 (Flip/Shutter) |

Flip/Shutter |

0 |

Yes / No |

|||

2.1.2.2.4 (Lamp) |

Lamp |

0 |

Yes / No |

|||

2.1.2.2.5 (Drum) |

Drum |

0 |

Yes / No |

|||

2.1.2.3 |

Physical Configuration of Sign |

M |

Yes |

The DMS shall be 2100 millimeters wide and 7950 millimeters high, inclusive of borders. The Sign's Border shall be at least 50 millimeters wide and 50 millimeters high. The DMS shall support the following Beacon configuration: NONE |

||

2.1.2.3.1 |

Non-Matrix |

0.2 (1) |

Yes / No |

|||

2.1.2.3.2 (Matrix) |

Matrix |

0.2 (1) |

Yes / No |

The pitch between pixels shall be at least 75 millimeters, measured between the center of each pixel. |

||

2.1.2.3.2.1 |

Full Matrix |

0.3(1) |

Yes / No |

The sign shall be 105 pixels wide and 27 pixels high. |

||

2.1.2.3.2.2 |

Line Matrix |

0.3(1) |

Yes / No |

|||

2.1.2.3.2.3 |

Character Matrix |

0.3(1) |

Yes / No |

|||

2.3.2 |

Operational Environment |

M |

Yes |

|||

2.3.2.1 |

Live Data Exchange |

M |

Yes |

|||

3.3.1.1 |

Retrieve Data |

M |

Yes |

|||

3.3.1.2 |

Deliver Data | M | Yes |

|||

3.3.1.3 |

Explore Data |

M |

Yes |

|||

3.3.4.1 |

Determine Current Access Settings |

M |

Yes |

|||

3.3.4.2 |

Configure Access |

M |

Yes |

The DMS shall support at least 128 users in addition to the administrator. |

||

2.3.2.2 |

Logged Data Exchange |

0 |

Yes / No |

|||

D.3.1.2.11 |

Set Time |

0 |

Yes / No |

|||

D.3.1.2.2 |

Set Time Zone |

0 |

Yes / No |

|||

D.3.1.2.3 |

Set Daylight Savings Mode |

0 |

Yes / No |

|||

D.3.1.2.4 |

Verify Current Time |

0 |

Yes / No |

|||

D.3.2.2 |

Supplemental Requirements for Event Monitoring |

0 |

Yes / No |

|||

3.3.2.1 |

Determine Current Configuration of Logging Service |

M |

Yes |

|||

3.3.2.2 |

Configure Logging Service |

M |

Yes |

|||

3.3.2.3 |

Retrieve Logged Data |

M |

Yes |

|||

3.3.2.4 |

Clear Log |

M |

Yes |

|||

3.3.2.5 |

Determine Capabilities of Event Logging Service |

M |

Yes |

|||

3.3.2.6 |

Determine Total Number of Events |

M |

Yes |

|||

2.3.2.3 |

Exceptional Condition Reporting |

0 |

Yes / No |

|||

D.3.1.2.1 |

Set Time |

0 |

Yes / No |

|||

D.3.1.2.2 |

Set Time Zone |

0 |

Yes / No |

|||

D.3.1.2.3 |

Set Daylight Savings Mode |

0 |

Yes / No |

|||

D.3.1.2.4 |

Verify Current Time |

0 |

Yes / No |

|||

D.3.2.2 |

Supplemental Requirements for Event Monitoring |

0 |

Yes / No |

|||

3.3.3.1 |

Determine Current Configuration of Exception Reporting Service |

M |

Yes |

|||

3.3.3.2 |

Configuration of Events |

M |

Yes |

|||

3.3.3.3 |

Automatic Reporting of Events (SNMP Traps) |

M |

Yes |

|||

3.3.3.4 |

Manage Exception Reporting |

M |

Yes |

|||

3.3.3.5 |

Determine Capabilities of Exception Reporting Service |

M |

Yes |

|||

2.4 |

Features |

M |

Yes |

|||

2.4.1 |

Manage the DMS Configuration |

M |

Yes |

|||

2.4.1.1 |

Determine the DMS Identity |

M |

Yes |

|||

3.4.1.1.1 |

Determine Sign Type and Technology |

M |

Yes |

|||

D.3.1.1 |

Determine Device Component Information |

0 |

Yes / No |

|||

D.3.1.4 |

Determine Supported Standards |

0 |

Yes / No |

|||

2.4.1.2 |

Determine Sign Display Capabilities |

0 |

Yes / No |

|||

3.4.1.2.1.1 |

Determine the Size of the Sign Face |

M |

Yes |

|||

3.4.1.2.1.2 |

Determine the Size of the Sign Border |

M |

Yes |

|||

3.4.1.2.1.3 |

Determine Beacon Type |

M |

Yes |

|||

3.4.1.2.1.4 |

Determine Sign Access and Legend |

M |

Yes |

|||

3.4.1.2.2.1 |

Determine Sign Face Size in Pixels |

Matrix: M |

Yes |

|||

3.4.1.2.2.2 |

Determine Character Size in Pixels |

Matrix: M |

Yes |

|||

3.4.1.2.2.3 |

Determine Pixel Spacing |

Matrix: M |

Yes |

|||

3.4.1.2.3.1 |

Determine Maximum Number of Pages |

VMS:M |

Yes |

|||

3.4.1.2.3.2 |

Determine Maximum Message Length |

VMS:M |

Yes |

|||

3.4.1.2.3.3 |

Determine Supported Color Schemes |

VMS:M |

Yes |

|||

3.4.1.2.3.4 |

Determine Message Display Capabilities |

VMS:M |

Yes |

|||

3.4.1.3.1 |

Determine Number of Fonts |

Fonts:M |

Yes |

|||

3.4.1.3.3 |

Determine Supported Characters |

Fonts:M |

Yes |

|||

3.4.1.3.4 |

Retrieve a Font Definition |

Fonts:M |

Yes |

|||

3.4.1.3.7 |

Validate a Font |

Fonts:M |

Yes |

|||

3.4.1.4.1 |

Determine Maximum Number of Graphics |

Graphics:M |

Yes / NA |

|||

3.4.1.4.4 |

Retrieve a Graphic Definition |

Graphics:M |

Yes / NA |

|||

3.4.1.4.7 |

Validate a Graphic |

Graphics:M |

Yes / NA |

|||

3.4.1.4.8 |

Determine Graphic Spacing |

Graphics:M |

Yes / NA |

|||

3.4.2.3.9.1 |

Determine Default Message Display Parameters |

VMS:M |

Yes |

|||

3.4.3.2.1 |

Monitor Information about the Currently Displayed Message |

O |

Yes / No |

|||

3.4.3.2.2 |

Monitor Dynamic Field Values |

Fields:M |

Yes |

|||

3.5.6 |

Supplemental Requirements for Message Definition |

VMS:M |

Yes |

|||

2.4.1.3 (Fonts) |

Manage Fonts |

VMS:O |

Yes / No / NA |

|||

3.4.1.3.1 |

Determine Number of Fonts |

M |

Yes |

|||

3.4.1.3.2 |

Determine Maximum Character Size |

M |

Yes |

|||

3.4.1.3.3 |

Determine Supported Characters |

M |

Yes |

|||

3.4.1.3.4 |

Retrieve a Font Definition |

M |

Yes |

|||

3.4.1.3.5 |

Configure a Font |

M |

Yes |

|||

3.4.1.3.6 |

Delete a Font |

M |

Yes |

|||

3.4.1.3.7 |

Validate a Font |

M |

Yes |

|||

3.5.1 |

Supplemental Requirements for Fonts |

M |

Yes |

|||

2.4.1.4 (Graphics) |

Manage Graphics |

VMS:O |

Yes / No / NA |

VDOT desires to implement the Graphics option on this project. However, the decision to implement Graphics will be based on pricing submitted by bidders to include this functionality. |

||

3.4.1.4.1 |

Determine Maximum Number of Graphics |

M |

Yes |

|||

3.4.1.4.2 |

Determine Maximum Graphic Size |

M |

Yes |

|||

1. References to clauses in Annex D refer to items that may eventually be moved to NTCIP 1201.
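The conformance column of the PRL can be read mechanically. The sketch below is illustrative only and is not part of the sample project: it assumes that "M" means mandatory, "O" means optional, and a prefixed entry such as "VMS:M" or "Graphics:M" applies only when the named option has been selected for the project. The function names, the option set, and the support answers in the sample rows are hypothetical.

```python
def is_required(conformance, selected_options):
    """Return True if a PRL conformance entry makes the item mandatory.

    conformance: 'M', 'O', or a conditional entry such as 'VMS:M'.
    selected_options: set of option names chosen for the project (assumed).
    """
    predicate, _, status = conformance.rpartition(":")
    if predicate and predicate.strip() not in selected_options:
        return False  # the option was not selected, so the entry does not apply
    return status.strip().upper() == "M"


def unmet_requirements(rows, selected_options):
    """List the IDs of mandatory rows whose support answer is not 'Yes'."""
    return [
        req_id
        for req_id, _title, conformance, support in rows
        if is_required(conformance, selected_options) and support != "Yes"
    ]


# Hypothetical support answers for a few rows taken from the PRL above.
rows = [
    ("3.4.1.2.2.1", "Determine Sign Face Size in Pixels", "Matrix:M", "Yes"),
    ("3.4.1.3.1", "Determine Number of Fonts", "Fonts:M", "Yes"),
    ("3.4.1.4.1", "Determine Maximum Number of Graphics", "Graphics:M", "NA"),
    ("D.3.1.2.1", "Set Time", "O", "No"),
]

# Assumption: the project selects VMS, Matrix, and Fonts but not Graphics.
selected = {"VMS", "Matrix", "Fonts"}
print(unmet_requirements(rows, selected))
```

If Graphics were later added to the selected options, the row for 3.4.1.4.1 would be reported, because its support answer is "NA" rather than "Yes".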

Portion of the Requirements to Test Case Traceability Matrix from a Sample Project

| Requirement ID | Requirement Title | Test Case ID | Test Case Title |
|---|---|---|---|
| 3.4 | Data Exchange Requirements | | |
| 3.4.1 | Manage the DMS Configuration | | |
| 3.4.1.1 | Identify DMS | | |
| 3.4.1.1.1 | Determine Sign Type and Technology | | |
| | | TC1.1 | Determine Sign Type and Technology |
| 3.4.1.2 | Determine Message Display Capabilities | | |
| 3.4.1.2.1 | Determine Basic Message Display Capabilities | | |
| 3.4.1.2.1.1 | Determine the Size of the Sign Face | | |
| | | TC1.2 | Determine the Size of the Sign Face |
| 3.4.1.2.1.2 | Determine the Size of the Sign Border | | |
| | | TC1.3 | Determine Size of the Sign Border |
| 3.4.1.2.1.3 | Determine Beacon Type | | |
| | | TC1.4 | Determine Beacon Type |
| 3.4.1.2.1.4 | Determine Sign Access and Legend | | |
| | | TC1.5 | Determine Sign Access and Legend |
| 3.4.1.2.2 | Determine Matrix Capabilities | | |
| 3.4.1.2.2.1 | Determine Sign Face Size in Pixels | | |
| | | TC1.6 | Determine Sign Face Size in Pixels |
| 3.4.1.2.2.2 | Determine Character Size in Pixels | | |
| | | TC1.7 | Determine Character Size in Pixels |
| 3.4.1.2.2.3 | Determine Pixel Spacing | | |
| | | TC1.8 | Determine Pixel Spacing |
| 3.4.1.2.3 | Determine VMS Message Display Capabilities | | |
| 3.4.1.2.3.1 | Determine Maximum Number of Pages | | |
| | | TC1.9 | Determine Maximum Number of Pages |
| 3.4.1.2.3.2 | Determine Maximum Message Length | | |
| | | TC1.10 | Determine Maximum Message Length |
| 3.4.1.2.3.3 | Determine Supported Color Schemes | | |
| | | TC1.11 | Determine Supported Color Schemes |
| 3.4.1.2.3.4 | Determine Message Display Capabilities | | |
| | | TC1.12 | Determine Message Display Capabilities |
| 3.4.1.2.4 | Delete Messages of Message Type | | |
| | | TC7.11 | Verify Message Deletion by Message Type |
| 3.4.1.3 | Manage Fonts | | |
| 3.4.1.3.1 | Determine Number of Fonts | | |
| | | TC2.1 | Determine Number of Fonts |
| 3.4.1.3.2 | Determine Maximum Character Size | | |
| | | TC2.2 | Determine Maximum Character Size |
| 3.4.1.3.3 | Determine Supported Characters | | |
| | | TC2.3 | Determine Supported Characters |
| 3.4.1.3.4 | Retrieve a Font Definition | | |
| | | TC2.4 | Retrieve a Font Definition |
| 3.4.1.3.5 | Configure a Font | | |
| | | TC2.5 | Configure a Font |
| | | TC2.6 | Attempt to Configure a Font that is In Use |
| 3.4.1.3.6 | Delete a Font | | |
| | | TC2.7 | Delete a Font |
| | | TC2.8 | Attempt to Delete a Font that is In Use |
| 3.4.1.3.7 | Validate a Font | | |
| | | TC2.9 | Verify Font CRC Change |