Module 18 - T313

T313: Applying Your Test Plan to Environmental Sensor Stations (ESS) Based on NTCIP 1204 v04 ESS Standard

HTML of the PowerPoint Presentation

(Note: This document has been converted from a PowerPoint presentation to 508-compliant HTML. The formatting has been adjusted for 508 compliance, but all the original text content is included, plus additional text descriptions for the images, photos and/or diagrams have been provided below.)

Slide 1:

(Extended Text Description: Welcome - Graphic image of introductory slide. A large dark blue rectangle with a wide, light grid pattern at the top half and bands of dark and lighter blue bands below. There is a white square ITS logo box with words "Standards ITS Training - Transit" in green and blue on the middle left side. The word "Welcome" in white is to the right of the logo. Under the logo box is the logo for the U.S. Department of Transportation, Office of the Assistant Secretary for Research and Technology.)

Slide 2:

(Extended Text Description: This slide, entitled "Welcome" has a photo of Ken Leonard, Director, ITS Joint Program Office, on the left hand side, with his email address, Ken.Leonard@dot.gov. A screen capture snapshot of the home webpage is found on the right hand side - for illustration only - from August 2014. Below this image is a link to the current website: www.its.dot.gov/pcb - this screen capture snapshot shows an example from the Office of the Assistant Secretary for Research and Development - Intelligent Transportation Systems Joint Program Office - ITS Professional Capacity Building Program/Advanced ITS Education. Below the main site banner, it shows the main navigation menu with the following items: About, ITS Training, Knowledge Exchange, Technology Transfer, ITS in Academics, and Media Library. Below the main navigation menu, the page shows various content of the website, including a graphic image of professionals seated in a room during a training program. A text overlay has the text Welcome to ITS Professional Capacity Building. Additional content on the page includes a box entitled What's New and a section labeled Free Training. Again, this image serves for illustration only. The current website link is: https://www.its.dot.gov/pcb.)

Slide 3:

T313:

Applying Your Test Plan to Environmental Sensor Stations (ESS) Based on NTCIP 1204 v04 ESS Standard

(Extended Text Description: Title slide: This slide displays the title of the course, "T313: Applying Your Test Plan to Environmental Sensor Stations (ESS) Based on NTCIP 1204 v04 ESS Standard" with an image of a technician at the control panel of a weather station in the field.)

Slide 4:

Instructor

Kenneth Vaughn, P.E.

President

Trevilon LLC

Magnolia, TX, USA

Slide 5:

Learning Objectives

- Describe the role of test plans and the testing to be undertaken

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

- Describe the application of a good test plan to an ESS system being procured

- Describe the testing of an ESS using standard procedures

Slide 6:

Learning Objective 1

- Describe the role of test plans and the testing to be undertaken

Slide 7:

What Is an Environmental Sensor Station (ESS)?

ESS Capabilities

- May remotely monitor:

- Wind speed and direction

- Temperature, humidity, and pressure

- Precipitation type and rate

- Snow accumulation

- Visibility

- Pavement conditions

- Radiation

- Water level

- Air quality

- Can also support:

- Snapshot cameras

- Pavement treatment systems

- "Station" may be mobile

Source: Intelligent Devices, Inc.

Slide 8:

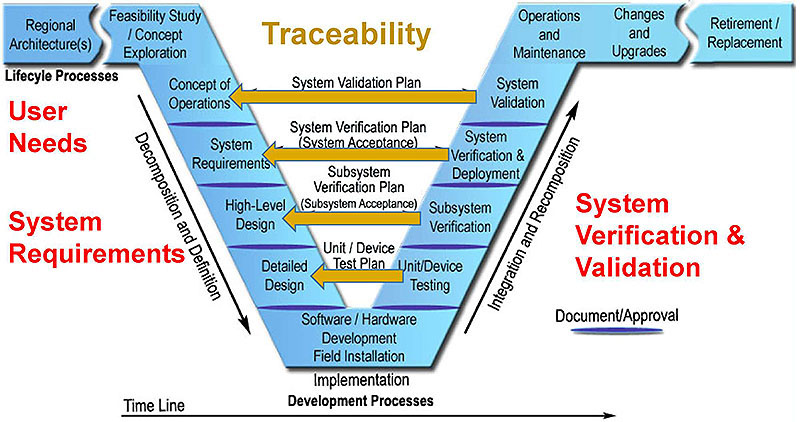

Review System Lifecycle

Testing and the System Lifecycle

(Extended Text Description: Author's relevant description: Testing and the system lifecycle: This slide presents the systems engineering "V" diagram with the right side of the diagram highlighted and the text "Testing to be undertaken" added off to the right side. Key Message: Testing is a key part of the system lifecycle. However, specifying and acquiring a device is only half the job. Just because a device works with the current system does not mean that it is conformant to the standard or that it will work with your next central system. The goal of requiring conformance to a standard is to ensure that the product will interoperate with any conforming system over a prolonged period. This is achieved by following the systems engineering lifecycle. System Validation, System Verification & Deployment, Subsystem Verification and Unit/Device Testing are highlighted in red.)

Slide 9:

Why We Test

To Confirm That the System Will Work as Intended

(Extended Text Description: Author's relevant description: To Confirm that the System will Work as Intended: This slide presents the systems engineering "V" diagram. The text "User Needs" and "System Requirements" appear on the left and then the text "System Verification and Validation" appears on the right. Then, each of the connecting arrows across the center are highlighted pointing from right to left indicating that unit/device testing verifies the detailed design, subsystem verification verifies high-level design, system verification and deployment verifies system requirements, and system validation validates the concept of operations. Finally, the word "Traceability" appears on top to indicate that all of the verification tests on the right side should be traceable to elements on the left side of the "V". Key Message: Focus upon the traceability of testing to the underlying requirements and foundational user needs.)

Slide 10:

Why We Test

To Confirm That the System Will Work as Intended

Testing can objectively ensure that a system is:

- Validated: Solves the right problem

- Satisfies the user needs

- Verified: Solves the problem right (correctly)

- Satisfies the system requirements as designed

NTCIP standardizes user needs and requirements

NTCIP Testing verifies

- Compliance: Supports selected needs and requirements

- Conformance: Implements per standardized design

Slide 11:

Test Documentation

There's a Standard for That!

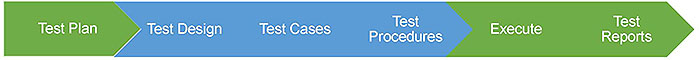

(Extended Text Description: The top of the slide shows a series of progressive arrows that show the following from left to right: "Test Plan", "Test Design", "Test Cases", "Test Procedures", "Execute", and "Test Reports".)

Testing is a general Information Technology need

Topic of IEEE 829-2008

IEEE Standard for Software and System Test Documentation

Slide 12:

ESS Test Plan

Test Plan Overview

(Extended Text Description: The top of the slide shows the same series of progressive arrows that show the following from left to right: "Test Plan", "Test Design", "Test Cases", "Test Procedures", "Execute", and "Test Reports" with the first arrow highlighted in blue.)

A Test Plan answers the key questions

(Extended Text Description: A picture of a pole with road signs indicating direction for various destinations with one of the following questions as the text for each destination: "What?", "Where?", "Who?", "When?", "Why?", and "How?".)

Slide 13:

ESS Test Plan

Who Is Responsible for Testing Tasks?

Different people may:

- Provide items to be tested

- Provide the test facility

- Set up the test environment

- Perform and report on the test

Each requires unique skills and resources

NTCIP Testing may be performed by:

- Agency: May not know NTCIP details

- Vendor: Conflict of interest issues

- 3rd Party: May be difficult to access

Slide 14:

ESS Test Plan

What Items Will Be Tested?

Different test plans will typically be used to test:

- Software modules

- Components

- The system as a whole

NTCIP Testing generally tests one component

NTCIP 1204 testing generally tests either:

- The ESS (the controller and connected sensors), or

- The manager that communicates with the ESS

Slide 15:

ESS Test Plan

What Requirements Will Be Tested?

Different test plans may be used to test:

- Communications

- Functionality

- Performance

- Hardware

- Environmental

NTCIP Testing: testing of communication interface

Test Plan should identify what else will be tested

- Will sensor values be compared against actual conditions?

- Will communication response times be measured?

- Will communications be tested with power outages?

Slide 16:

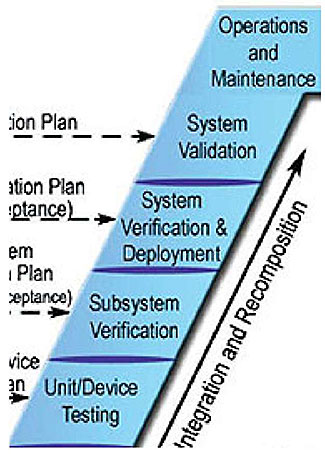

ESS Test Plan

When Will It Be Tested?

(Extended Text Description: Author's relevant description: The left side of the slide depicts the right side of the systems engineering "V" diagram. Key Message: The test plan should also define when the associated testing will be performed. Testing occurs throughout the right side of the V-diagram, with each stage designed to build the system from smaller tested components. The final round of testing focuses on ensuring that the delivered product fully meets the user needs it was designed to fulfill. Each stage of testing should have its own test plan and may have multiple plans testing different sets of requirements. NTCIP testing is generally performed during subsystem verification, which may include other testing as well (e.g., environmental testing). Subsystem testing (i.e., testing a component like an ESS) occurs after unit testing but before system testing. In the case of ESS, it often occurs on a deployed station due to the desire to collect real sensor data. The deployed device may be a sample device hosted at the manufacturer's facility or may be one of the first installations of the device for the project. The test plan should define what preconditions are required for testing to begin. The "when" aspect of the test plan may also include a set of tentative dates so that the various involved parties are able to properly prepare for the test with defined contingencies if the schedule slips. NTCIP testing can also be included in other stages of the project. All of this should be documented in the test plan documents.)

Right side of the V-diagram

Each stage may have one or more test plans

NTCIP testing

- Typically during subsystem verification

- May be included in other stages

Slide 17:

ESS Test Plan

Where Will It Be Tested?

Need to describe the test environment:

- Bench: limited sensor data

- Laboratory: simulated data (price?)

- Real-world: real data

- Difficult to test limits

- Safety implications?

Location of tester

- Local testing: Lower response times

- Remote testing: Lower costs?

NTCIP 1204 testing may use any

- Trade-offs should be considered

Slide 18:

ESS Test Plan

Why Is It Being Tested?

Verify conformance

Verify compliance (project-specific?)

Other practical reasons

- Requirement for acceptance

- Pay item

- Approval to move to next phase of project

- Troubleshooting

Slide 19:

ESS Test Plan

How Will It Be Tested?

Test plan describes tools required

NTCIP testing uses test software

- Performs role of one component

- Often automates portion of step-by-step procedures

- May be supplemented by data analyzer

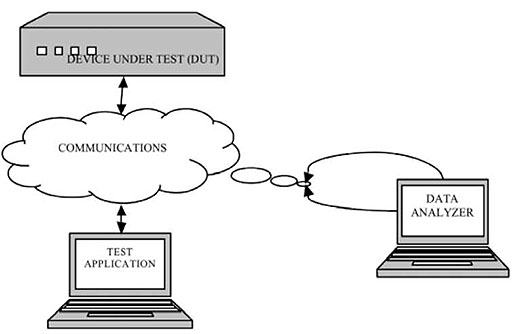

(Extended Text Description: Author's relevant description: the slide displays the main testing environment figure from NTCIP 8007 where a test application is connected to a device under test via a communications cloud that is also connected to a data analyzer.)

Source: NTCIP 8007

Slide 20:

ESS Test Plan

Master Test Plan

(Extended Text Description: The top of the slide shows the same series of progressive arrows as shown on slides 11 and 12 and show the following from left to right: "Test Plan", "Test Design", "Test Cases", "Test Procedures", "Execute", and "Test Reports". The first arrow is highlighted in blue.)

NTCIP Test Plan is a Level Test Plan

Often multiple Level Test Plans in a project

Master Test Plan defines how various Levels fit together

- Purpose of each level test plan

- Order in which they are performed

Slide 21:

ESS Test Documentation

Test Design / Test Case / Test Procedure

(Extended Text Description: The top of the slide shows the same series of progressive arrows as shown on slides 11, 12, and 20 and show the following from left to right: "Test Plan", "Test Design", "Test Cases", "Test Procedures", "Execute", and "Test Reports". The second through fourth arrows are highlighted in blue.)

(Extended Text Description: The main body of the slide shows three blue boxes. The box on the left is labeled "Test Design" and has bullet points of "Maps features to be tested to test cases" and "Makes any refinements to test approach". This box has an arrow pointing to a second box to the upper right, which is labeled "Test Cases" and has bullet points of "Inputs", "Outputs", and "Refinements". This box has an arrow pointing to a third box in the lower right that is labeled "Test Procedures" and has a single bullet point of "Step-by-step instructions".)

Standardized in Annex C of NTCIP 1204 v04

- Reduces effort to customize

Customized in your test plan

- Specify which requirements will be tested

Slide 22:

Slide 23:

Question

Which of the following most accurately describes a benefit of having standardized NTCIP test documentation included in NTCIP 1204 v04?

Answer Choices

- Eliminates the need for customized test documentation

- Reduces the effort to customize test documentation

- Ensures that all devices conform to the standard

- Eliminates the need for additional tools to perform testing

Slide 24:

Review of Answers

a) Eliminates the need for customized test documentation

Incorrect. Test plans are still needed to customize testing to each specific project.

b) Reduces the effort to customize test documentation

Correct! Most of the documentation has been standardized.

c) Ensures that all devices conform to the standard

Incorrect. Each device still needs to be tested to verify conformance.

d) Eliminates the need for additional tools to perform testing

Incorrect. Testing will still rely on tools to communicate with the device under test.

Slide 25:

Learning Objectives

- Describe the role of test plans and the testing to be undertaken

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

Slide 26:

Learning Objective 2

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

Slide 27:

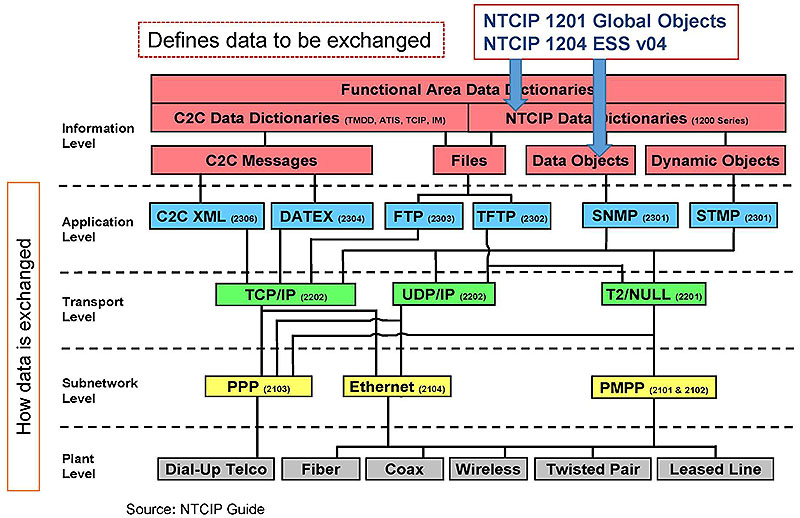

Relationship Among NTCIP Standards

(Extended Text Description: Relationship Among NTCIP Standards: This slide depicts how the various NTCIP standards relate to one another using a figure from the NTCIP Guide. At the top of the figure covering the whole width of the diagram is a box labeled Functional Area Data Dictionaries. Immediately underneath this box are two boxes, each covering 50% of the width. The one on the left is labeled "C2C Data Dictionaries (TMDD, ATIS, TCIP, IM)" and the one on the right is labeled "NTCIP Data Dictionaries (1200 series)". The left-hand box has two lines coming down from it, each into a box. One is labeled "C2C Messages" and the other is labeled "Files". The right-hand box has three lines coming down; one goes to the same "Files" box used by the C2C Messages while the other two go to boxes labeled "Data Objects" and "Dynamic Objects". Overlaid on this diagram is a box with the text "NTCIP 1201 Global Objects, NTCIP 1204 ESS v04" with arrows indicating that these documents are NTCIP Data Dictionaries and define Data Objects. This area of the diagram is labeled "Information Level" and is denoted as "Defines data to be exchanged". The lower portion of the diagram is labeled "How data is exchanged" and is initially blank but the options are unveiled when the presenter clicks the mouse. When this occurs, the lowest-tier Information Level boxes are connected to a communications stack. The next Level down is called the "Application Level". The "C2C Messages Box" is connected to two lower boxes labeled "C2C XML" and "DATEX". The "Files" box is connected to "FTP" and "TFTP". The "Data Objects" box is connected to "SNMP" and the "Dynamic Objects" box is connected to "STMP". The next layer down is the "Transport Level". The "C2C XML", "DATEX", "FTP", "SNMP", and "STMP" boxes are all connected to "TCP/IP"; in addition, the "TFTP", "SNMP", and "STMP" boxes are connected to boxes labeled "UDP/IP" and "T2/Null". The next layer down is the "Subnetwork Level". All three transport options are connected to the "PPP" box. "Ethernet" is connected to both "IP" options. And "PMPP" is connected to the "T2/Null" box. The next layer down is the "Plant Level". This shows "PPP" working over "Dial-up Telco" and shows "Ethernet" and "PMPP" working over "Fiber", "Coax", "Wireless", "Twisted Pair", and "Leased Line".)

Source: NTCIP Guide

Slide 28:

Structure of NTCIP 1204 v04

NTCIP 1204 v04 Outline (Body)

- General

- Concept of Operations

- Functional Requirements

- Dialogs

- Management Information Base

Slide 29:

Structure of NTCIP 1204 v04

NTCIP 1204 v04 Outline (Annexes)

- Requirements Traceability Matrix

- Object Tree

- Test Procedures

- Documentation of Revisions

- User Requests

- Generic Clauses

- SNMP Interface

- Controller Configuration Objects

Slide 30:

Elements Related to Testing

NTCIP 1204 v04 Outline

(Extended Text Description: The left side of this slide maps the sections of the standard shown on the right into the categories relevant to testing as follows:

1. The user needs and PRL are defined in Section 2: Concept of Operations

2. The requirements are defined in Section 3: Functional Requirements

3. The test design, test cases, and procedures are defined in Annex C: Test Procedures

4. The design is defined in Sections 4 and 5, Dialogs and Management Information Base

5. The RTM is defined in Annex A: Requirements Traceability Matrix

The bulleted items are listed below:

- General

- Concept of Operations

- Functional Requirements

- Dialogs

- Management Information Base

- Requirements Traceability Matrix

- Object Tree

- Test Procedures

- Documentation of Revisions

- User Requests

- Generic Clauses

- SNMP Interface

- Controller Configuration Objects

)

Slide 31:

Elements Related to Testing

Test Plan

Specific for each project

Outline defined by IEEE 829-2008

- Example in Student Supplement

Features to be tested based on PRL

- Section 2 of NTCIP 1204 v04

Slide 32:

Elements Related to Testing

PRL (Section 2)

(Extended Text Description: The upper portion of the slide depicts a portion of the PRL table for the "Monitor Atmospheric Pressure" user need. As the presenter talks, portions of the table are highlighted depicting the portion being talked about, including: User Need ID and User Need (title), FR ID and Functional Requirement (title), Conformance, and Support. When the support column is highlighted, the selections made by a sample project are also selected in the Support column by selecting "Yes", "No", or "N/A" on each line. The full text of the table is below:

Protocol Requirements List (PRL)

| User Need ID | User Need | FR ID | Functional Requirement | Conformance | Support |
|---|---|---|---|---|---|
| 2.5.2.1.1 | Monitor Atmospheric Pressure | | | O.5 (1..*) | Yes / No / NA |
| | | 3.5.2.1.10.1 (PressLoc) | Retrieve Atmospheric Pressure Metadata - Location | O | Yes / No / NA |
| | | 3.5.2.1.10.2 | Retrieve Atmospheric Pressure Metadata - Sensor Information | O | Yes / No / NA |
| | | 3.5.2.1.10.3 | Configure Atmospheric Pressure Metadata - Location | PressLoc:O | Yes / NA |
| | | 3.5.2.3.2.10 | Retrieve Atmospheric Pressure | M | Yes / NA |
| | | 3.6.1 | Required Number of Atmospheric Pressure Sensors | M | Yes / NA |

)

- Agency completes the PRL for each project

- Identifies the specific requirements that must be supported

- All selected requirements should be tested at some point

Slide 33:

Elements Related to Testing

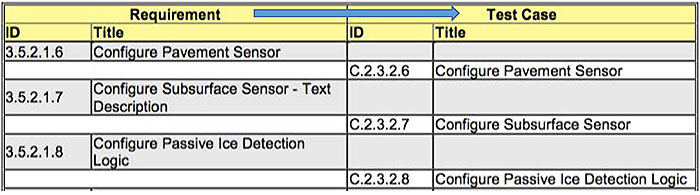

Test Design Specification (Annex C)

(Extended Text Description: The upper portion of the slide displays a portion of the Test Case Traceability Matrix showing how requirements are traced to test cases. The full text of the table is listed below:

| Requirement ID | Requirement Title | Test Case ID | Test Case Title |
|---|---|---|---|
| 3.5.2.1.8 | Configure Pavement Sensor | | |
| | | C.2.3.2.6 | Configure Pavement Sensor |
| 3.5.2.17 | Configure Subsurface Sensor - Text Description | | |
| | | C.2.3.2.7 | Configure Subsurface Sensor |
| 3.5.2.1.8 | Configure Passive Ice Detection Logic | | |
| | | C.2.3.2.8 | Configure Passive Ice Detection Logic |

)

- Format conforms to NTCIP 8007 (See Module T202)

- Standard traces requirements to test cases

- If requirement is selected in PRL, each traced test case should be performed

- Project test plan should reference the table and note any exceptions taken

Slide 34:

Elements Related to Testing

Test Case Specification (Annex C)

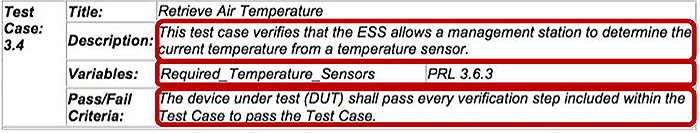

(Extended Text Description: The table content of Description, Variables, and Pass/Fail Criteria are highlighted successively. The full text of the table is below:

| Test Case: 3.4 | Title: | Retrieve Air Temperature | |
|---|---|---|---|
| Description: | This test case verifies that the ESS allows a management station to determine the current temperature from a temperature sensor. | | |
| Variables: | Required_Temperature_Sensors | PRL 3.6.3 | |
| Pass/Fail Criteria: | The device under test (DUT) shall pass every verification step included within the Test Case to pass the Test Case. | | |

)

Standard defines each test case with inputs and outputs

Project NTCIP Test Plan should:

- Reference these definitions

- Identify the input values that will be used for the tests

- E.g., How many temperature sensors are required?

Slide 35:

Elements Related to Testing

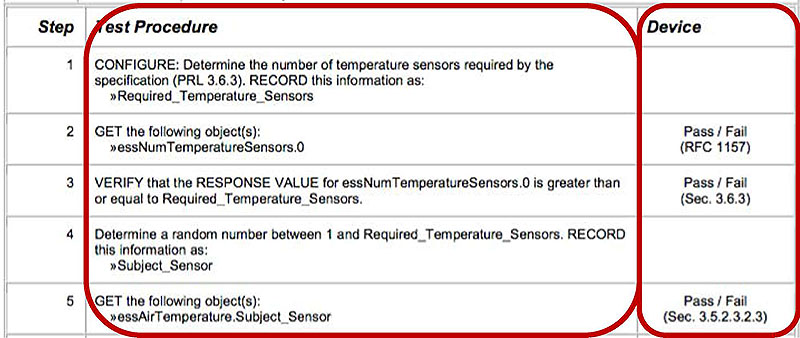

Test Procedures (Annex C)

(Extended Text Description: The slide contains a table (shown below) with the columns Test Procedure and Device highlighted successively. The full text of the table is below:

| Step | Test Procedure | Device |
|---|---|---|
| 1 | CONFIGURE: Determine the number of temperature sensors required by the specification (PRL 3.6.3). RECORD this information as: »Required_Temperature_Sensors | |
| 2 | GET the following object(s): »essNumTemperatureSensors.0 | Pass / Fail (RFC 1157) |
| 3 | VERIFY that the RESPONSE VALUE for essNumTemperatureSensors.0 is greater than or equal to Required_Temperature_Sensors. | Pass / Fail (Sec. 3.6.3) |
| 4 | Determine a random number between 1 and Required_Temperature_Sensors. RECORD this information as: »Subject_Sensor | |
| 5 | GET the following object(s): »essAirTemperature.Subject_Sensor | Pass / Fail (Sec 3.6.2.3.2.3) |

)

- Standard defines test procedures for each test case and indicates the requirements tested at specific points

- Project NTCIP Test Plan should reference the procedures
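The five-step excerpt above can be walked through in code. The following is a minimal sketch only: it uses a plain dictionary as a hypothetical stand-in for the device under test rather than a real SNMP library, and the sensor count and temperature values in it are made up for illustration. The object names are taken from the excerpt; `snmp_get` and `retrieve_air_temperature` are illustrative names, not part of the standard.

```python
import random

# Hypothetical stand-in for the device under test: object instance -> value.
# A real test would issue SNMP GET requests; these values are invented.
SIMULATED_DEVICE = {
    "essNumTemperatureSensors.0": 2,
    "essAirTemperature.1": 215,
    "essAirTemperature.2": 208,
}


def snmp_get(device, name):
    """Simulated GET: return (ok, value); ok is False if the object is absent."""
    if name in device:
        return True, device[name]
    return False, None


def retrieve_air_temperature(device, required_temperature_sensors, rng=random):
    """Walk steps 1-5 of the excerpt; return (step, passed) for the scored steps."""
    results = []
    # Step 1 (CONFIGURE): the required count comes from the project PRL (3.6.3)
    # and is passed in as required_temperature_sensors.
    # Step 2: GET essNumTemperatureSensors.0.
    ok, num_sensors = snmp_get(device, "essNumTemperatureSensors.0")
    results.append((2, ok))
    # Step 3: VERIFY the reported count is at least the required count.
    results.append((3, ok and num_sensors >= required_temperature_sensors))
    # Step 4: pick a random sensor index between 1 and the required count.
    subject_sensor = rng.randint(1, required_temperature_sensors)
    # Step 5: GET essAirTemperature.Subject_Sensor.
    ok, _ = snmp_get(device, f"essAirTemperature.{subject_sensor}")
    results.append((5, ok))
    return results


if __name__ == "__main__":
    for step, passed in retrieve_air_temperature(SIMULATED_DEVICE, 2):
        print(f"Step {step}: {'Pass' if passed else 'Fail'}")
```

Steps 1 and 4 have no Pass / Fail entry in the excerpt, so the sketch records results only for steps 2, 3, and 5.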

Slide 36:

Elements Related to Testing

(Extended Text Description: The top of the slide shows the same series of progressive arrows as shown on slides 11, 12, 20, and 21 and show the following from left to right: "Test Plan", "Test Design", "Test Cases", "Test Procedures", "Execute", and "Test Reports". The first arrow is green indicating it was discussed in Learning Objective 1. The second through fourth arrows are highlighted in blue to indicate that they were discussed in Learning Objective 2. The fifth and sixth arrows are shown in grey to indicate that they are discussed as a part of Learning Objective 4.)

Test preparation documentation is defined by properly linking

- Project-specific test plan to...

- NTCIP 1204 v04

Slide 37:

Slide 38:

Question

Which statement most closely describes the documentation that a project should prepare before conducting NTCIP 1204 v04 testing?

Answer Choices

- Just reference Annex C of NTCIP 1204 v04

- Develop a test plan with appropriate additions to link to NTCIP 1204 v04

- Develop a test plan and set of test procedures with appropriate additions to link to NTCIP 1204 v04

- Develop all documents defined by IEEE 829-2008

Slide 39:

Review of Answers

a) Just reference Annex C of NTCIP 1204 v04

Incorrect. Annex C does not define project-specific details such as when, what, who, where, how, and why.

b) Develop a test plan with links to NTCIP 1204 v04

Correct! Most of the documentation is done; you just customize to your project with a test plan with some links.

c) Develop a test plan and set of test procedures with links

Incorrect. The test design specification is already defined in the Requirements to Test Case Traceability Matrix.

d) Develop all documents defined by IEEE 829-2008

Incorrect. Most of this documentation has been standardized.

Slide 40:

Learning Objectives

- Describe the role of test plans and the testing to be undertaken

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

- Describe the application of a good test plan to an ESS system being procured

Slide 41:

Learning Objective 3

- Describe the application of a good test plan to an ESS system being procured

Slide 42:

Example ESS Site

Typical ESS

- NTCIP 1204 v04 has mandatory and optional user needs

- A typical ESS might include:

- Wind sensor

- Temperature sensor

- Humidity sensor

- Air pressure sensor

- Precipitation sensor

- Multiple pavement sensors

- Multiple subsurface sensors

- Camera

Slide 43:

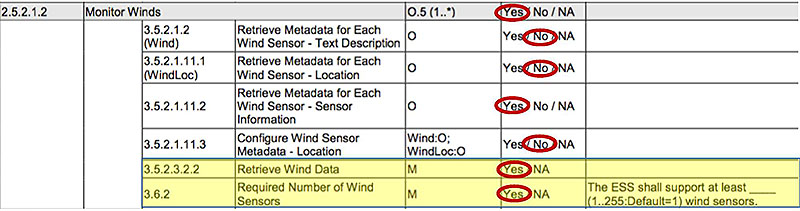

Other Modules That Assist in Defining Requirements

Sample PRL Selections for Site

(Extended Text Description: Sample PRL Selections for Site: This slide includes a section of the PRL from the standard dealing with the "Monitor Winds" use case with the following selections made:

- Monitor Winds user need: Yes

- Retrieve Metadata for each Wind Sensor – Text Description: No

- Retrieve Metadata for each Wind Sensor – Location: No

- Retrieve Metadata for each Wind Sensor – Sensor Information: Yes

- Configure Wind Sensor Metadata – Location: No

- Retrieve Wind Data: Yes

- Required Number of Wind Sensors: Yes (no number given)

The full text of the table is below:

| User Need ID | User Need | FR ID | Functional Requirement | Conformance | Support | Additional Specifications |
|---|---|---|---|---|---|---|
| 2.5.2.1.2 | Monitor Winds | | | O.5 (1..*) | Yes / No / NA | |
| | | 3.5.2.1.2 (Wind) | Retrieve Metadata for Each Wind Sensor - Text Description | O | Yes / No / NA | |
| | | 3.5.2.1.11.1 (WindLoc) | Retrieve Metadata for Each Wind Sensor - Location | O | Yes / No / NA | |
| | | 3.5.2.1.11.2 | Retrieve Metadata for Each Wind Sensor - Sensor Information | O | Yes / No / NA | |
| | | 3.5.2.1.11.3 | Configure Wind Sensor Metadata - Location | Wind:O; WindLoc:O | Yes / No / NA | |
| | | 3.5.2.3.2.2 | Retrieve Wind Data | M | Yes / NA | |
| | | 3.6.2 | Required Number of Wind Sensors | M | Yes / NA | The ESS shall support at least _____ (1..255: Default=1) wind sensors. |

)

- Each user need has optional and mandatory requirements

- PRL allows user to select standardized requirements from list using standardized rules

- Modules A313a and A313b provide more information on PRL

- Student Supplement contains a complete PRL
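As a rough illustration of how a completed PRL feeds a test plan, the sketch below encodes the sample "Monitor Winds" selections described on this slide and derives the list of requirements to be tested. It assumes only the simplest rule: a row with conformance M must be marked Yes once its user need is selected. Predicate and option-group rules are deliberately not modeled, and `PrlRow`, `mandatory_gaps`, and `requirements_to_test` are illustrative names rather than anything defined by the standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrlRow:
    """One functional-requirement row of a PRL."""
    fr_id: str
    title: str
    conformance: str  # "M" = mandatory, "O" = optional, or a predicate such as "Wind:O"
    support: str      # the project's selection: "Yes", "No", or "NA"


# Sample selections for the Monitor Winds user need (2.5.2.1.2), as described on the slide.
MONITOR_WINDS = [
    PrlRow("3.5.2.1.2", "Retrieve Metadata for Each Wind Sensor - Text Description", "O", "No"),
    PrlRow("3.5.2.1.11.1", "Retrieve Metadata for Each Wind Sensor - Location", "O", "No"),
    PrlRow("3.5.2.1.11.2", "Retrieve Metadata for Each Wind Sensor - Sensor Information", "O", "Yes"),
    PrlRow("3.5.2.1.11.3", "Configure Wind Sensor Metadata - Location", "Wind:O; WindLoc:O", "No"),
    PrlRow("3.5.2.3.2.2", "Retrieve Wind Data", "M", "Yes"),
    PrlRow("3.6.2", "Required Number of Wind Sensors", "M", "Yes"),
]


def mandatory_gaps(rows):
    """Return mandatory rows that are not marked 'Yes' (user need assumed selected)."""
    return [row for row in rows if row.conformance == "M" and row.support != "Yes"]


def requirements_to_test(rows):
    """Return the IDs of every requirement the project selected."""
    return [row.fr_id for row in rows if row.support == "Yes"]


if __name__ == "__main__":
    print("Mandatory rows not selected:", mandatory_gaps(MONITOR_WINDS))
    print("Requirements to test:", requirements_to_test(MONITOR_WINDS))
```

The second list is what the "Features to be tested" portion of a project test plan would draw on.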

Slide 44:

Understanding Test Case Traceability

Test Design Specification

(Extended Text Description: This slide shows a sample of the Requirement to Test Case Traceability Table that maps the "Retrieve Wind Data" Requirement to the "Retrieve Wind Data" test case with an arrow depicting the traceability. The full table is shown below:

| Requirement ID | Requirement Title | Test Case ID | Test Case Title |
|---|---|---|---|
| 3.5.2.3.2.2 | Retrieve Wind Data | | |
| | | C.2.3.3.3 | Retrieve Wind Data |

)

Requirements to Test Case Traceability Table

- Contained in NTCIP 1204 v04 Annex C Clause C.2.2

- Identifies test cases for each requirement
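The lookup from selected requirements to test cases can be sketched as a simple table walk. The example below is an assumption-laden illustration: its traceability dictionary contains only the single row shown on this slide (the full table is in Annex C, Clause C.2.2), and `plan_test_cases` and its `exceptions` parameter are hypothetical names for how a project might record exceptions taken in its test plan.

```python
# Requirement ID -> traced test case IDs, limited to the one row in the excerpt.
TRACEABILITY = {
    "3.5.2.3.2.2": ["C.2.3.3.3"],  # Retrieve Wind Data
}


def plan_test_cases(selected_requirements, traceability, exceptions=()):
    """Return (test cases to run, selected requirements with no trace found).

    Requirements listed in `exceptions` are skipped, mirroring exceptions
    that the project test plan explicitly notes.
    """
    to_run = []
    untraced = []
    for requirement in selected_requirements:
        if requirement in exceptions:
            continue
        cases = traceability.get(requirement)
        if cases is None:
            untraced.append(requirement)
            continue
        for case in cases:
            if case not in to_run:
                to_run.append(case)
    return to_run, untraced


if __name__ == "__main__":
    selected = ["3.5.2.3.2.2", "3.6.2"]
    cases, untraced = plan_test_cases(selected, TRACEABILITY)
    print("Run:", cases)
    print("Look up in Annex C:", untraced)
```

Here 3.6.2 lands in the second list only because the excerpt is partial; with the complete Annex C table loaded, that list would flag genuine gaps.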

Slide 45:

Understanding Test Case Traceability

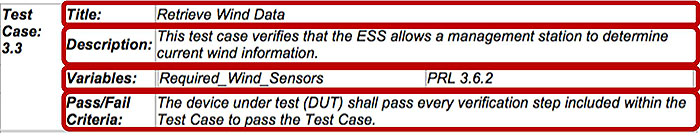

Test Case Specification

(Extended Text Description: This slide contains a table with each row highlighted successively. The full table is shown below:

| Test Case: 3.3 | Title: | Retrieve Wind Data | |
|---|---|---|---|
| Description: | This test case verifies that the ESS allows a management station to determine current wind information. | | |
| Variables: | Required_Wind_Sensors | PRL 3.6.2 | |
| Pass/Fail Criteria: | The device under test (DUT) shall pass every verification step included within the Test Case to pass the Test Case. | | |

)

Clause C.2.3.3.3 of NTCIP 1204 v04

- Defines the test case with inputs (variables) and outputs (criteria)

- In order to perform the test, we need to know the number of sensors

- Sample PRL for Requirement 3.6.2 defines this to be 1

- Test procedures are shown immediately under this description
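Two ideas on this slide lend themselves to a short sketch: the test case's variable must be given a value by the project, and the test case passes only if every verification step passes. The data layout below is an assumption made for illustration; the value 1 for Required_Wind_Sensors comes from the sample PRL, while the step verdicts in the usage example are invented.

```python
# Test case definition, paraphrased from the specification on this slide.
TEST_CASE = {
    "id": "C.2.3.3.3",
    "title": "Retrieve Wind Data",
    "variables": {"Required_Wind_Sensors": "PRL 3.6.2"},  # variable -> where its value comes from
}

# Input values supplied by the project test plan (sample PRL selects 1 sensor).
PLAN_INPUTS = {"Required_Wind_Sensors": 1}


def missing_inputs(test_case, plan_inputs):
    """Return the test case variables that the test plan has not given a value."""
    return sorted(set(test_case["variables"]) - set(plan_inputs))


def case_passed(step_verdicts):
    """Apply the pass/fail criterion: every verification step must pass.

    `step_verdicts` maps step number -> True, False, or None. None marks a
    step with no verdict (for example a CONFIGURE or RECORD step).
    """
    verdicts = [v for v in step_verdicts.values() if v is not None]
    return bool(verdicts) and all(verdicts)


if __name__ == "__main__":
    print("Unbound variables:", missing_inputs(TEST_CASE, PLAN_INPUTS))
    # Invented verdicts for illustration only.
    print("Test case passed:", case_passed({1: None, 2: True, 3: True}))
```

Treating a test case with no scored steps as not passed is a design choice of this sketch, not something the slide states.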

Slide 46:

Create a Test Plan for ESS

Contents of an NTCIP Test Plan

Complete draft test plan in Student Supplement

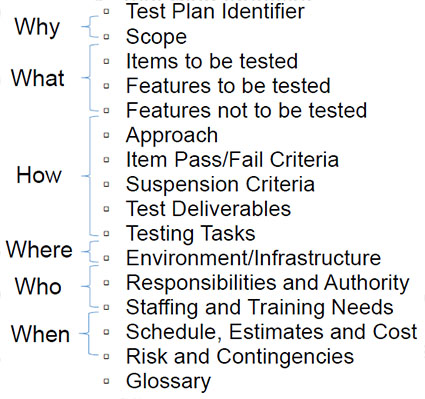

(Extended Text Description: The main body of the slide includes a listing of the sections of a test plan document and labels the "identifier" and "scope" as "Why?"; the "items to be tested", "features to be tested", and "features not to be tested" as "What"; the "approach", "item pass/fail criteria", "suspension criteria", "test deliverables", and "testing tasks" as "How"; the "environment/infrastructure" as "Where"; the "responsibilities and authority" and "staffing and training needs" as "Who"; and the "schedule, risks and costs" and "risk and contingencies" as "When". The full bulleted list is shown below:

Why

- Test Plan Identifier

- Scope

What

- Items to be tested

- Features to be tested

- Features not to be tested

How

- Approach

- Item Pass/Fail Criteria

- Suspension Criteria

- Test Deliverables

- Testing Tasks

Where

- Environment/Infrastructure

Who

- Responsibilities and Authority

- Staffing and Training Needs

When

- Schedule, Estimates and Cost

- Risk and Contingencies

- Glossary

)

(Extended Text Description: A picture of a pole with road signs indicating direction for various destinations with one of the following questions as the text for each destination: "What?", "Where?", "Who?", "When?", "Why?", and "How?".)
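The outline on this slide can double as a checklist for a draft test plan. The sketch below groups the section names from the slide under the question each one answers and reports which sections a draft is missing. The Glossary entry is left out because the slide does not tie it to a question, and `missing_sections` is an illustrative helper, not part of IEEE 829-2008.

```python
# Test plan sections from the slide, grouped by the question each answers.
TEST_PLAN_OUTLINE = {
    "Why": ["Test Plan Identifier", "Scope"],
    "What": ["Items to be tested", "Features to be tested", "Features not to be tested"],
    "How": [
        "Approach",
        "Item Pass/Fail Criteria",
        "Suspension Criteria",
        "Test Deliverables",
        "Testing Tasks",
    ],
    "Where": ["Environment/Infrastructure"],
    "Who": ["Responsibilities and Authority", "Staffing and Training Needs"],
    "When": ["Schedule, Estimates and Cost", "Risk and Contingencies"],
}


def missing_sections(draft_headings, outline=TEST_PLAN_OUTLINE):
    """Return {question: [missing section names]} for a draft's headings.

    Matching ignores case and surrounding whitespace.
    """
    present = {heading.strip().lower() for heading in draft_headings}
    gaps = {}
    for question, sections in outline.items():
        absent = [name for name in sections if name.lower() not in present]
        if absent:
            gaps[question] = absent
    return gaps


if __name__ == "__main__":
    draft = ["Test Plan Identifier", "Scope", "Items to be tested", "Approach"]
    for question, sections in missing_sections(draft).items():
        print(f"{question}: missing {', '.join(sections)}")
```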

Slide 47:

Create a Test Plan for ESS

Why

Objectives

- What is the primary purpose of the test

- What happens upon successful completion

Project Background

- Allows reader to understand the context of the test

Scope

- Explain that this will be an NTCIP test

References

Slide 48:

Create a Test Plan for ESS

What

Items to be tested

- Identify the device that will be tested

Features to be tested

- Identify the requirements that will be tested

Features not to be tested

- Explain the limitations of the testing

Slide 49:

Create a Test Plan for ESS

How

Approach

- Define inputs (variables)

- What happens if there is a failure (regression)

Item Pass/Fail Criteria (Outputs)

- Identify what constitutes a failure

Suspension Criteria

- Identify restrictions on stopping and starting tests

Test Deliverables

- What deliverables will be produced

Testing Tasks

- What tasks need to be done

Procedures defined separately

Slide 50:

Create a Test Plan for ESS

Where

Environment/Infrastructure

- How will equipment be connected

- Remote, local, combination

- Other needs

- Tables

- Chairs

- Protection from elements

- Power

Slide 51:

Create a Test Plan for ESS

Who

Identify who is responsible for what

Identify level of effort needed

Slide 52:

Create a Test Plan for ESS

When

Schedule

How is schedule impacted if things go wrong

Slide 53:

Slide 54:

Question

Which of the below is not included in a test plan?

Answer Choices

- Identification of who will perform the testing

- Identification of which features will be tested

- Identification of the reason for the test

- Identification of the steps used to test the device

Slide 55:

Review of Answers

a) Identification of who will perform the testing

Incorrect. The test plan should identify who is responsible for testing.

b) Identification of which features will be tested

Incorrect. The test plan should identify which features will be tested.

c) Identification of the reason for the test

Incorrect. The test plan should identify the reason the test is being planned.

d) Identification of the steps used to test the device

Correct! The test procedures are defined in a separate document.

Slide 56:

Learning Objectives

- Describe the role of test plans and the testing to be undertaken

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

- Describe the application of a good test plan to an ESS system being procured

- Describe the testing of an ESS using standard procedures

Slide 57:

Learning Objective 4

- Describe the testing of an ESS using standard procedures

Slide 58:

Explain a Sample Test Procedure

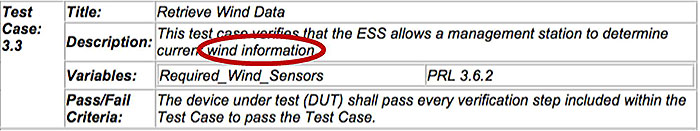

Test Case Specification

(Extended Text Description: This slide shows the test case specification for "Retrieve Wind Information" and overlays an oval on top of the text "wind information" in the description. The full table is shown below:

| Test Case: 3.3 | Title: | Retrieve Wind Data | |

| Description: | This test case verifies that the ESS allows a management station to determine current wind information. | | |

| Variables: | Required_Wind_Sensors | PRL 3.6.2 | |

| Pass/Fail Criteria: | The device under test (DUT) shall pass every verification step included within the Test Case to pass the Test Case. | | |

)

- What is "wind information"?

Slide 59:

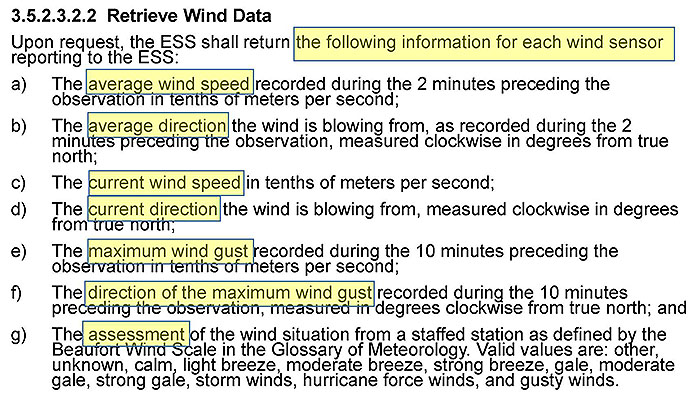

Explain a Sample Test Procedure

Requirement

(Extended Text Description: This slide shows the text from the "Retrieve Wind Information" requirement and highlights text within the definition that explicitly defines what information is to be provided, which includes: average wind speed, average direction, current wind speed, current direction, maximum wind gust, direction of the maximum wind gust, and the assessment of the wind situation. The full text of the slide is shown below:

3.5.2.3.2.2 Retrieve Wind Data

Upon request, the ESS shall return the following information for each wind sensor reporting to the ESS:

- The average wind speed recorded during the 2 minutes preceding the observation in tenths of meters per second;

- The average direction the wind is blowing from, as recorded during the 2 minutes preceding the observation, measured clockwise in degrees from true north;

- The current wind speed in tenths of meters per second;

- The current direction the wind is blowing from, measured clockwise in degrees from true north;

- The maximum wind gust recorded during the 10 minutes preceding the observation in tenths of meters per second;

- The direction of the maximum wind gust recorded during the 10 minutes preceding the observation, measured in degrees clockwise from true north; and

- The assessment of the wind situation from a staffed station as defined by the Beaufort Wind Scale in the Glossary of Meteorology. Valid values are: other, unknown, calm, light breeze, moderate breeze, strong breeze, gale, moderate gale, strong gale, storm winds, hurricane force winds, and gusty winds.

)
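The units in the requirement above matter when reading test results: speeds are reported in tenths of meters per second and directions in degrees clockwise from true north. The following is a minimal sketch of how a test tool might hold one sensor's response and convert it for display. The class name `WindReading` and its field names are hypothetical and are not defined by the standard; only the units and the list of valid situation values come from the requirement text.

```python
from dataclasses import dataclass

# Valid wind-situation values, copied from the requirement text.
WIND_SITUATIONS = (
    "other", "unknown", "calm", "light breeze", "moderate breeze",
    "strong breeze", "gale", "moderate gale", "strong gale",
    "storm winds", "hurricane force winds", "gusty winds",
)


@dataclass
class WindReading:
    """Hypothetical container for one wind sensor's data (illustrative names)."""
    avg_speed_tenths: int     # 2-minute average speed, tenths of m/s
    avg_direction_deg: int    # 2-minute average direction, degrees from true north
    spot_speed_tenths: int    # current speed, tenths of m/s
    spot_direction_deg: int   # current direction, degrees from true north
    gust_speed_tenths: int    # 10-minute maximum gust, tenths of m/s
    gust_direction_deg: int   # direction of that gust, degrees from true north
    situation: str            # one of WIND_SITUATIONS

    def __post_init__(self) -> None:
        # Reject any situation value that the requirement does not list.
        if self.situation not in WIND_SITUATIONS:
            raise ValueError(f"Unexpected wind situation: {self.situation!r}")

    @property
    def avg_speed_mps(self) -> float:
        """Average speed converted from tenths of m/s to m/s."""
        return self.avg_speed_tenths / 10

    @property
    def gust_speed_mps(self) -> float:
        """Maximum gust converted from tenths of m/s to m/s."""
        return self.gust_speed_tenths / 10


# Example values are made up for illustration.
reading = WindReading(52, 270, 61, 265, 104, 280, "moderate breeze")
```

Keeping the raw integer values alongside the converted ones makes it easier to compare a displayed speed against what the device actually returned.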

Slide 60:

Explain a Sample Test Procedure

Overview

Procedures are defined in Annex C of standard

- Saves agencies from having to develop their own

- Allows for off-the-shelf automation of testing

Sample is "Test Case C.2.3.3.3 Retrieve Wind Data" used in the previous example

Slide 61:

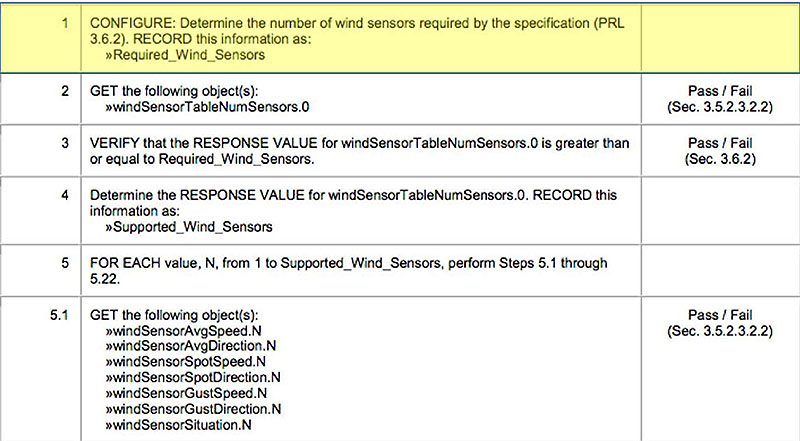

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the first step (Step 1). The full text is shown below:

| 1 | CONFIGURE: Determine the number of wind sensors required by the specification (PRL 3.6.2). RECORD this information as: »Required_Wind_Sensors | |

| 2 | GET the following object(s): »windSensorTableNumSensors.0 | Pass / Fail (Sec. 3.5.2.3.2.2) |

| 3 | VERIFY that the RESPONSE VALUE for windSensorTableNumSensors.0 is greater than or equal to Required_Wind_Sensors. | Pass / Fail (Sec. 3.5.2) |

| 4 | Determine the RESPONSE VALUE for windSensorTableNumSensors.0. RECORD this information as: »Supported_Wind_Sensors | |

| 5 | FOR EACH value, N, from 1 to Supported_Wind_Sensors, perform Steps 5.1 through 5.22. | |

| 5.1 | GET the following object(s): »windSensorAvgSpeed.N »windSensorAvgDirection.N »windSensorSpotSpeed.N »windSensorSpotDirection.N »windSensorGustSpeed.N »windSensorGustDirection.N »windSensorSituation.N | Pass / Fail (Sec. 3.5.2.3.2.2) |

)
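Steps 1 through 5.1 of this procedure can be sketched in code to show how the recorded variables carry from one step to the next. This is an illustration under stated assumptions, not an implementation from the standard: the function `retrieve_wind_data`, the `get` callback, and the `fake_device` dictionary are hypothetical stand-ins for a real SNMP client and device. Only the object names and the step logic come from the procedure.

```python
# Object names requested in Step 5.1 of the procedure.
WIND_OBJECTS = (
    "windSensorAvgSpeed",
    "windSensorAvgDirection",
    "windSensorSpotSpeed",
    "windSensorSpotDirection",
    "windSensorGustSpeed",
    "windSensorGustDirection",
    "windSensorSituation",
)


def retrieve_wind_data(get, required_wind_sensors):
    """Run Steps 2 through 5.1; `get` is a hypothetical callback that
    returns the RESPONSE VALUE for an object name such as 'x.0'."""
    # Step 1 (CONFIGURE) is represented by the required_wind_sensors argument.
    # Step 2: GET the number of wind sensors the device reports.
    num_sensors = get("windSensorTableNumSensors.0")
    # Step 3: VERIFY the device supports at least the required number.
    if num_sensors < required_wind_sensors:
        raise AssertionError(
            f"Step 3 failed: {num_sensors} < {required_wind_sensors}"
        )
    # Step 4: RECORD the response value as Supported_Wind_Sensors.
    supported_wind_sensors = num_sensors
    # Step 5: FOR EACH N from 1 to Supported_Wind_Sensors...
    responses = {}
    for n in range(1, supported_wind_sensors + 1):
        # Step 5.1: GET all seven wind objects for sensor N.
        responses[n] = {name: get(f"{name}.{n}") for name in WIND_OBJECTS}
    return responses


# Hypothetical stand-in for a device that reports two wind sensors.
fake_device = {"windSensorTableNumSensors.0": 2}
for n in (1, 2):
    for name in WIND_OBJECTS:
        fake_device[f"{name}.{n}"] = 0

responses = retrieve_wind_data(fake_device.__getitem__, required_wind_sensors=1)
```

In a real test, `get` would wrap an SNMP GET request to the device under test, and a failed GET would itself count as a failure of Step 2 or Step 5.1.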

Slide 62:

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: Retrieve Wind Data: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the second step (Step 2). Please see slide 61 for the complete table.)

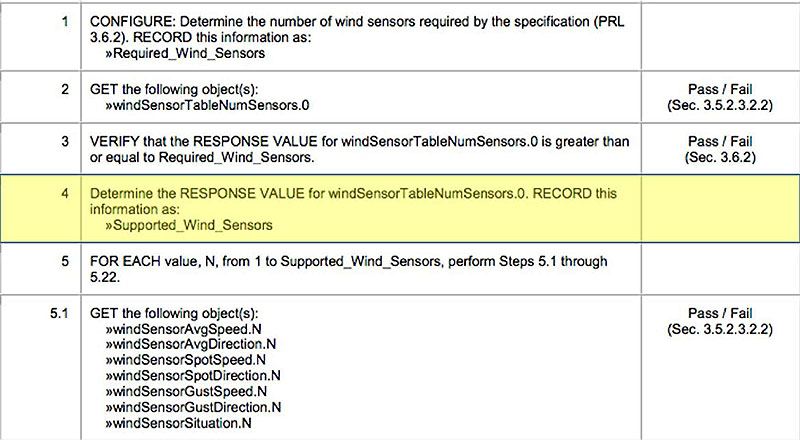

Slide 63:

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: Retrieve Wind Data: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the third step (Step 3). Please see slide 61 for the complete table.)

Slide 64:

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: Retrieve Wind Data: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the fourth step (Step 4). Please see slide 61 for the complete table.)

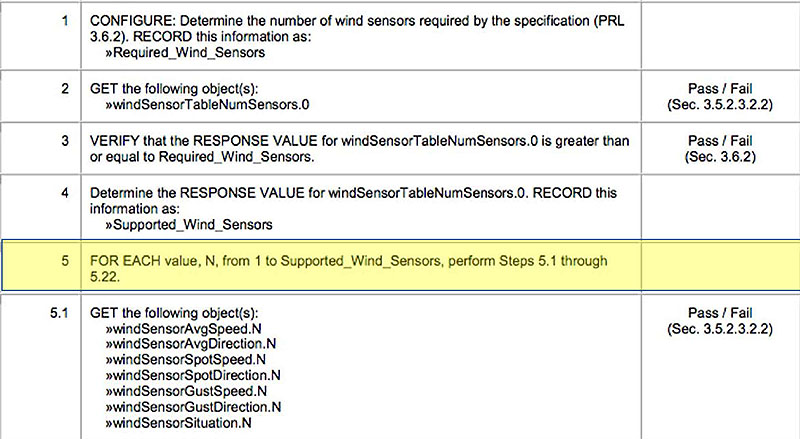

Slide 65:

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: Retrieve Wind Data: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the fifth step (Step 5). Please see slide 61 for the complete table.)

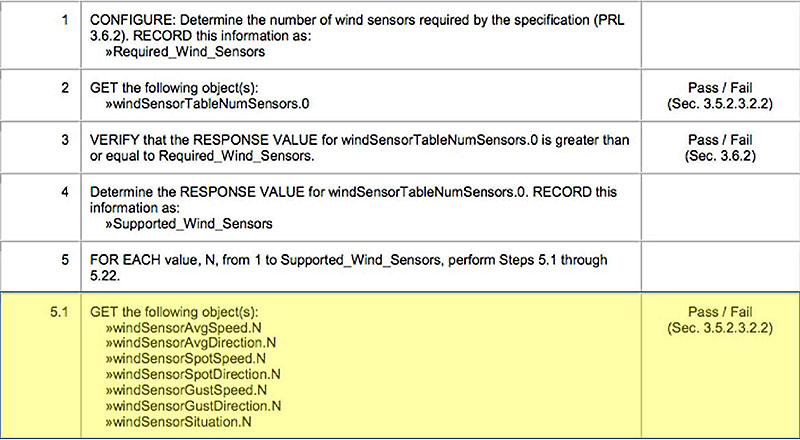

Slide 66:

Explain a Sample Test Procedure

Retrieve Wind Data

(Extended Text Description: Retrieve Wind Data: The slide displays the first 6 steps (labeled 1 through 5.1) of the Retrieve Wind Information test procedure and highlights the sixth step (Step 5.1). Please see slide 61 for the complete table.)

Slide 67:

Explain a Sample Test Procedure

Retrieve Wind Data

| 5.2 | VERIFY that the RESPONSE VALUE for windSensorAvgSpeed.N is greater than or equal to 0. | Pass / Fail (Sec. 5.6.10.4) |

| 5.3 | VERIFY that the RESPONSE VALUE for windSensorAvgSpeed.N is less than or equal to 65535. | Pass / Fail (Sec. 5.6.10.4) |

| 5.4 | VERIFY that the RESPONSE VALUE for windSensorAvgSpeed.N is APPROPRIATE. | Pass / Fail (Sec. 5.6.10.4) |

Some VERIFY steps can be easily automated

Others require human interaction
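The difference between the two kinds of VERIFY step can be illustrated with a short sketch. Steps 5.2 and 5.3 are simple range checks that a tool can evaluate on its own, while Step 5.4 asks whether the value is APPROPRIATE, which needs a person who knows the actual conditions at the site. The function names and the `operator_confirms` callback below are hypothetical; only the range limits come from the procedure.

```python
def verify_avg_speed_range(value):
    """Steps 5.2 and 5.3: automated check that the value is in 0..65535."""
    return 0 <= value <= 65535


def verify_appropriate(value, operator_confirms):
    """Step 5.4: defer to a human. `operator_confirms` is a hypothetical
    callback that shows the value to the tester and returns True or False."""
    return bool(operator_confirms(value))


# Automated steps need no human input.
range_ok = verify_avg_speed_range(52)

# The manual step is simulated here with a callback that always accepts.
# In practice this would prompt the tester to compare against a reference.
appropriate_ok = verify_appropriate(52, lambda value: True)
```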

Slide 68:

Other Types of Test Steps

Other Step Types in NTCIP 1204 v04

DELAY <for a period of time>

PERFORM <another test procedure>

SET <one or more objects to defined values>

IF <condition> <true branch> ELSE <false branch>
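As a rough illustration of how an automated tool might handle these step types, the sketch below runs a list of steps against an in-memory dictionary. Everything in it is an assumption made for illustration: the tuple layout, the `run_steps` function, the object name `exampleMode.0`, and the procedure name "Reset Example" are hypothetical and do not come from the standard.

```python
import time


def run_steps(steps, device, procedures, sleep=time.sleep):
    """Execute DELAY, SET, PERFORM, and IF steps against a dict-based device."""
    for step in steps:
        kind = step[0]
        if kind == "DELAY":
            # DELAY <for a period of time>, given here in seconds.
            sleep(step[1])
        elif kind == "SET":
            # SET <one or more objects to defined values>.
            device.update(step[1])
        elif kind == "PERFORM":
            # PERFORM <another test procedure>, looked up by name.
            run_steps(procedures[step[1]], device, procedures, sleep)
        elif kind == "IF":
            # IF <condition> <true branch> ELSE <false branch>.
            _, condition, true_branch, false_branch = step
            branch = true_branch if condition(device) else false_branch
            run_steps(branch, device, procedures, sleep)
        else:
            raise ValueError(f"Unknown step type: {kind}")


# Hypothetical device state and reusable procedure.
device = {"exampleMode.0": 1}
procedures = {"Reset Example": [("SET", {"exampleMode.0": 0})]}

steps = [
    ("SET", {"exampleMode.0": 5}),
    ("DELAY", 0),
    ("IF", lambda d: d["exampleMode.0"] == 5,
     [("PERFORM", "Reset Example")],
     [("SET", {"exampleMode.0": 9})]),
]

# The sleep function is replaced so the example runs instantly.
run_steps(steps, device, procedures, sleep=lambda seconds: None)
```

Passing `sleep` as a parameter lets the same step list run at full speed in a dry run and with real delays against a device.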

Slide 69:

Analyze and Record Test Results

Reported Failures

Errors can be from a number of sources

- Errors in the implementation

- User Errors:

  - Incorrectly configured inputs at start of test

  - Incorrectly evaluating a test step

- Equipment malfunction

- Errors in the procedure

- Errors in the standard

Maturity of standards reduces the risks in the last two areas

Once an error is identified

- Investigate and, if valid, report the issue

Slide 70:

Benefits of Automated Testing

Automation Is Essential

Automation can dramatically accelerate the testing process

Reduces probability of errors in testing

- A new source of potential error

- But reduces potential for user error

- Correct once and reuse

Some steps still require manual verification

Slide 71:

Slide 72:

Question

Which of the below is not a type of step used in NTCIP 1204 v04 testing?

Answer Choices

- UPDATE

- SET

- VERIFY

- IF

Slide 73:

Review of Answers

a) UPDATE

Correct! There is no definition for "UPDATE" in NTCIP 1204 v04 testing.

b) SET

Incorrect. A SET request can be used to alter the value of a parameter in the ESS.

c) VERIFY

Incorrect. A VERIFY step can be used to ensure that the device is responding properly.

d) IF

Incorrect. An IF step can be used to branch the procedure logic based on the evaluation of a condition.

Slide 74:

Module Summary

- Describe the role of test plans and the testing to be undertaken

- Identify key elements of NTCIP 1204 v04 relevant to the test plan

- Describe the application of a good test plan to an ESS system being procured

- Describe the testing of an ESS using standard procedures

Slide 75:

We Have Now Completed the ESS Curriculum

Module 11: A313a:

Understanding User Needs for ESS Systems Based on NTCIP 1204 v04 Standard

Module 15: A313b:

Specifying Requirements for ESS Systems Based on NTCIP 1204 v04 Standard

Module 18: T313:

Applying Your Test Plan to ESS Based on NTCIP 1204 v04 ESS Standard

Slide 76:

Thank you for completing this module.

Feedback

Please use the Feedback link below to provide us with your thoughts and comments about the value of the training.

Thank you!