ITS ePrimer

Module 4: ITS Data in Decision Making

Authored by Vaishali Shah, Severna Park, MD, USA

2021


Purpose

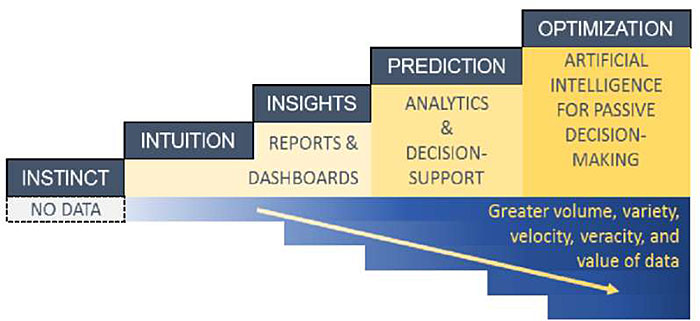

The purpose of this module is to introduce readers to the fundamentals of collecting, storing, analyzing, and sharing Intelligent Transportation Systems (ITS) data to manage performance and improve decision making. Transportation agencies are inundated with roadside sensor-based data, crowdsourced and internet of everything (IoE) data, connected vehicle data, and transportation-adjacent data. Modern methods for managing, integrating, and applying these data can help transportation agencies evolve from experience- and insight-based decision making to data-informed, predictive, optimized, and even passive decision making. This module sets the context for how real-time and archived data can improve transportation systems management and operations and broader transportation enterprise-level decision making.


Objectives

This module has the following objectives:

- Identify the sources and content of ITS and associated data

- Articulate how ITS and related data applications can inform and improve enterprise, program, and real-time processes and decisions

- Recognize that the techniques and tools transforming data are evolving, enabling individuals to access information as and when needed, rather than waiting for an annual or monthly report

- Introduce the principles of modern data management and governance and explain why both are essential to deriving the most value from data


1. Introduction

ITS brought forth real-time data to monitor and operate transportation systems through fixed field devices (e.g., traffic detectors, automatic vehicle identification, Bluetooth sensors, environmental sensors, cameras) as early as the 1960s, with the first traffic management center (TMC), loop detectors, and dynamic message signs (DMS). Nearly four decades later, aided by advances in communications and mapping, ITS sensor technologies had become the staple of most agencies’ traffic operations. In the 2010s, greater volumes, variety, and velocity of data emerged from mobile field devices, crowdsourced technologies, connected vehicles, and other technologies (e.g., traffic signal performance, automatic vehicle location, mobile friction sensors, mobile cameras, smartphone applications, vehicle probe aggregators, social media, smart cones). When effectively integrated, analyzed, and shared, these varied data help agencies improve traffic operations, systems maintenance, traffic safety, operator workload, and environmental footprint.

To be clear, a single data source can benefit multiple aspects of operations, and conversely, the integration of multiple data sources can transform a specific aspect of road or transit operations. In the transportation industry, we speak of data-driven, data-informed, data-centered, or performance-based decision making. For this module, we will use the term “data-informed decision making” as the encompassing suite of technologies, applications, and practices for the collection, integration, analysis, and presentation of data to help individuals and groups make decisions with greater confidence, accuracy, precision, simplicity, speed, timeliness, and impact.

The temporal component of data is defined as follows. Real-time data are typically updated at intervals of under five minutes, ideally with only a few minutes of lag from data collection to visualization. Near real-time data may be updated every five to 30 minutes, with lags of up to one hour. Real-time decisions are based on real-time data and typically entail a response within a minute or a few minutes. Similarly, near real-time decisions are based on near real-time data and typically allow more time between observing the data and responding to it. The following three examples of data and how they inform decision making are offered as a preview:

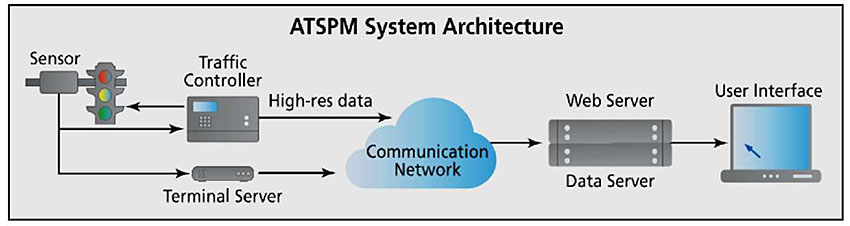

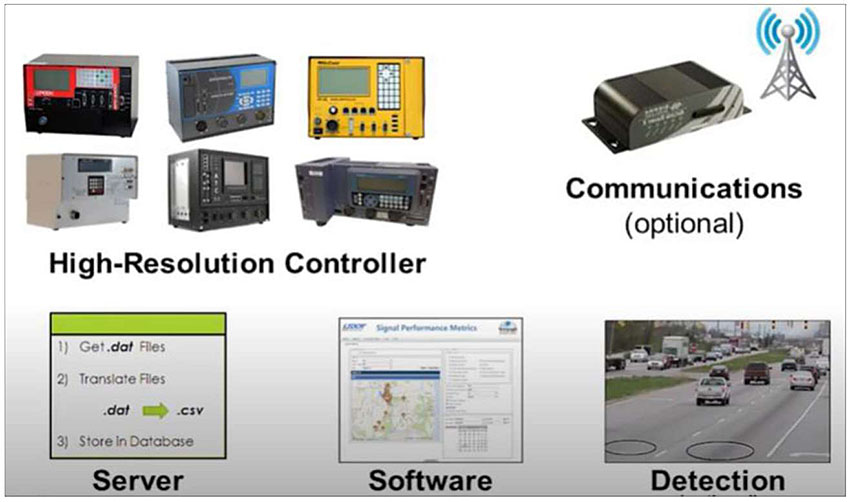

- Automated Traffic Signal Performance Measures (ATSPM) - High-resolution controller event data are now accessible to transportation systems operators for monitoring and improving signal system health, quality of control (e.g., capacity allocation or preemption), and quality of progression (e.g., corridor optimization). The Utah Department of Transportation (UDOT) collects ATSPM data at 96 percent of its 1,233 traffic signals using its in-house Department of Technology Services (IT). UDOT estimates an ATSPM implementation cost of approximately $230K; by comparison, the annual traffic signal program operation and maintenance budget is $4.9M. ATSPM systems transfer data to a central server every 15 minutes using the File Transfer Protocol (FTP), as illustrated in Figure 1.

UDOT developed data visualization tools that enhance decision making speed, timeliness, accuracy, and confidence. Using ATSPM, UDOT staff prioritize operation and maintenance efforts to improve traffic flow, reduce congestion and emissions, and improve safety. The UDOT traffic signal operations engineer, Mark Taylor, noted that “We no longer go out to retime signals every three to five years. Now, we do it only when it’s needed, after we’ve looked at performance measurements.” UDOT does not use ATSPM data for real-time decisions; instead, it uses the data to prioritize work and to maintain a traffic signal system that reflects changing traffic demand.

Figure 1. Automated Traffic Signal Performance System Architecture. Source: FHWA, 2018.


The agency also makes available a public-facing interface for signal performance analyses (http://udottraffic.utah.gov/atspm/) using near real-time and historical data. Additional ATSPM case studies, benefit cost evaluations, and technical information are available through the Federal Highway Administration (FHWA) Arterial Management Program’s ATSPM site (https://ops.fhwa.dot.gov/arterial_mgmt/performance_measures.htm).
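The scale of the UDOT investment can be checked with simple arithmetic on the figures quoted above. This sketch treats the $230K as a one-time implementation cost, which the text implies but does not state outright.

```python
# Figures quoted in the UDOT ATSPM example above.
signals_total = 1233             # UDOT traffic signals
atspm_coverage = 0.96            # share of signals collecting ATSPM data
implementation_cost = 230_000    # approximate ATSPM implementation cost, USD (assumed one-time)
annual_om_budget = 4_900_000     # annual signal program O&M budget, USD

signals_with_atspm = round(signals_total * atspm_coverage)
cost_share = implementation_cost / annual_om_budget

print(f"Signals with ATSPM: about {signals_with_atspm}")
print(f"Implementation cost vs. one year of O&M: {cost_share:.1%}")
# Signals with ATSPM: about 1184
# Implementation cost vs. one year of O&M: 4.7%
```

In other words, under that assumption the implementation cost is under 5 percent of a single year of the signal program's operating budget.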

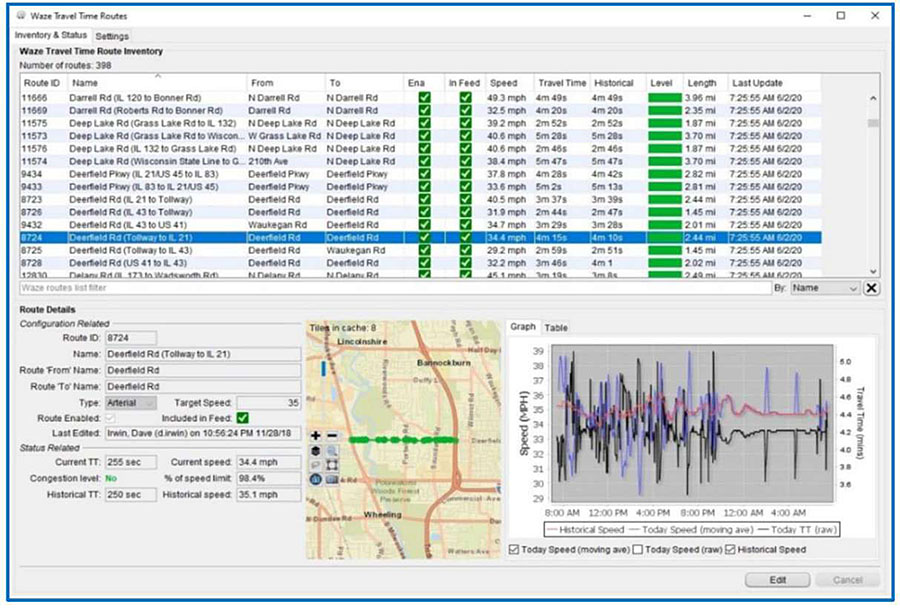

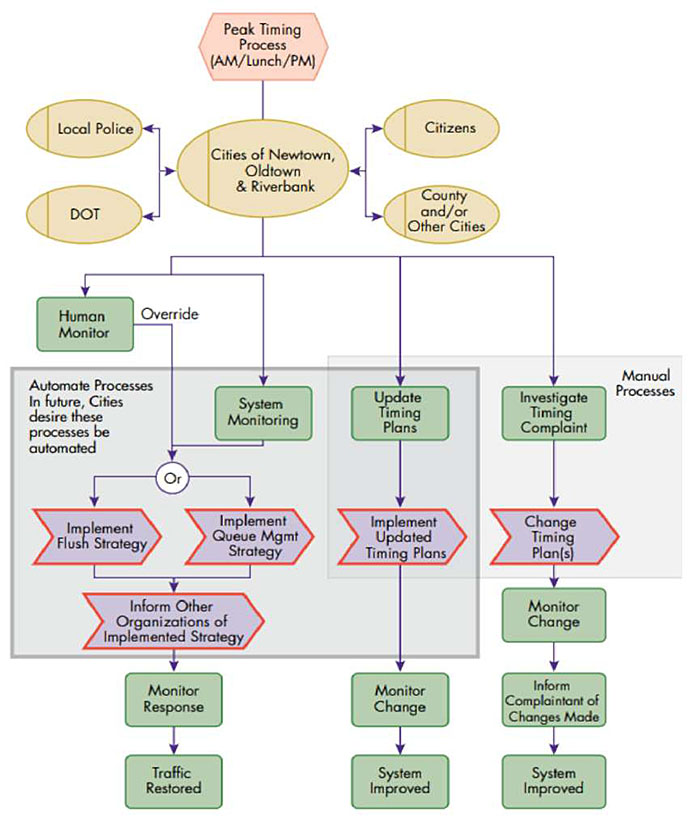

- Incident-Responsive Traffic Signal Systems - By integrating ATSPM data with real-time incident and travel time data obtained through a free partnership with a crowdsourced navigation application, TMC operators in Lake County, Illinois, can modify signal timing to support routing around a roadway temporarily closed by an event such as a crash. The Lake County advanced traffic management system (ATMS), called the Program for Arterial Signal Synchronization and Travel Guidance (PASSAGE), is the hub for data collection, visualization (Figure 2), signal changes, and broader incident response functions.

Figure 2. Crowdsourced Data Integration with Automated Traffic Signal Performance Measure Data in the Lake County, Illinois PASSAGE Advanced Traffic Management System. Source: Legare, 2020.

- The crowdsourcing partnership has been called Waze for Cities since 2019; before 2019, it was titled the Waze Connected Citizen Program. Lake County uses the Waze data, pulled every two minutes, for real-time decisions such as posting traveler information (frequently referred to as 511 services) through web, DMS, and mobile app platforms. When a major crash reduces roadway capacity, Lake County changes corridor signalization in near real time to alter signal progression for detour routing. The PASSAGE operators also monitor the performance of the altered signal progression using both Waze and ATSPM data (acquired at 15-minute intervals) and make adjustments as needed.

Lake County uses the Waze incident and travel time data, together with archived ATSPM data, to conduct before-after studies and modify signalization as regional demand changes. The benefit-cost ratio of timing studies using the combination of free crowdsourced data and ATSPM data, compared to traditional signal coordination and timing (SCAT) studies, ranged from 49:1 in 2015 to 13:1 in 2019. In March 2020, the agency implemented systemwide changes to all agency-owned traffic signals within two days of the state-mandated COVID-19 travel restrictions taking effect. As of 2020, Lake County is exploring the use of Waze travel time data as an input variable for project selection and weather-responsive signal timing. More information on Waze is provided in Section 2.3.2.
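Integrating the two Lake County sources means lining up data collected at different cadences: Waze data pulled every two minutes and ATSPM data at 15-minute intervals. The following is a minimal sketch, not the PASSAGE implementation, assuming each source has already been reduced to timestamped values for one corridor; the record layout, the generic "ATSPM value," and the sample numbers are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean


def bin_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its 15-minute bin."""
    return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)


def align(waze_samples, atspm_bins):
    """Join 2-minute travel time samples to 15-minute ATSPM values.

    waze_samples: list of (timestamp, travel_time_seconds) pairs.
    atspm_bins:   dict mapping a 15-minute bin start to an ATSPM value.
    Returns {bin_start: (mean_travel_time_s, atspm_value)} for bins present
    in both sources.
    """
    grouped = defaultdict(list)
    for ts, travel_time_s in waze_samples:
        grouped[bin_start(ts)].append(travel_time_s)
    return {
        start: (mean(grouped[start]), value)
        for start, value in atspm_bins.items()
        if start in grouped  # membership test does not create empty bins
    }


# Hypothetical data: travel time samples every 2 minutes from 08:00 to 08:28.
t0 = datetime(2020, 3, 2, 8, 0)
waze = [(t0 + timedelta(minutes=2 * i), 300 if i < 8 else 420) for i in range(15)]
atspm = {t0: 12, t0 + timedelta(minutes=15): 5}
joined = align(waze, atspm)
# joined maps the 08:00 bin to (300, 12) and the 08:15 bin to (420, 5)
```

Averaging the finer-grained source up to the coarser bin is one simple design choice; it keeps both sources on the 15-minute grid that the ATSPM archive already uses.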

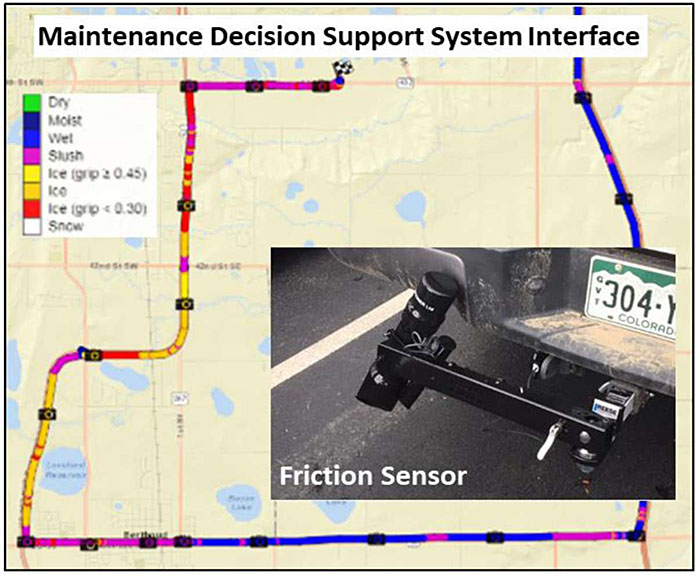

- Friction Sensors Improve Winter Road Operations - The Colorado DOT (CDOT) Division of Highway Maintenance affixed electronic radar devices to the rear of agency trucks to measure roadway temperature, friction, and moisture, and shared the data in real time with its maintenance decision support system (MDSS). The data enabled maintenance workers to focus on roadway segments needing treatment rather than treating whole sections of roadway. Figure 3 presents a picture of the radar device along with a map interface showing the vehicle route and variations in pavement conditions along the route.

- The improved treatment decisions for a few roadways over three storms reduced solid materials use by 21 percent and liquid materials use by 56 percent, saving $180,000 and yielding significant environmental benefits. Based on these findings, CDOT planned a broader technology deployment and associated changes in treatment decision making.

CDOT plans to deploy sensors along corridors with variable weather, where ice and frost conditions are more sporadic and materials use decisions are more complicated. The agency intends to share processed information with Colorado travelers through its traveler information website, COtrip. During storm events, when the COtrip site may receive more than one million visits, travelers could view real-time roadway condition ratings based on the friction sensor data.
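The CDOT approach of treating only the segments that need it can be illustrated with a simple rule that averages mobile friction readings by segment and flags low-friction segments. This is a hypothetical sketch, not CDOT's MDSS logic; the segment IDs, the 0-to-1 friction scale, and the 0.5 threshold are all assumptions.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical threshold, assuming friction is reported as a coefficient
# from 0 (no grip) to 1. Real thresholds depend on the device and policy.
FRICTION_THRESHOLD = 0.5


def segments_needing_treatment(readings, threshold=FRICTION_THRESHOLD):
    """Return IDs of segments whose mean friction falls below threshold.

    readings: iterable of (segment_id, friction) pairs from a truck-mounted sensor.
    """
    by_segment = defaultdict(list)
    for segment_id, friction in readings:
        by_segment[segment_id].append(friction)
    return sorted(seg for seg, values in by_segment.items() if mean(values) < threshold)


readings = [
    ("seg-A", 0.82), ("seg-A", 0.78),
    ("seg-B", 0.41), ("seg-B", 0.38),
    ("seg-C", 0.55), ("seg-C", 0.44),
]
print(segments_needing_treatment(readings))  # ['seg-B', 'seg-C']
```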

The remainder of this module is organized across five sections. Section 2 of this module describes data sources, the types of data generated by each source, and usability considerations. Data are organized into the following five groups: traditional and newer ITS infrastructure data, traffic operations data, crowdsourced data, transportation adjacent data, and emerging big data and sensor technologies.

Figure 3. Colorado DOT Uses Real-Time Friction Sensor Data to Guide Operational Decisions Related to Road Segment Treatment Locations and Types. Source: adapted from Reilly, 2018.

Section 3 introduces the concept of performance management along with the analytical techniques that transform data and inform decisions. Using data without the context of a mission or goal is like navigating a raft through rapids with each person paddling hard but in no unified direction. A performance management framework ensures that the various programs within a transportation agency are “paddling efficiently towards a destination.” Section 3.1 introduces the FHWA transportation performance management framework.

The availability of richer, more granular, and more varied data is complemented by readily accessible analytical techniques. From classification analysis and text mining to video and image analysis, existing software and visualization tools support descriptive and predictive uses. These varied types of analytics are highlighted in Section 3.2. The application of any analytical capabilities should first consider the underlying nature of the data. Thus, Section 3.3 presents differences between traditional, real-time, and advanced analytics in the context of understanding data quality to avoid the ‘garbage in, garbage out’ paradigm.

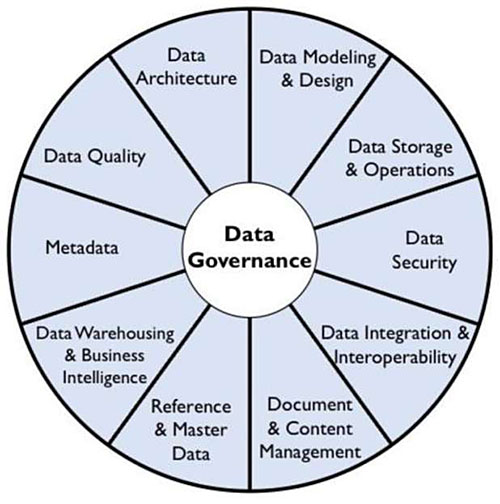

Section 4 introduces key concepts and trends in modern data management and data governance. Data governance is the exercise of authority, control, and shared decision making over data asset management. Traditionally, an authority at the state IT level or within a DOT's IT office prescribes data governance policies. In some instances, ITS groups may exercise greater autonomy in how their data are managed, secured, accessed, stored, and more. This is the focus of Section 4.1.

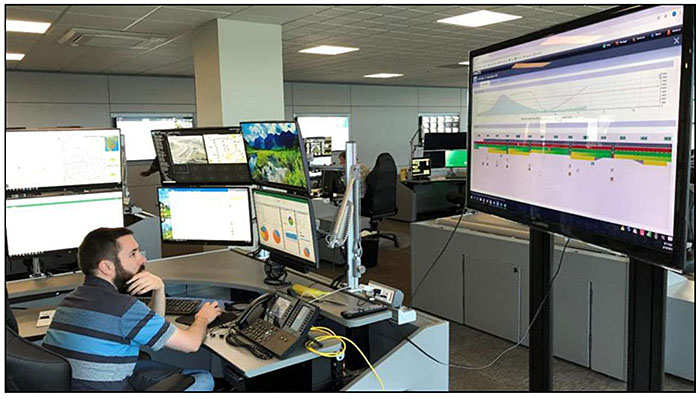

There is a significant shift from centralized data management to a democratization of data. Rather than waiting for formulaic annual or quarterly reports, best practices in data management allow individuals to access and generate analyses as and when needed using real-time and near real-time data. This analytics-on-demand will help improve decision making at all levels of an organization. The democratization of data is highlighted in Section 4.2.

This democratization can only be fully realized through the practice of modern data management principles. Data management for ITS, which began with SQL or other structured databases storing aggregated loop detector speed and flow data, now requires far more flexibility and access. Section 4.3 introduces the principles of modern data management, while Section 4.4 highlights the shift toward proactive analytics for data management. Section 4 summarizes and excerpts content from National Cooperative Highway Research Program (NCHRP) Report 952, Framework for Managing Data from Emerging Transportation Technologies to Support Decision Making.

The three examples presented in this introduction illustrate how data can be collected, analyzed, and applied to inform operational decisions, help an agency improve planning for operations, and support project prioritization. Section 5 of this module provides additional examples of ITS and related data applied to improve decisions at the enterprise, program, and front-line levels of operations. The module closes with Section 6, summarizing the growing opportunities and challenges in the use of ITS and related data for transportation agency decision making.


2. Data Sources and Types

The data landscape for transportation systems management and operations is evolving from where the public transportation agency generates and controls data to where data are generated and controlled by entities outside the transportation agency. ITS ePrimer Module 9 provides an overview of vehicle detection, monitoring, and tracking technologies. This section describes current and emerging sources of data as of 2020, many of which overlap with and extend Module 9. The section focuses on the types of data from various sources through the lens of broader uses in decision making. Data sources are organized into the following five groups:

- Traditional and newer ITS infrastructure data

- Traffic operations data

- Crowdsourced data

- Transportation adjacent data

- Emerging big data and sensor technologies

NCHRP Report 904, Leveraging Big Data to Improve Traffic Incident Management, assesses transportation and non-transportation data across multiple criteria, including data structure, size, storage/management, accessibility, sensitivity, openness, and costs. This NCHRP resource provides insights specific to data and data management.

2.1 Traditional and Newer ITS Infrastructure Data

Data from ITS infrastructure are typically under the ownership and control of transportation agencies, although potentially managed using vendor products. In instances of vendor-managed data, local or state agencies have at times encountered difficulty changing suppliers and systems, due to scale, compatibility, or learning effects. Agencies may also find it difficult to align data from a previous vendor with a new data provider.

Much of the data are structured and fit well in a relational database, except for digital video data (e.g., closed-circuit television or automated license plate readers). These systems require installation and maintenance of roadside technology (embedded in the pavement, mounted above the road, or placed along the side of the road). They also require communications from the roadside to a centralized data processing and analysis capability, typically through fiber or cellular services.

2.1.1 Point Traffic Sensor Data

Traditional ITS infrastructure sensors include loop detectors, magnetic sensors and detectors, video image processors, microwave radar sensors, laser radars, passive infrared and acoustic array sensors, ultrasonic sensors, plus combinations of sensor technologies. The third edition of the FHWA Traffic Detector Handbook, published in 2006, details these signalized intersection and freeway sensor technologies, along with their application, design, installation, and maintenance. Since that publication, advances in sensors, particularly over-roadway sensors, support the collection of traffic flow parameters by lane and direction. Sensor applications for arterial roadways are outlined in the FHWA report, “Arterial Data Collection Technology.” A more comprehensive Traffic Monitoring Guide (2016) “provides the most up to date guidance to state highway agencies in the policies, standards, procedures, and equipment typically used in a traffic monitoring program.”

Some detectors directly measure vehicle passage or presence, while others infer traffic flow and speed based on algorithmic data interpretation. This suite of sensors typically generates data including date, time, sensor identity, roadway location, direction, speed, volume, occupancy, and vehicle classification or weight.

- Sensor data is typically collected at sampling intervals (15, 30, or 60 seconds) and aggregated at one, five, fifteen, or even sixty-minute intervals.

- Sensor data, typically stored in a relational database, can be gigabytes or even terabytes in size depending on the entity (state, region, local) and aggregation level before archiving.

- Sensor data, typically collected and owned by the transportation agency, had commonly been managed on premises and made available electronically for free, but only in aggregate form. Agencies are now shifting to vendor-based data management systems that integrate storage and analytics (e.g., Statewide Traffic Analysis and Reporting System (STARS II) or Performance Measurement System (PeMS)).

- The quality of this data is tied to the agency’s ability to detect sensor drift and perform routine and timely recalibration, as well as maintain the precise location of sensors.
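The storage and aggregation pattern described in the list above can be sketched with Python's built-in sqlite3 module, using a relational table whose columns follow the fields listed earlier. The schema, detector ID, and five-minute bin are illustrative assumptions. Speed is weighted by volume so that samples with more vehicles count more; this is one common choice among several.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE detector_data (
        sensor_id  TEXT    NOT NULL,
        direction  TEXT    NOT NULL,
        ts         INTEGER NOT NULL,  -- Unix epoch seconds at start of sample
        volume     INTEGER NOT NULL,  -- vehicles counted in the 30-second sample
        speed_mph  REAL    NOT NULL,  -- average speed during the sample
        occupancy  REAL    NOT NULL   -- percent of time the detector was occupied
    )
    """
)

# Ten hypothetical 30-second samples (five minutes) from one detector.
start = 1_600_000_200  # a multiple of 300, so all samples fall in one bin
samples = [("D101", "NB", start + 30 * i, 10, 60.0 - i, 8.0) for i in range(10)]
conn.executemany("INSERT INTO detector_data VALUES (?, ?, ?, ?, ?, ?)", samples)

# Roll up to five-minute bins. Integer division floors ts to the bin start;
# speed is volume-weighted so busier samples count more.
rows = conn.execute(
    """
    SELECT sensor_id, direction,
           (ts / 300) * 300                      AS bin_start,
           SUM(volume)                           AS volume,
           SUM(volume * speed_mph) / SUM(volume) AS speed_mph,
           AVG(occupancy)                        AS occupancy
    FROM detector_data
    GROUP BY sensor_id, direction, bin_start
    ORDER BY sensor_id, bin_start
    """
).fetchall()
print(rows)  # [('D101', 'NB', 1600000200, 100, 55.5, 8.0)]
```

Changing the divisor (900 or 3,600 seconds) yields the fifteen- or sixty-minute aggregates mentioned above.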

For example, the Maryland DOT makes real-time sensor speed data available for over 250 road segments through web feeds (Really Simple Syndication, RSS, or Extensible Markup Language, XML) and at five-minute intervals, but aggregates speed data to three speed ranges. Likewise, the Texas DOT makes volume, vehicle class, speed, and other data available through their STARS II system, but not as a real-time product.
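Publishing coarse speed ranges instead of raw speeds is straightforward once a feed is parsed. The sketch below assumes a hypothetical XML layout and hypothetical range boundaries; it does not reproduce the actual Maryland DOT feed or its ranges.

```python
import xml.etree.ElementTree as ET

# Hypothetical feed layout; the actual Maryland DOT feed schema may differ.
FEED = """<speeds>
  <segment id="S1" speed="62"/>
  <segment id="S2" speed="41"/>
  <segment id="S3" speed="18"/>
</speeds>"""

# Hypothetical lower bounds in mph, checked from highest to lowest.
RANGES = [(50, "fast"), (30, "moderate"), (0, "slow")]


def speed_range(mph: float) -> str:
    """Map a point speed to one of three published ranges."""
    for lower, label in RANGES:
        if mph >= lower:
            return label
    raise ValueError(f"negative speed: {mph}")


def publish_ranges(xml_text: str) -> dict:
    """Parse segment speeds and return only the coarse range for each segment."""
    root = ET.fromstring(xml_text)
    return {seg.get("id"): speed_range(float(seg.get("speed"))) for seg in root.iter("segment")}


print(publish_ranges(FEED))  # {'S1': 'fast', 'S2': 'moderate', 'S3': 'slow'}
```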

The California DOT PeMS system, an Iteris product, collects data from nearly 40,000 freeway detectors as well as other traditional and newer ITS infrastructure. For example, PeMS specifies that it provides 24-hour weigh-in-motion (WIM) data from 110 sites calibrated to +/- 5 percent accuracy. The data, including axle weights and gross weight, axle spacing, speed, vehicle classification, and overall length, are processed using algorithms and made available through a web-based user interface that helps the agency conduct pavement studies and accident rate calculations.

While sensors continue to be the mainstay for monitoring traffic volumes, many agencies are supplementing infrastructure sensors with vehicle probe data. The iPeMS system, an Iteris product, integrates PeMS with probe data from HERE, and the Regional Integrated Transportation Information System (RITIS) provides probe data from INRIX. When sensor data are integrated with probe data through vendors, access and sharing become restricted, and issues of data ownership may limit use. A two-minute Utah DOT video on iPeMS highlights this change.

2.1.2 Point Environmental Sensor Data

An environmental sensing station (ESS) is the hub for an array of sensors to measure pavement condition (e.g., temperature, dry, wet, ice, freeze point, chemical concentration), wind speed/direction, precipitation/accumulation, air temperature, relative humidity, visibility, water level/flooding, snow depth, subsurface conditions and more. Data from field ESS are processed through Road Weather Information System (RWIS) communications, hardware, and software.

- Many state and local agencies use private vendors to manage and tailor RWIS data through proprietary communications and processing, with data typically stored in a relational database.

- This data’s quality is tied to the agency or vendor’s ability to detect sensor drift and perform routine and timely recalibration. Drift refers to the gradual degradation of the sensor and other components that can make readings offset from the original calibrated state. RWIS providers typically embed some quality checks and more advanced data verification and corrections to counter sensor drift. The FHWA Weather Data Environment (WxDE) calculates nearly a dozen quality control metrics, such as checking for data persistence and the rate of change in the data.

- Data and technology challenges cited in the 2020 Road Weather Management Stakeholder Meeting included software or hardware glitches, RWIS station repair needs, system reliability, and data timeliness, accuracy, and availability.

These data feed strategic and tactical winter weather maintenance decisions and are applied by many state DOTs to non-winter weather events such as rain, flooding, or fog. Agencies also use this data to inform travelers and for variable speed limit (VSL) strategies.
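Two of the quality control ideas mentioned above, persistence and rate-of-change checks, can be sketched as simple flags over a series of readings. The thresholds and sample temperatures are hypothetical, and these simplified checks are not the WxDE algorithms themselves.

```python
def persistence_flags(values, max_repeats):
    """Flag readings once the same value has repeated more than max_repeats
    times in a row, a possible sign of a stuck sensor."""
    flags, run, previous = [], 0, None
    for v in values:
        run = run + 1 if v == previous else 1
        flags.append(run > max_repeats)
        previous = v
    return flags


def step_flags(values, max_step):
    """Flag readings that differ from the previous reading by more than
    max_step, a possible sign of a spike or sensor fault."""
    flags = [False] if values else []
    for prev, cur in zip(values, values[1:]):
        flags.append(abs(cur - prev) > max_step)
    return flags


# Hypothetical pavement temperatures (deg F) at a regular reporting interval.
temps = [31.0, 31.2, 31.2, 31.2, 31.2, 45.0, 31.4]
print(persistence_flags(temps, max_repeats=3))  # [False, False, False, False, True, False, False]
print(step_flags(temps, max_step=5.0))          # [False, False, False, False, False, True, True]
```

A single spike is flagged twice by the step check, once on the jump and once on the return, so such flags are best treated as prompts for review rather than automatic deletions.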

2.1.3 Automated Vehicle Identification (AVI) and Bluetooth

Automated vehicle identification (AVI) and Bluetooth technologies use a network of roadside sensors to estimate travel time between and across sensors. Both systems stitch together location and time data from devices passing near the sensors to estimate average travel time, travel speed, and travel patterns among sensors. The primary purpose of AVI is toll payment, so AVI readers are typically located at tolling points; additional readers may be strategically positioned to estimate travel time over shorter segments or paths. Bluetooth detectors track the media access control (MAC) addresses of drivers’ devices.

- AVI data is typically not shared at the granular level due to the concern for personally identifiable information. Instead, the travel time is shared as an average. Similarly, Bluetooth vendors make available only summary statistics and averages rather than vehicle-level data.

- For both systems, the quality of the travel time estimate degrades with lower AVI transponder or Bluetooth sensor density along the road network. Because a travel time is only available once a vehicle reaches the second sensor, a 20-minute travel time between two toll readers or Bluetooth sensors reflects conditions from 20 minutes ago.

- The frequency of communications from the Bluetooth sensor to the central data processing point may further degrade information timeliness.
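The matching step behind AVI and Bluetooth travel times can be sketched as pairing the same anonymized device at two readers and differencing the detection times. This is a minimal sketch under stated assumptions: the salted SHA-256 hashing, the sample detections, and the 30-minute plausibility cap are illustrative, not any vendor's method.

```python
import hashlib
from statistics import median


def anonymize(mac: str, salt: str) -> str:
    """Replace a MAC address with a salted hash so the raw ID is not stored."""
    return hashlib.sha256((salt + mac.upper()).encode()).hexdigest()


def first_detections(reads):
    """Earliest detection time per device ID from (device_id, epoch_s) pairs."""
    first = {}
    for device, t in reads:
        if device not in first or t < first[device]:
            first[device] = t
    return first


def travel_times(upstream, downstream, max_tt_s):
    """Travel times (s) for devices seen at both readers, dropping implausible matches
    (e.g., a vehicle that stopped along the way)."""
    up, down = first_detections(upstream), first_detections(downstream)
    times = (down[d] - up[d] for d in sorted(up.keys() & down.keys()))
    return [tt for tt in times if 0 < tt <= max_tt_s]


salt = "hypothetical-salt"  # rotating the salt limits long-term tracking
up = [(anonymize(m, salt), t) for m, t in
      [("aa:bb:cc:00:00:01", 1000), ("aa:bb:cc:00:00:02", 1010), ("aa:bb:cc:00:00:03", 1020)]]
down = [(anonymize(m, salt), t) for m, t in
        [("AA:BB:CC:00:00:01", 1600), ("AA:BB:CC:00:00:02", 1670), ("AA:BB:CC:00:00:03", 5000)]]

tts = travel_times(up, down, max_tt_s=1800)
print(sorted(tts), median(tts))  # [600, 660] 630.0
```

Each matched value is only known at the downstream detection time, which is the source of the lag described in the list above; publishing a median rather than vehicle-level records matches the summary-only sharing practice noted there.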

AVI and Bluetooth data are often used to monitor real-time system performance and have been used to detect potential incidents along major highways. As toll roads expand across the country, operators turn to smartphone applications for payment and sharing toll rates. Archived analyses of smartphone data can provide better congestion pricing toll models; however, most congestion pricing models are proprietary.

2.1.4 Automatic Vehicle Location (AVL) Based Sensors

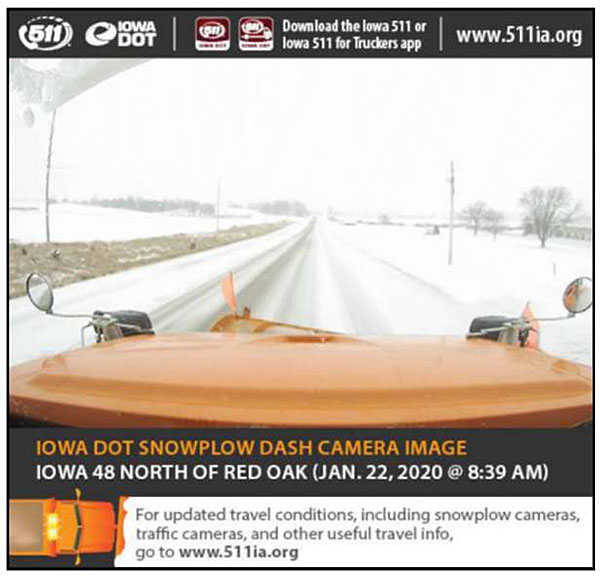

Automatic vehicle location (AVL) tracks a vehicle (e.g., buses, snowplows, safety service patrol vehicles), typically using global positioning system (GPS) technology and a wireless data communications system. The data generated typically include vehicle, operator, route, event (e.g., arrival time), and geospatial location data. In addition to position data, agencies collect application-specific data from onboard vehicle and value-added sensor systems. For example, for transit vehicles, fare collection, ridership, speed profile, stop arrival and departure times, or signal priority data may be coupled with AVL. Data such as pavement temperature, air temperature, materials application rate and type, engine diagnostics, or even dashcam images may be captured and transmitted from road weather maintenance vehicles, as illustrated in Figure 4. These value-added sensors reflect the IoE strategy and edge computing, in which sensors are embedded in physical objects and often collect, filter, analyze, aggregate, and transmit select performance data to save bandwidth and provide real-time information.

Figure 4. Iowa DOT Snowplow Dash Camera Image. Source: Iowa DOT.

- Depending on the application, AVL data may be captured for real-time and/or archived use at intervals ranging from milliseconds to a few minutes.

- AVL data is typically stored using vendor-hosted cloud-based solutions given the size of this data; some agencies archive the processed data using a GIS database.

- AVL data quality issues do not typically involve the AVL position data, except on streets lined by tall buildings on both sides, which can degrade the GPS signal at ground level. Likewise, GPS quality can be an issue in rural and mountainous areas, where a lack of adequate cellular coverage can also create data gaps.

- Agencies using AVL often note challenges with IoE value-add sensor installation and calibration, and data compatibility and retrieval issues. For example, MDSS may experience challenges with the imprecision of data, such as material application rates.

These data can be used immediately for daily operations, such as predicting and sharing bus arrival times or making AVL breadcrumb data publicly available through 511 to show snowplow locations and even dashcam images of road weather conditions. These data support real-time operations and maintenance decision support. Archived AVL and accompanying sensor data can be applied to improve routing and schedule adherence. These data can also be integrated with broader transportation data to make policy and planning decisions based on performance trends.
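AVL breadcrumbs become speed information by computing the distance between consecutive GPS fixes. The sketch below uses the haversine great-circle formula (a spherical-Earth approximation) and adds a simple plausibility screen of the kind that can catch position jumps from degraded GPS, such as in the urban canyons described above. The coordinates, timestamps, and 90 mph limit are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000   # mean Earth radius (spherical approximation)
MPS_TO_MPH = 2.23694         # meters per second to miles per hour
MAX_PLAUSIBLE_MPH = 90       # hypothetical plausibility limit


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def leg_speeds_mph(pings):
    """Speed for each consecutive pair of (epoch_s, lat, lon) pings sorted by time.

    Returns (speed_mph, plausible) tuples; pairs with non-increasing time are skipped.
    """
    out = []
    for (t1, la1, lo1), (t2, la2, lo2) in zip(pings, pings[1:]):
        if t2 <= t1:
            continue  # duplicate or out-of-order ping
        mph = haversine_m(la1, lo1, la2, lo2) / (t2 - t1) * MPS_TO_MPH
        out.append((mph, mph <= MAX_PLAUSIBLE_MPH))
    return out


# Hypothetical snowplow breadcrumbs: the third fix jumps about 5.6 km in 60 s.
pings = [
    (0, 39.7000, -104.9900),
    (60, 39.7100, -104.9900),
    (120, 39.7600, -104.9900),
]
for mph, ok in leg_speeds_mph(pings):
    print(f"{mph:.1f} mph, plausible={ok}")
```

Running a screen like this on the vehicle, before transmission, is one way to apply the edge-computing idea of filtering data to save bandwidth.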

2.1.5 Traffic Digital Video

Closed-circuit television (CCTV) cameras use optical sensors to derive traffic intelligence from video. CCTV systems are a central component of TMCs and responder communities. Video data can capture personally identifiable information (PII) and exceed terabytes per day; thus, fixed CCTV video is generally not stored, or is stored for only a very short duration (e.g., two weeks). The CCTV video is monitored by operators and often shared in real time with travelers and responder communities.

Several agencies mount CCTV cameras with pan, tilt, zoom, and video streaming capabilities on safety service patrol vehicles. For example, the Maryland and Washington State DOTs use cellular services and AVL/GPS data to transmit CCTV video from their safety service patrol vehicle fleets at the scene of an incident. Some agencies also provide responders (e.g., law enforcement, fire, or emergency medical services (EMS)) with a platform for sharing high-resolution video feeds, distinct from the lower-quality public video feeds. These feeds let responders conduct a camera tour of the site before arriving to better understand the situation, the approach direction, and the potential need for additional resources. These are examples of data sharing without data archiving.

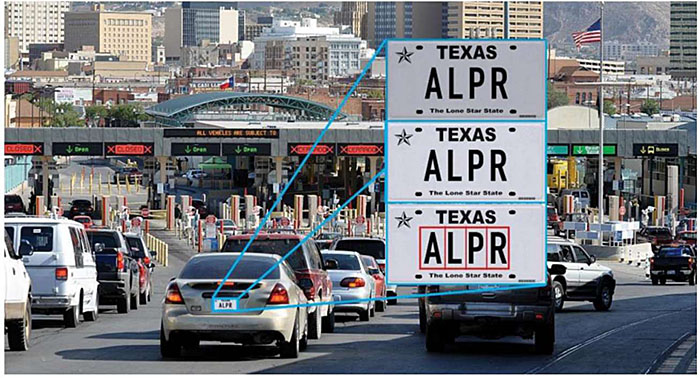

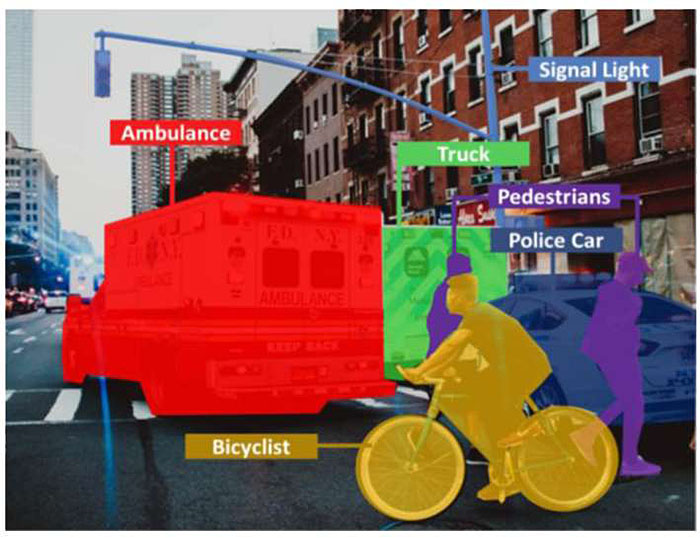

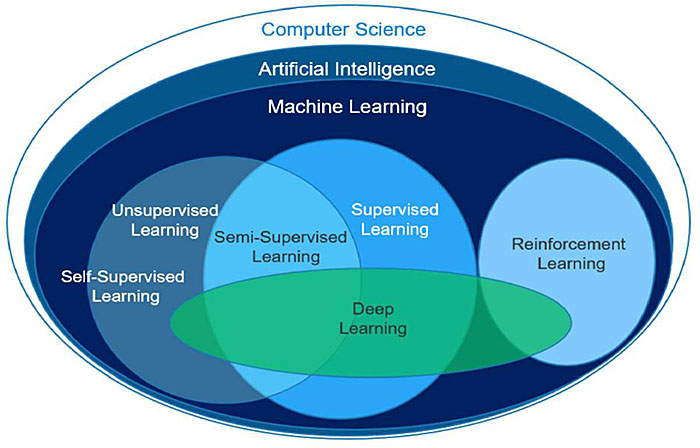

Video can be processed using image analysis for real-time decision making and to archive data for downstream analyses. For example, video can be analyzed to detect and alert operators to potential incidents, wrong-way driving, slow speeds/congestion, or pedestrians on the roadway. Image analyses can also collect data such as vehicle counts (parked or moving traffic), turning movements, vehicle types (truck, car, bicycle, pedestrian), and more. Image analysis is an area of significant growth using artificial intelligence, and more specifically deep learning, further discussed in Section 3. An early example of image processing is automatic license plate reader (ALPR) systems, as illustrated in Figure 5. These systems match images at a downstream sensor to estimate travel time and travel routes. Bluetooth and other lower-cost technologies have supplanted ALPR for this purpose, but ALPR continues to be used for law enforcement (e.g., stolen vehicle detection or toll violations), parking counts, and parking enforcement. An emerging complement to CCTV is unmanned aircraft systems (UAS), often called drones. This technology is introduced in Section 2.5.2.

2.1.6 Traffic Signal Data

Traffic signal system maturity and connectivity vary significantly, and with them the quantity, quality, and frequency of data available to support all levels of decision making. In the absence of smart signals, traffic engineers use point sensors such as pneumatic tubes to measure traffic volume and estimate turning movements as needed, perhaps every 3 to 5 years. Data from these sources are typically aggregated into 15-minute or hourly counts over a few days. Engineers also use travel time data from floating car studies, in which vehicles traverse corridors, typically before and after signal improvements, to measure changes in travel time and stopped time at signals. These older data types are often stored in spreadsheets, potentially on an individual laptop rather than on a server accessible to broader users. An intermediate approach, used with traditional (non-adaptive) signal systems, relies on detectors placed a few hundred feet in advance of the signal, typically for demand-responsive timing, which also capture traffic volume data. The volume data may be stored in and retrieved from the traffic signal controller.

Figure 5. License Plate Reader Data Reduction Process Illustration. Source: Zmud, 2016.

More than a dozen adaptive traffic control systems (ATCS), most notably the Split Cycle Offset Optimization Technique (SCOOT) and the Sydney Coordinated Adaptive Traffic System (SCATS), collected traffic demand data in real time to adjust signal timing and optimize traffic flow in coordinated traffic signal systems from 2004 through 2010. These systems use multiple point sensors to improve through and turning movements at an intersection and across a set of intersections. ATCS often apply traffic models to identify signal strategies. ATCS require communications and can be integrated with the agency’s ATMS. There are numerous vendors of adaptive traffic control systems, and data typically reside within the system. Figure 6 illustrates a conceptual high-level flow diagram for adaptive signal control with network characteristics, types of adaptive operations, and system interfaces.

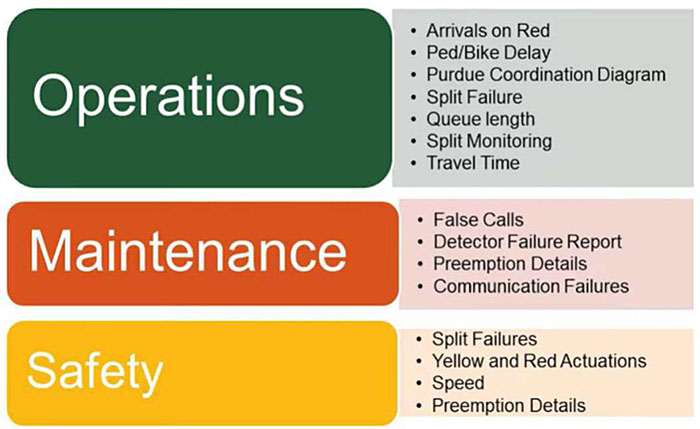

Through collaboration among FHWA, AASHTO, and state and city DOTs, ATSPM technology emerged between 2012 and 2016, adding high-resolution data-logging and analysis capability to existing traffic signal infrastructure. A 2014 report by Purdue University and the Indiana DOT defined the portfolio of hundreds of discrete signal events captured from the signal controller and connected sensors to support system operations, maintenance, and safety.

With ATSPM, signal system management shifts from cyclical (every 3 to 5 years), citizen complaint-based, or emergency-based management to performance-based and preventive management. Figure 7 summarizes many of the high-value performance measures from ATSPM, while Figure 8 identifies the hardware, sensors, communications, and software needed to deliver the dashboards and analyses that drive operator decisions.

Figure 6. Adaptive Traffic System Conceptual Architecture. Source: FHWA.

The open-source ATSPM software from Utah DOT, like other vendor systems, takes raw data files (controller data logs at 1/10th-second resolution) and, through postprocessing, aggregates the data into 15-minute bins using a set of metric algorithms. Setting up ATSPM requires detailed intersection- and sensor-specific information along with system characteristics such as data latency corrections for specific sensors, or speed limits. ATSPM is not a sensor but rather a system that integrates data from traffic controllers and other intersection sensors and makes these data available through analytics platforms to support improved systems management decisions. A detailed list of ATSPM resources is available through the FHWA ATSPM site (https://ops.fhwa.dot.gov/arterial_mgmt/performance_measures.htm).
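As a rough illustration of the postprocessing step, the sketch below bins high-resolution controller events into 15-minute detector counts. It assumes each log record is a (timestamp, event code, parameter) tuple and that event code 82 marks a detector actuation ("detector on"), as in the Indiana high-resolution enumerations; both the record layout and the code value should be checked against the controller actually in use. It is not the Utah DOT implementation.

```python
from collections import Counter
from datetime import datetime

DETECTOR_ON = 82  # assumed "detector on" event code; verify for your controller


def bin_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its 15-minute bin."""
    return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)


def volumes_by_bin(events):
    """Count detector actuations per (detector channel, 15-minute bin).

    `events` is an iterable of (timestamp, event_code, parameter) tuples,
    where `parameter` is the detector channel for detector events.
    """
    counts = Counter()
    for ts, code, param in events:
        if code == DETECTOR_ON:
            counts[(param, bin_start(ts))] += 1
    return dict(counts)


# Hypothetical tenth-of-a-second log excerpt for detector channels 5 and 6.
log = [
    (datetime(2021, 3, 1, 7, 0, 0, 100000), 82, 5),
    (datetime(2021, 3, 1, 7, 0, 1, 300000), 81, 5),  # detector off: ignored
    (datetime(2021, 3, 1, 7, 14, 59, 900000), 82, 5),
    (datetime(2021, 3, 1, 7, 15, 0), 82, 5),
    (datetime(2021, 3, 1, 7, 15, 2), 82, 6),
]
print(volumes_by_bin(log))
```

A real ATSPM pipeline also handles detector latency corrections and many other event types; counting actuations is only the simplest of its metrics.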

Figure 7. Performance Measure Categories from Automated Traffic Signal Systems. Source: Curtis34

Figure 8. System Requirements for Automated Traffic Signal Performance Measures. Source: Gotfredson35

2.2 Traffic Operations Data

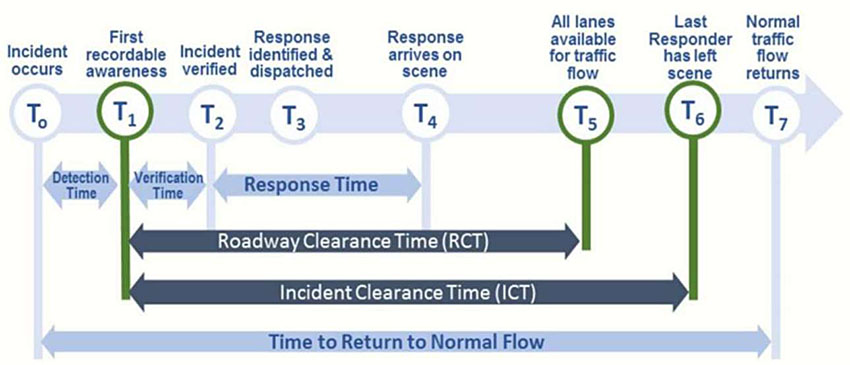

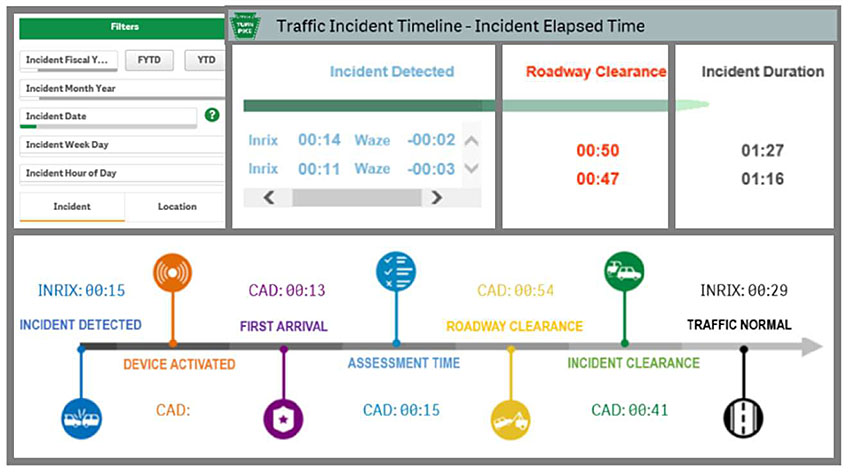

Transportation management centers operated by state and local DOTs generate data related to the operations of the transportation system. For example, the TMC operator will input data specific to incidents, including location, lanes blocked and duration, response deployed, and potentially other data specific to an incident timeline, as illustrated in Figure 9. These data are typically stored within the ATMS, with both manual/observational entry elements and automated data generation. Most agencies collect T1 and T5 (the first recordable awareness of the incident and the reopening of all lanes, respectively), but few collect all seven timeline elements, particularly in locations with limited sensor coverage such as CCTV. Some ATMS also let operators check a box to flag secondary crashes. As ATMS mature with video analytics and AVL-equipped vehicles, many of the timeline components may be passively recorded with far greater consistency, accuracy, and precision.
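The timeline elements in Figure 9 translate directly into national TIM measures. The sketch below assumes the common FHWA timeline labels, in which T1 is the first recordable awareness of the incident, T5 is when all lanes are reopened, and T6 is when the last responder leaves the scene; roadway clearance time is then T5 minus T1, and incident clearance time is T6 minus T1. The incident record layout is hypothetical, and a missing timestamp (common when only some timeline elements are logged) yields None rather than a guessed value.

```python
from datetime import datetime
from typing import Optional


def minutes_between(start: Optional[datetime], end: Optional[datetime]) -> Optional[float]:
    """Elapsed minutes, or None if either timestamp was not logged."""
    if start is None or end is None:
        return None
    return (end - start).total_seconds() / 60


def tim_measures(incident: dict) -> dict:
    """Compute clearance times from a hypothetical record keyed 'T1'..'T7'."""
    t1 = incident.get("T1")
    return {
        "roadway_clearance_min": minutes_between(t1, incident.get("T5")),
        "incident_clearance_min": minutes_between(t1, incident.get("T6")),
        "secondary_crash": bool(incident.get("secondary_crash", False)),
    }


incident = {
    "T1": datetime(2020, 6, 2, 16, 5),
    "T5": datetime(2020, 6, 2, 16, 41),
    "T6": None,  # last responder departure not logged
    "secondary_crash": True,  # e.g., from the ATMS checkbox
}
print(tim_measures(incident))
```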

Transportation management center operators may manually log safety service patrol (SSP) response data (e.g., number, location, and duration of incident responses by type of response). Often the SSP logs these data through a mobile in-vehicle platform or on paper. Data specific to location and route may be collected from the SSP AVL or may be available only as a static planned log of routes and frequencies by time of day and facility.

Figure 9. National Traffic Incident Management (TIM) Performance Measures. Source: FHWA36.

Many other operational decision data, such as variable message sign postings of travel times, advisories, or variable speed limits, are typically logged automatically within the ATMS. The TMC may also log activation and duration for dynamic ramp metering rates, dynamic part-time shoulder use, and other active traffic and demand management strategies. These structured, semi-structured, and often geocoded data support the refinement of operational strategies for traffic and demand management. The quality, completeness, and consistency of these data vary depending on whether the data are entered manually by operators or generated automatically through time stamps of operator actions.
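Where strategy activations are time-stamped, durations can be derived by pairing each activation with the next deactivation of the same device. The sketch below assumes a hypothetical log of (timestamp, device ID, action) rows with made-up device IDs, and it flags activations that were never closed, one of the completeness problems that manual entry tends to introduce.

```python
from datetime import datetime


def activation_durations(log):
    """Pair 'on'/'off' rows per device; return (durations, unclosed device IDs).

    `log` holds hypothetical (timestamp, device_id, action) rows with
    action in {"on", "off"}; rows are processed in time order.
    """
    open_since = {}
    durations = []
    for ts, device, action in sorted(log):
        if action == "on":
            open_since.setdefault(device, ts)  # ignore duplicate 'on' rows
        elif action == "off" and device in open_since:
            start = open_since.pop(device)
            durations.append((device, start, (ts - start).total_seconds() / 60))
    return durations, sorted(open_since)


log = [
    (datetime(2020, 9, 1, 6, 30), "RM-12", "on"),    # hypothetical ramp meter
    (datetime(2020, 9, 1, 9, 0), "RM-12", "off"),
    (datetime(2020, 9, 1, 15, 0), "PTSU-3", "on"),   # hypothetical shoulder segment, never closed
]
durations, unclosed = activation_durations(log)
print(durations, unclosed)
```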

2.3 Crowdsourced data

Broadly defined, crowdsourcing is “the practice of addressing a need or problem by enlisting the services of a large number of people via technologies.” FHWA Every Day Counts Round Five (EDC-5) identifies transportation crowdsourced data as data collected through vehicle probe vendors (e.g., INRIX, HERE, TomTom, Verizon, Google, StreetLight Data), mobile applications (apps) data (e.g., Waze, state 511 apps, 311 apps), and social media data (e.g., Twitter, Facebook).

- Data from most of these sources, apart from agency-owned apps, are typically not under the ownership and control of transportation agencies. Often data are black-box products with limited provenance (unclear what sources generate the data) and have limitations in use and sharing.

- Some data are actively submitted by travelers, while the preponderance of data is passively collected from travelers.

- Many of these data are unstructured, textual in nature, and non-standard, varying from one data feed to the next.

Common types of data generated through crowdsourcing include speed, travel time, events (e.g., crashes, stalled vehicle, flooding), infrastructure state (e.g., signal outage, potholes), travel behavior (route and mode choice), sentiment data (e.g., perception of agency or road performance). The following subsections provide details specific to vehicle probe, mobile application, and social media data.

2.3.1 Vehicle Probe Data

Vehicle probe vendors have been aggregating data using fleet and driver navigational GPS, cellular geolocation, and state DOT sensors for over a decade to provide real-time link speed data and archived origin-destination and path data. As of 2020, this data type does not offer traffic volume estimates. Vehicle probe data vendors now use connected vehicle data and provide analytics packages, transforming the data to support real-time decision making through alerts and performance measures. For example, INRIX announced in 2020 an intersection analytics platform that captures stop, go, and turning movements based on granular data from connected cars.

Agencies complement vehicle probe data with ITS infrastructure sensor data for more precise travel time and speed estimation on freeways and arterial roadways. Agencies are beginning to retire ITS infrastructure sensors between interchanges in favor of vehicle probe data while keeping the ITS sensors at interchanges to capture traffic volume and vehicle type data.

Most real-time vendors offer data on roadway segments defined by Traffic Message Channel codes, a vendor-independent standard, and on subsegments based on vendor-specific standards. For example, INRIX provides INRIX XD segments, dynamically defined with data granularity to a tenth of a mile. Data fields vary by vendor and vendor product. They typically include reference speed (usually a free-flow speed), current speed, historical average speed, travel time, congestion level, segment identification, and a data confidence or quality metric. Vendors also provide graphical representations of road speeds, called traffic tiles, used as overlays on 511 web or mobile maps. Data are transmitted through a web service application programming interface (API), often over Hypertext Transfer Protocol (HTTP) in XML or JavaScript Object Notation (JSON) format. HERE, INRIX, and TomTom interface guides available through The Eastern Transportation Coalition (TET Coalition) offer detailed information on road network architecture, data and map specifications, and API calls. The following are general characteristics specific to vehicle probe data:

- Most real-time vehicle probe data are transmitted at a one to two-minute frequency.

- The vendor agreement specifies the terms, conditions, and limitations for the use of their data. These include, for example, who may access the data and for what uses.

- The quality and coverage of vehicle probe data have improved significantly and continue to improve, particularly for arterial roadways. Data users should pay attention to data transmission settings that may substitute historical data where real-time data are unavailable; such settings may produce data that dramatically misestimate current traffic conditions. Since 2009, the TET Coalition has been validating vendor data from INRIX, HERE, and TomTom, mainly for freeway road segments, using Wi-Fi and/or Bluetooth sensors. The TET Coalition also conducted arterial validation in 2015 and 2019. Data validation reports are publicly available (https://tetcoalition.org/projects/transportation-data-marketplace/).

- Some agencies validate vehicle probe data using their point infrastructure data. The validation of these data in one region or corridor may not reflect similar accuracies in another region due to the variances in traveler or fleet use of specific smartphone or navigation devices.

- Many agencies use analytics services (e.g., RITIS and iPeMS) to ingest and process vehicle probe data. A few agencies have developed their own analytics platforms in-house, through consultants, or with support from local universities (e.g., Indiana DOT Traffic Ticker and Delta Speed tools). These analytics applications are described in Section 4, decision-making examples.

- The cost of these data varies by the size of the road network for which data is required, vendor, and level of data sharing, often exceeding a few hundred thousand dollars annually. Moreover, due to network segmentation differences, shifting from one vendor to another is a significant undertaking.
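The API and validation characteristics above can be illustrated with a short sketch that reads a probe-speed payload, sets aside readings backfilled from history, and compares the remaining speeds with an agency's own point sensors. The JSON layout and field names (segment_id, speed_mph, reference_speed_mph, data_source) are hypothetical stand-ins for whatever the vendor's interface guide specifies, and the mean absolute error and bias shown are a simplified form of the comparisons used in probe-data validation.

```python
import json
from statistics import mean

# Hypothetical payload; real vendor schemas differ.
payload = """
{"segments": [
  {"segment_id": "A1", "speed_mph": 52, "reference_speed_mph": 65, "data_source": "realtime"},
  {"segment_id": "A2", "speed_mph": 64, "reference_speed_mph": 65, "data_source": "historical"},
  {"segment_id": "A3", "speed_mph": 31, "reference_speed_mph": 60, "data_source": "realtime"}
]}
"""


def realtime_speeds(raw: str) -> dict:
    """Return {segment_id: speed} for readings not backfilled from history."""
    segments = json.loads(raw)["segments"]
    return {s["segment_id"]: s["speed_mph"] for s in segments
            if s["data_source"] == "realtime"}


def validate(probe: dict, sensor: dict) -> dict:
    """Mean absolute error and mean bias (probe minus sensor) on shared segments."""
    shared = sorted(probe.keys() & sensor.keys())
    errors = [probe[k] - sensor[k] for k in shared]
    return {
        "n": len(errors),
        "mae_mph": mean(abs(e) for e in errors) if errors else None,
        "bias_mph": mean(errors) if errors else None,
    }


probe = realtime_speeds(payload)
sensor = {"A1": 55, "A2": 58, "A3": 30}  # hypothetical point-sensor speeds
print(validate(probe, sensor))
```

As the list notes, results like these hold only for the corridor tested and may not transfer to another region.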

State and metropolitan planning organizations (MPOs) can also access archived probe data on the National Highway System (NHS) from FHWA through the National Performance Management Research Data Set (NPMRDS). This data is made available through RITIS and is based on data from INRIX as of 2017, previously provided by HERE.

Archived data vendors that provide origin-destination and path data also use anonymized location records from smartphones and navigation devices in connected cars and trucks. INRIX Trips and StreetLight Data are two vendors offering this type of data. Analytics also offered by these vendors can provide data such as annual average traffic counts, demand and routes between zones, modal use, turning movements, and other metrics to support transportation planning. As with real-time probe data, validation of these data in one region or corridor may not reflect similar accuracies in another region due to the variances in travelers’ use of specific smartphone service providers or navigation devices.

2.3.2 Mobile Applications Data

The most prevalent source of free mobile application data is the Waze for Cities partnership (formerly the Waze Connected Citizens Program), which uses active and passive data collection. Many state, county, or city agencies also collect data using 511, 311, and other apps, mainly through active reporting by travelers who use their apps. Data generated by these mobile applications are described here.

Waze for Cities derives data from its navigation app users, who actively report traffic and event data and passively share GPS location data. Hundreds of towns, cities, counties, and states are Waze for Cities partners and access the data free of charge. Waze for Cities partners can visually observe data through Waze’s traffic viewer tool and can acquire jams and alerts data every two minutes in XML or JSON format through an API.

Many agencies now make available Waze reports as a layer within their 511 platforms. For some agencies, data attribution may present a partnering hurdle with Waze. As of 2020, the Waze preference is to only attribute live data when used in the agency’s traveler information system, with requirements to remove Waze attribution as the data becomes old and is used through analyses of archived data. Data from Waze is geospatially tagged and includes timestamp, latitude/longitude location, average speed, jam length, road type, reliability score (16), and confidence score (0-10) data. Alert data also includes a jam category and accident, road closed, and weather hazard categories, each with the following subcategories:

- Accident refers to crashes and is categorized as a major, minor, or unspecified type. These data are based on reports from Waze users with inherent imprecision.

- Jam refers to the level of traffic, with standstill, heavy, moderate, and light subtypes. No documentation is available explaining how these subtype definitions relate to the GPS-based travel times Waze pulls from its users.

- Road closed subtypes include construction (typically sourced from the DOT, occasionally input by Waze users), event, hazard, and unspecified.

- Weather hazard is a “catch-all” category with on-road subtypes (car stopped, construction, ice, lane closed, object, pothole, roadkill, or traffic light fault), shoulder subtypes (car stopped, missing sign, animal, or unspecified), and weather subtypes (flood, fog, hail, heavy snow, and many others). These data are generally entered by Waze users. The construction and road closed subtypes are also sometimes based on data shared by Waze for Cities partners.
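A minimal sketch of screening a Waze for Cities alert feed for crash reports follows. It assumes alerts arrive as JSON objects with type, subtype, location (x as longitude, y as latitude), pubMillis (epoch milliseconds), reliability, and confidence fields; those names and the threshold values are assumptions for illustration, and agencies should confirm them against the partner feed documentation and tune thresholds to their own verification policy.

```python
import json
from datetime import datetime, timezone

MIN_RELIABILITY = 5  # illustrative threshold, not a Waze recommendation
MIN_CONFIDENCE = 1   # illustrative threshold


def crash_alerts(raw: str):
    """Yield simplified crash reports that pass the illustrative thresholds."""
    for alert in json.loads(raw).get("alerts", []):
        if alert.get("type") != "ACCIDENT":
            continue
        if (alert.get("reliability", 0) < MIN_RELIABILITY
                or alert.get("confidence", 0) < MIN_CONFIDENCE):
            continue
        yield {
            "subtype": alert.get("subtype") or "unspecified",
            "lat": alert["location"]["y"],
            "lon": alert["location"]["x"],
            "reported": datetime.fromtimestamp(alert["pubMillis"] / 1000, tz=timezone.utc),
        }


# Hypothetical feed excerpt with one crash, one jam, and one low-reliability crash.
feed = json.dumps({"alerts": [
    {"type": "ACCIDENT", "subtype": "ACCIDENT_MAJOR", "reliability": 8, "confidence": 3,
     "location": {"x": -76.62, "y": 39.29}, "pubMillis": 1600000000000},
    {"type": "JAM", "subtype": "JAM_HEAVY_TRAFFIC", "reliability": 9, "confidence": 5,
     "location": {"x": -76.60, "y": 39.30}, "pubMillis": 1600000060000},
    {"type": "ACCIDENT", "subtype": "", "reliability": 4, "confidence": 0,
     "location": {"x": -76.70, "y": 39.40}, "pubMillis": 1600000120000},
]})
reports = list(crash_alerts(feed))
print(reports)
```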

| Type of Event | % DOT Matched to Waze | Average Time that a Waze Event was Reported Before a DOT Event |
|---|---|---|
| Freeways/Ramps Crashes | 40% | 3 minutes |
| Primary/Secondary Crashes | 12% | 3 minutes |
| Freeways/Ramps Disabled Vehicles | 37% | 14 minutes |
| Primary/Secondary Disabled Vehicles | 4% | 16 minutes |

Figure 10. Waze Crash Matching for Three State Transportation Agencies. Source: University of Maryland Center for Advanced Transportation Technology.

Validation is one challenge with Waze alert data. For example, travelers report events based on their perception as they approach them, so a report of a minor crash may actually be a stalled vehicle, or vice versa. The Virginia Transportation Research Council (VTRC) compared Waze crash data along a 3-mile urban freeway with CCTV data. Based on the VTRC analyses, Virginia DOT summarized whether Waze-reported crashes could be confirmed using CCTV data, noting that Waze first reported the incident correctly in 38 percent of instances. A 2018 study by the University of Maryland Center for Advanced Transportation Technology compared data for three states to estimate the percentage of transportation agency events matched to Waze reports and the average time by which Waze reports preceded the agencies’ awareness of roadway crashes and disabled vehicles, as illustrated in Figure 10.
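Comparisons like the one summarized in Figure 10 rest on matching each agency event to a nearby, near-simultaneous Waze report. The sketch below shows one simple approach, not the study's actual method: a Waze report matches a DOT event if it lies within a hypothetical 0.5-mile radius and was published no more than a hypothetical 30 minutes before or after the DOT event. It then returns the share of DOT events matched and the average time by which the earliest matching Waze report preceded the DOT event.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt

RADIUS_MI = 0.5                      # hypothetical spatial window
TIME_WINDOW = timedelta(minutes=30)  # hypothetical temporal window


@dataclass
class Event:
    lat: float
    lon: float
    time: datetime


def miles_between(a: Event, b: Event) -> float:
    """Great-circle (haversine) distance in miles."""
    dlat, dlon = radians(b.lat - a.lat), radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * 3958.8 * asin(sqrt(h))


def match_summary(dot_events, waze_reports):
    """Share of DOT events with a Waze match, and mean Waze lead time in minutes."""
    leads = []
    for d in dot_events:
        candidates = [w for w in waze_reports
                      if abs(w.time - d.time) <= TIME_WINDOW
                      and miles_between(d, w) <= RADIUS_MI]
        if candidates:
            earliest = min(c.time for c in candidates)
            leads.append((d.time - earliest).total_seconds() / 60)  # positive: Waze first
    share = len(leads) / len(dot_events) if dot_events else 0.0
    mean_lead = sum(leads) / len(leads) if leads else None
    return share, mean_lead


t = datetime(2018, 5, 1, 8, 0)
dot = [Event(39.000, -77.000, t), Event(39.100, -77.100, t + timedelta(hours=2))]
waze = [Event(39.001, -77.001, t - timedelta(minutes=4)),
        Event(39.300, -77.300, t + timedelta(hours=2))]
share, mean_lead = match_summary(dot, waze)
print(share, mean_lead)
```

Wider windows raise the match rate but also the risk of pairing unrelated events, so the thresholds strongly shape results like those in Figure 10.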

That said, state and local agencies find tremendous value in Waze data for situational awareness, quicker incident detection, and the ability to detect many more incidents than their traditional freeway-centric sensor infrastructure can. Agencies vary in how they share Waze data: some post all crash data without verification, while others post data only after verification.

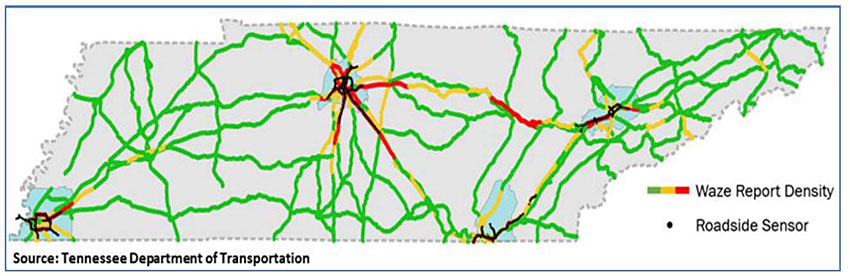

The Iowa DOT observed that information from Waze first alerted TMC operators of an incident 23 percent of the time while traditional methods (CCTV, safety service patrol, or 911 calls) first alerted TMC operators of an incident 77 percent of the time. Tennessee DOT uses Waze speed, incident, and jams data to enhance and expand queue detection techniques that previously used roadway detector equipment. Using Waze data, the agency detected crashes, stopped vehicles, and queuing more quickly over greater geography, as shown in Figure 11.

Waze for Cities partners can also specify road segments using the traffic viewer tool and pull travel time data from this tool at two-minute intervals. These data are based on passively collected GPS data from Waze users and other data procured by Waze. Lake County, Illinois, and Rhode Island DOT are two agencies that use the free Waze travel time data rather than vendor probe data. Waze for Cities partners can also designate certain Waze users as “municipal users” and track their fleet-generated data using Waze’s Traffic Viewer tool.

Figure 11. Tennessee DOT Waze Report Density versus ITS Roadside Sensor Coverage.

Waze also sources road closure data from its Waze for Cities partners, and the process for sharing this information is evolving. A unique relationship growing among Waze for Cities partners is with their region’s Waze Map Editors, volunteers whom Waze engages by geographic area to update its maps. A stronger relationship with these volunteer map editors enables agencies to update Waze road networks, and consequently Waze routing, more quickly. A few agencies (e.g., Maryland DOT and Pennsylvania Turnpike) have been fortunate to find that one of their employees is a Waze map editor, which streamlines network responsiveness to road closures.

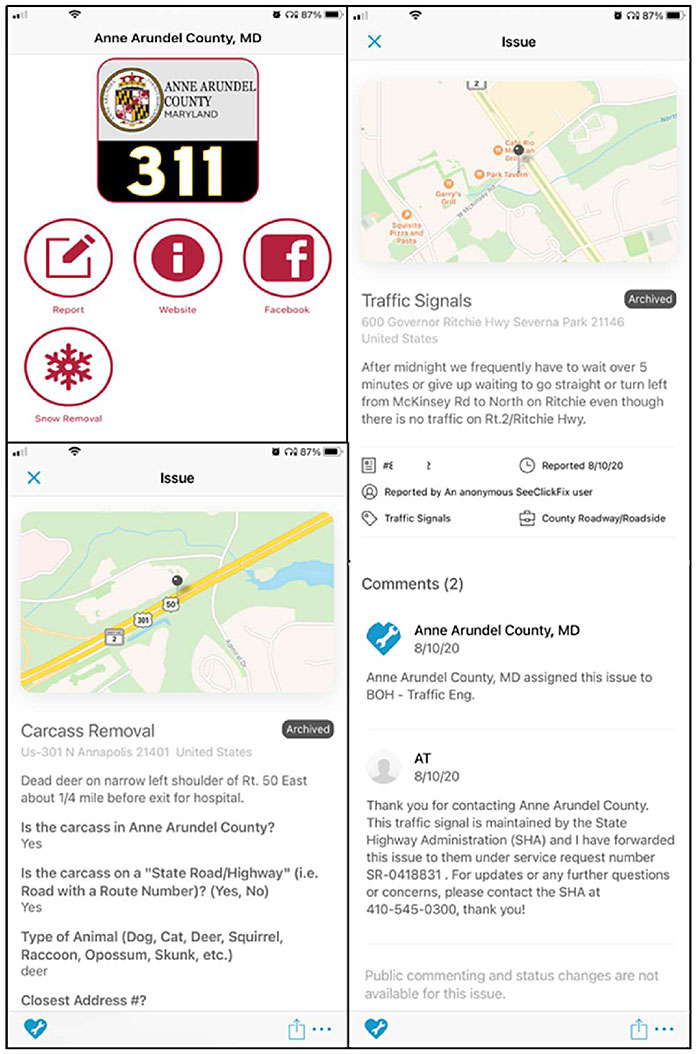

311, 511, and Other Apps can collect data from travelers to provide traffic operators with more timely information on the state of the road system and expedite transportation infrastructure maintenance. In 1997, the Federal Communications Commission designated 311 as the phone number for non-emergency local government services. Since then, 311 has been integrated with web and mobile platforms using customer relationship management (CRM) software that enables citizens to report and geotag signal outages, missing signs, roadway debris, potholes, and other transportation as well as non-transportation issues.

Some cities, counties, and states develop their own 311 apps, but most rely on vendors for 311 app development. Hundreds of agencies use vendor platforms such as SeeClickFix or PublicStuff, customized to their agency. Figure 12 illustrates the Anne Arundel County, Maryland, 311 app, which uses the SeeClickFix platform branded for the County, with two posted reports related to carcass removal and signal timing. These apps more broadly field citizen reports on housing, trash collection, zoning ordinance violations, and other issues to be addressed by the governmental agency.

Figure 12. Anne Arundel County, Maryland 311 App. Source: Anne Arundel County, Maryland Mobile App powered by SeeClickFix.

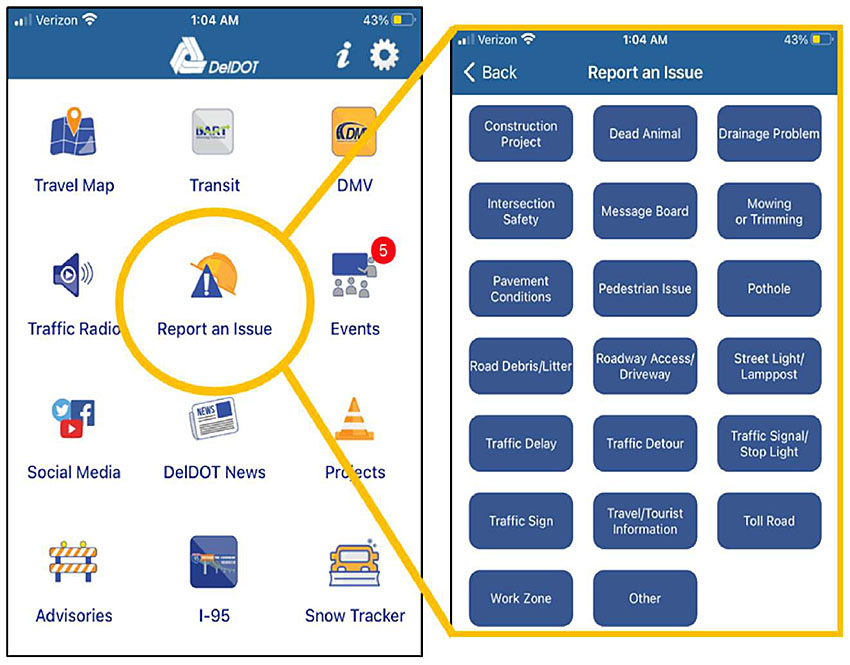

Traditionally, 511 systems offered phone and web-based travel information. Many states and cities expanded their 511 to a mobile platform between 2010 and 2015 (e.g., New York State DOT in 2011, Colorado DOT in 2012). A few agencies now enable 511 app users to report incident information on the app platform. The data is typically semi-structured or unstructured and frequently not integrated with an agency’s ATMS. The user reports are channeled to the agency’s CRM system. For example, Delaware DOT’s 511 app allows users to report over 20 road-related issues, as illustrated in Figure 13. The app enables the user to define the location, share a photo, and provide detailed text on the incident situation. The app also allows the user to provide a contact phone or email for a follow-up response. The app requests that more immediate issues be reported by phone to the TMC and provides the phone number.

Like the Delaware DOT 511 app, the Virginia DOT 511 app provides a link to a web-based issue-reporting CRM platform (vdot.virginia.gov/travel/511.asp) with image and geotag capability, and a phone option for reporting more immediate transportation-related issues.

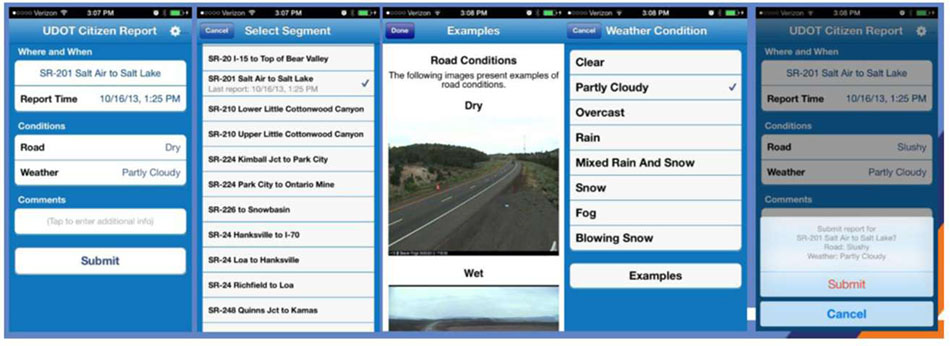

Some state and local agencies have developed apps that focus on a specific need. Utah DOT’s Citizen Reporting Program enlists and trains volunteers to use an app to consistently report weather and pavement condition-related issues along mountainous and rural terrain. The training module ensures consistency between users in interpreting various pavement and weather conditions. The training and reporting interfaces are illustrated in Figure 14. Incoming data from the app are integrated with road weather information systems, meteorological, and other data to send out information to travelers through the DOT’s 511 site and Twitter feed.

Figure 13. Delaware DOT 511 App Supports User Reporting of Issues. Source: Delaware DOT.

Figure 14. Screenshots of the Utah DOT Citizen Reporter App. Source: Utah DOT.

2.3.3 Social Media Data

Most state and local agencies use Twitter, Facebook, or other platforms to share information with travelers. Agencies differ in their use of social media; some only broadcast information, others engage in two-way communications by monitoring and responding to social media messages. Agencies’ social media broadcasts typically include videos, photos, links, and text information. The information broadcasted typically reflects public safety announcements (e.g., work zone awareness, seat belt use, and Move Over campaigns), road impacting alerts (e.g., blizzard warning, crash, or road closures), new or improved initiatives or programs, or calls for public input (e.g., long-range planning, public engagement, or reporting issues).

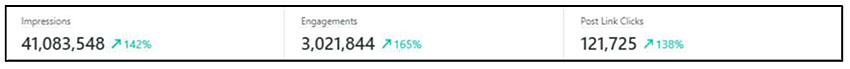

Agencies typically have dedicated staff within the communications office to broadcast content. They may use public relations software for media monitoring and business intelligence (e.g., Meltwater, Cision, Hootsuite, or Sprinklr), for instance to assess sentiment toward a new agency initiative. Iowa DOT, for example, uses Meltwater to review audience demographics (age, gender) and engagement statistics for Twitter (e.g., likes, retweets, post link clicks) and Facebook (e.g., impressions, engagement, and link clicks). From May 2019 to May 2020, its Facebook page outreach improved across three metrics, as illustrated in Figure 15.

Figure 15. Iowa DOT Facebook Performance for Nine Pages with Operations Content. Source: Iowa DOT, 2020.

Some communications offices also engage with the public in creative ways. For example, Texas DOT held a digital sign contest asking their social media followers to submit a creative safe-driving message and vote among ten finalist messages, with winners’ messages to be posted on state DMS (Figure 16).

Specific to operations, many agencies use a Twitter handle with “511,” linked to traveler information. For example, 511SF Bay, the San Francisco Bay area traffic Twitter feed, uses this platform to share broader traffic, transit, rideshare, bicycling, and parking information. State DOTs, such as Ohio and Iowa, often provide district or region-specific 511 Twitter and Facebook feeds. Individuals can subscribe to the feed that reflects their region of travel.

Figure 16. Texas DOT Tweet (Dec 7, 2020).

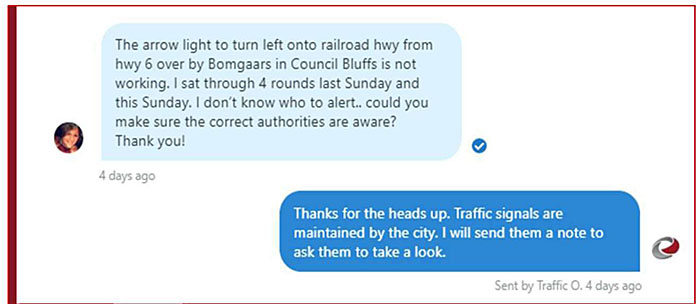

Two-way, operations-focused communication is led by the central communications office, by the TMC, or jointly. When Twitter or, less frequently, Facebook comments communicate an issue or incident, the transportation agency can make operational decisions based on this information. Iowa DOT, for example, has a policy to respond to every message within 15 minutes and to forward messages to the appropriate district or local authority. The monitoring is generally a manual process limited to messages directed to the agency’s social media feeds. The Iowa DOT maintains and actively monitors 511 Twitter and Facebook presences at both the state and regional levels. Figure 17 illustrates an example of a Twitter exchange related to a signal malfunction.

Figure 17. Iowa DOT Responds to 100% of Twitter Comments within 15 Minutes. Source: Iowa DOT, 2020.

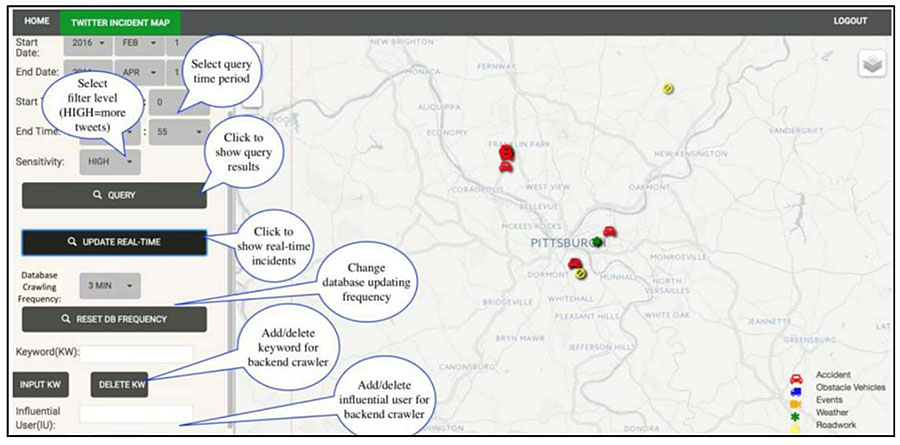

While some efforts have explored the use of advanced analytics to mine social media data for traffic incident detection, the monitoring function as of 2020 remains a manual process. An example of advanced analytics capability was developed for the Pennsylvania DOT by Qian. This prototype, shown in Figure 18, extracted real-time tweets from Twitter using the representational state transfer (REST) API. The capability filters tweets to pull incident information and enables visualizing the tweets on a map. The interface uses the Twitter text message, timestamp data, and tweet location (latitude, longitude) if the user has allowed Twitter to share their location.
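The filtering step in such a prototype can be approximated with keyword matching. The sketch below is not Qian's implementation; it assumes tweets have already been retrieved as dictionaries shaped like Twitter's classic status objects (text, created_at, and an optional GeoJSON coordinates point ordered longitude, latitude), and the keyword list is a hypothetical example that would need local tuning.

```python
import re

# Hypothetical incident vocabulary; a real deployment would tune and extend it.
INCIDENT_TERMS = re.compile(
    r"\b(crash|accident|wreck|collision|disabled vehicle|lanes? (?:blocked|closed))\b",
    re.IGNORECASE,
)


def incident_tweets(tweets):
    """Keep tweets mentioning an incident term; attach lat/lon when shared."""
    hits = []
    for tweet in tweets:
        if not INCIDENT_TERMS.search(tweet.get("text", "")):
            continue
        point = tweet.get("coordinates")  # GeoJSON Point, or None if location not shared
        lat, lon = (point["coordinates"][1], point["coordinates"][0]) if point else (None, None)
        hits.append({"text": tweet["text"], "created_at": tweet["created_at"],
                     "lat": lat, "lon": lon})
    return hits


sample = [
    {"text": "Crash on I-76 WB near exit 340, two lanes blocked",
     "created_at": "Mon Mar 07 08:15:00 +0000 2016",
     "coordinates": {"type": "Point", "coordinates": [-75.37, 40.08]}},
    {"text": "Great weather for a drive today",
     "created_at": "Mon Mar 07 08:16:00 +0000 2016", "coordinates": None},
    {"text": "Disabled vehicle on the shoulder, use caution",
     "created_at": "Mon Mar 07 08:20:00 +0000 2016", "coordinates": None},
]
hits = incident_tweets(sample)
print(hits)
```

Only tweets with shared coordinates can be mapped directly; the rest would need place names parsed from the text.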

Figure 18. Control Panel for Twitter Real-Time and Archived Visualization. Source: Qian, 2016.

2.4 Transportation Adjacent Data

Often data generated by public and private entities outside transportation hold significant value for transportation operations. Examples of transportation-adjacent data include weather data from the National Weather Service, public safety computer-aided dispatch (CAD) data from law enforcement or fire agencies, or law enforcement crash report data from a Department of Motor Vehicles (DMV). Data from planned events such as concerts, professional sports, or festivals also hold significant value for transportation operations. In this section, crash reports, CAD, and weather data types are discussed in greater detail.

2.4.1 State Traffic Records Data

Each state has its own laws and process for how, when, and if a vehicle crash is to be documented through a police report and aggregated into state traffic records. The National Highway Traffic Safety Administration (NHTSA) states that every state should “maintain a traffic records system that supports the data-driven, science-based decision making.” The traffic records system includes the collection, management, and analysis of traffic safety data. These data are useful in planning for traffic incident management and traffic safety. State traffic records data comprise six core data systems: crash, vehicle, driver, roadway, citation and adjudication, and injury surveillance. NCHRP Report 904 provides a summary of each data system element and guidelines applicable to each data system (e.g., Model Minimum Uniform Crash Criteria (MMUCC) for crash data or Model Inventory of Roadway Elements (MIRE) for roadway data). In most states, these data combine electronic and paper records; paper records often yield less precise information and can delay data upload by months compared with electronic records. Some data are unavailable because they contain personally identifiable information (PII), and data fields and definitions may vary from one state to another. NCHRP Report 904 classifies the level of data readiness for the six data systems across 10 categories.

2.4.2 Computer-Aided Dispatch (CAD) Data

Public safety agencies (law enforcement, fire and rescue, and EMS) use CAD systems to catalog and coordinate activities. Because most road incidents are first detected through 9-1-1 calls, CAD is an invaluable source of real-time incident data for TMC operators. CAD data support incident detection, notification, and response. Data fields include personnel log-on and log-off times, timestamped incident detection, field personnel assignment, dispatcher actions, and field personnel actions, including free-form text. Because CAD data are recorded in an event database, in which each row is associated with a single action, processing these data requires extracting and comparing multiple rows. Specific data to support TMC operations include incident detection and verification times, responder type and arrival times, incident type and severity, lane blockage and reopening times, secondary crash occurrence, and responder scene departure times. Following are considerations for accessing CAD data:

- Agencies are sometimes challenged in ensuring that the right types of incidents (e.g., roadway crashes and not domestic violence) reach the TMC, and that PII is not transmitted to the TMC operator. For instance, the free-form fields could contain potentially sensitive information. State DOTs must adhere to the Criminal Justice Information Services (CJIS) and the Health Insurance Portability and Accountability Act (HIPAA) requirements for handling sensitive CAD data.

- CAD systems vary by vendor and have proprietary formats or other issues preventing the real-time export of data. Systems may require significant data translation efforts. Consequently, state operations may have gaps in accessing CAD data in some regions.

- There is a cost associated with filtering and sharing CAD data. The financial responsibility/uncertainty about maintaining the data feed may prove a challenge.

- The absence of an interagency agreement, data sharing policies, or data security processes may prevent the CAD provider agency from sharing this data.
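Because each CAD row records a single action, incident-level measures require grouping rows by incident and comparing their timestamps. The sketch below assumes a hypothetical row layout (incident ID, timestamp, action code, nature code) and hypothetical code values; it keeps only roadway-related incidents and never reads the free-form notes field, reflecting the screening concerns listed above. Actual CAD exports differ by vendor.

```python
from collections import defaultdict
from datetime import datetime

ROADWAY_NATURES = {"MVC", "DISABLED_VEH", "ROAD_HAZARD"}  # hypothetical nature codes


def incident_times(rows):
    """Group CAD action rows by incident and derive key response intervals.

    Each row is a hypothetical dict with 'incident_id', 'time', 'action', and
    'nature'; free-text narrative fields are deliberately not read.
    """
    by_incident = defaultdict(dict)
    for row in sorted(rows, key=lambda r: r["time"]):
        if row["nature"] not in ROADWAY_NATURES:
            continue  # screen out non-roadway calls before they reach the TMC
        # keep the first occurrence of each action (e.g., first unit on scene)
        by_incident[row["incident_id"]].setdefault(row["action"], row["time"])
    results = {}
    for inc, acts in by_incident.items():
        created, arrived, cleared = acts.get("CREATED"), acts.get("ARRIVED"), acts.get("CLEARED")
        results[inc] = {
            "to_arrival_min": (arrived - created).total_seconds() / 60 if created and arrived else None,
            "on_scene_min": (cleared - arrived).total_seconds() / 60 if arrived and cleared else None,
        }
    return results


rows = [
    {"incident_id": "24-101", "time": datetime(2020, 1, 9, 7, 2), "action": "CREATED",
     "nature": "MVC", "notes": "free text, not read"},
    {"incident_id": "24-101", "time": datetime(2020, 1, 9, 7, 11), "action": "ARRIVED", "nature": "MVC"},
    {"incident_id": "24-101", "time": datetime(2020, 1, 9, 7, 14), "action": "ARRIVED", "nature": "MVC"},
    {"incident_id": "24-101", "time": datetime(2020, 1, 9, 7, 49), "action": "CLEARED", "nature": "MVC"},
    {"incident_id": "24-102", "time": datetime(2020, 1, 9, 7, 5), "action": "CREATED", "nature": "DOMESTIC"},
]
summary = incident_times(rows)
print(summary)
```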

The FHWA Every Day Counts Round Four initiative, Using Data to Improve TIM, identified nine state agencies that fully integrated CAD with their ATMS systems (Washington, Oregon, Minnesota, Wyoming, Wisconsin, Oklahoma, Florida, West Virginia, and Delaware). Full integration means automated processes bring select data elements from relevant CAD events (transportation-related events) into the state’s TMC software and potentially update events as the incident proceeds. The software will display incident details and map-based alerts to assist operator response. Many other states have lower integration levels where CAD data may be visible through a dedicated computer screen or window but requires manual entry or copy/paste into the TMC software. In nearly a third of the states, the public safety dispatch centers notify TMC operators of incidents through a call or email, often only when assistance is needed.

2.4.3 Weather Data

Beyond the transportation agency RWIS, managers obtain road weather information from many sources. The National Oceanic and Atmospheric Administration (NOAA) provides raw weather data free of charge, but they come with expertise and cost requirements to process, store, and make them usable. The National Weather Service (NWS), a part of NOAA, provides weather, water, and climate data in addition to forecasts and warnings. The Meteorological Assimilation Data Ingest System (MADIS) integrates NOAA and non-NOAA data (including RWIS and Clarus weather telematics) and shares them in real-time. The Federal Aviation Administration, the US Geological Survey, the Department of Agriculture, the Forest Service, and the Environmental Protection Agency also generate weather data useful for specific regions. Private vendors provide high-resolution, route-specific road weather information for transportation managers and operators. Weather data includes the following:

- Atmospheric fields such as air temperature and humidity, visibility, wind speed and direction, precipitation type and rate, cloud cover, tornado or waterspout occurrence, lightning, storm cell location and track, and air quality.

- Water level data include stream, river, and lake levels near roads, and tide levels (e.g., hurricane storm surge).

- Pavement fields include pavement temperature, pavement freezing point, pavement condition (e.g., wet, icy, flooded), pavement chemical concentration, and subsurface conditions (e.g., soil temperature).

These data inform proactive and real-time decisions. For example, Missouri DOT planned road closures based on NOAA/NWS river gauge and hydrograph predictions.
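A closure decision of this kind can be framed as comparing a forecast hydrograph with the river stage at which a road segment floods. The sketch below uses hypothetical forecast values, hypothetical per-road flood stages, and a hypothetical six-hour lead margin, and returns a suggested closure time ahead of the first forecast hour at which each road would be overtopped. It is illustrative only and does not reflect Missouri DOT's actual procedure.

```python
from datetime import datetime, timedelta
from typing import Optional


def first_exceedance(forecast, flood_stage_ft: float) -> Optional[datetime]:
    """Return the first forecast time at which stage meets or exceeds flood_stage_ft."""
    for valid_time, stage_ft in sorted(forecast):
        if stage_ft >= flood_stage_ft:
            return valid_time
    return None


def planned_closures(forecast, road_flood_stages: dict, lead=timedelta(hours=6)):
    """Map each road to a suggested closure time `lead` ahead of inundation (None if not forecast)."""
    plan = {}
    for road, stage in road_flood_stages.items():
        hit = first_exceedance(forecast, stage)
        plan[road] = hit - lead if hit else None
    return plan


# Hypothetical 6-hourly stage forecast (feet) and road flood stages.
t0 = datetime(2021, 4, 1, 0, 0)
forecast = [(t0 + timedelta(hours=6 * i), s) for i, s in enumerate([24.1, 26.8, 29.5, 31.2, 30.4])]
roads = {"Route A": 28.0, "Route B": 33.0}
plan = planned_closures(forecast, roads)
print(plan)
```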

2.5 Emerging Big Data and Sensor Technologies

The availability of low-cost communications and numerous sensors offers new opportunities to collect, analyze, and use data, most notably through connected technologies (vehicles, travelers, and infrastructure), UAS, and other emerging data sources. These technologies, considered emerging as of 2020, differ in terms of volume, variety, velocity, processing, security, and use. Agencies are developing the capacity to access and make use of these data, which hold tremendous potential. This section highlights the types of data generated from emerging data sources.

2.5.1 Connected Vehicle, Traveler, and Infrastructure Data

Connected vehicles use communication technologies to share data with other vehicles, road infrastructure, and cloud infrastructure without active driver or passenger input. Automated vehicles, those in which some aspect of control (steering, braking, etc.) is automated, are equipped with dozens of sensors (side radar, sonar, GPS, Light Detection and Ranging (LIDAR), camera, engine diagnostics) and microprocessors to store and transmit data. A connected traveler is one who uses a mobile device that generates and transmits status data, including the traveler’s location, trip characteristics (e.g., speed), mode and status (e.g., riding in a car, riding on transit, walking, biking), departure time, and destination choice.

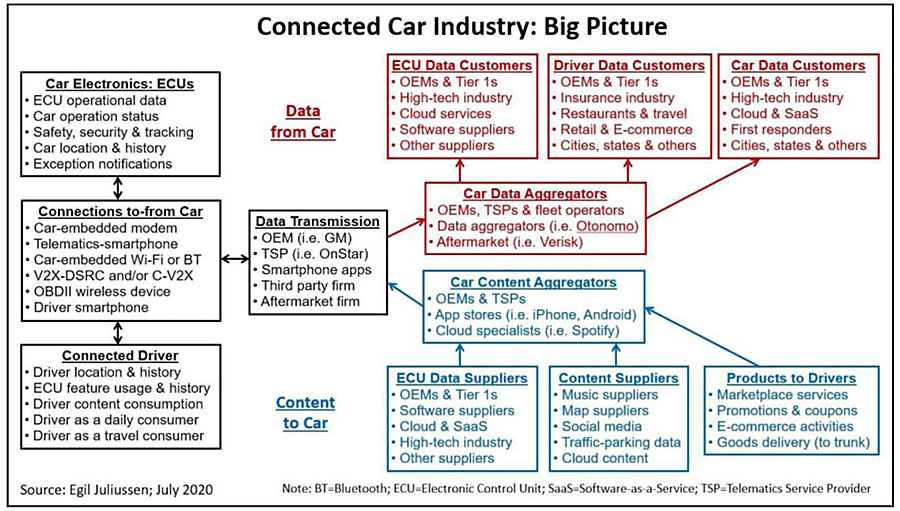

Data from connected and automated vehicles are routinely used in the automotive industry for dealer/maintenance services, infotainment, and location-based services or advertisements. The connected vehicle ecosystem, illustrated in Figure 19, highlights the various data generated from the vehicle and specific data customers.

The USDOT ITS Joint Program Office initiated connected vehicle pilots in 2015, with concept development and testing through 2020 in Wyoming, New York City, and Tampa. These pilots spurred technology development, measured deployment benefits, and addressed technical, institutional, and financial issues. ATSPM is an example of connected infrastructure.

In 2015, Gartner estimated approximately 250 million connected cars globally by 2020, while a 2017 Strategy& PwC report estimated 470 million connected vehicles by 2025. Regardless of the projection, multiple vendors process connected vehicle data and offer services to support transportation decision making. For example, Otonomo's automotive data service platform offers status attributes (door, battery, fuel), traffic data, parking data, and hazard data. Wejo touts 18 million active vehicles, with 52 million accessible vehicles generating 4.8 trillion data points. INRIX and HERE both access and use connected vehicle data to improve the quality of their vehicle probe traffic data. The volume of data generated by connected vehicles can quickly exceed terabytes per day; thus, for transportation operations and planning, access to connected vehicle data should focus on specific data types and uses.
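As a minimal sketch of what focusing on specific data types can look like, the Python below filters a stream of connected vehicle event records down to one event type inside one study corridor. The `CVEvent` record, its field names, the event type strings, and the coordinates are hypothetical and do not reflect Otonomo's, Wejo's, or any other vendor's actual schema.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple

# Hypothetical bounding box: (min_lat, min_lon, max_lat, max_lon).
BBox = Tuple[float, float, float, float]


@dataclass(frozen=True)
class CVEvent:
    """Hypothetical connected vehicle event record (not any vendor's schema)."""
    event_type: str   # e.g., "hard_brake", "wipers_on", "door_open"
    lat: float
    lon: float
    speed_mph: float


def select_events(events: Iterable[CVEvent], wanted: Set[str], bbox: BBox) -> List[CVEvent]:
    """Keep only the event types and the geographic area a use case needs."""
    min_lat, min_lon, max_lat, max_lon = bbox
    return [
        e for e in events
        if e.event_type in wanted
        and min_lat <= e.lat <= max_lat
        and min_lon <= e.lon <= max_lon
    ]


feed = [
    CVEvent("hard_brake", 39.10, -84.50, 42.0),
    CVEvent("door_open", 39.10, -84.50, 0.0),    # vehicle status, not needed here
    CVEvent("hard_brake", 41.50, -81.70, 55.0),  # outside the study corridor
]
corridor = (39.0, -84.6, 39.2, -84.4)
print(select_events(feed, {"hard_brake"}, corridor))
```

Applying the filter as early as possible in the pipeline (ideally at the data provider) is what keeps storage and processing proportional to the use case rather than to the full terabyte-scale feed.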

Figure 19. Connected Car Industry Big Picture. Source: Juliussen, 2020.

Many vendors are emerging with technologies that leverage mobile devices, cloud services, and sensors. For example, iCone offers a suite of products that communicate real-time work zone data through a user interface. The Virginia Tech Transportation Institute is testing real-time threat detection and warning algorithms embedded in wearable devices, such as work zone vests, in conjunction with connected and automated vehicles. HAAS Alert and Make Way now offer responder-to-vehicle and responder-to-responder alert technologies that use the Waze platform to automate traveler notifications of approaching responders' activity. Each vendor provides performance data associated with alert activations. The market for services that improve traffic operations will likely continue to grow and, in the process, bring forth newer, more granular data for refining operations.

2.5.2 Unmanned Aircraft Systems (UAS) Data

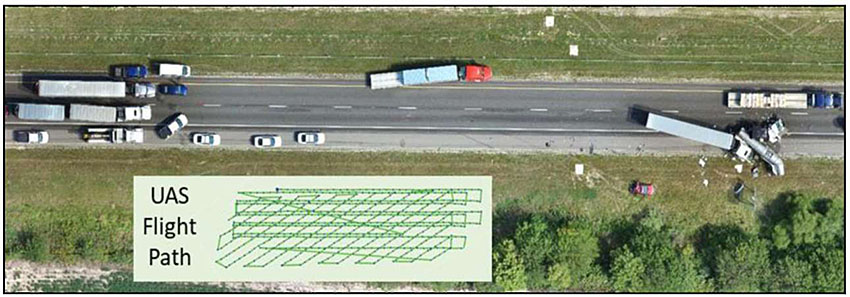

UAS, commonly called drones, can capture high-resolution video as well as other data, depending on the suite of sensors on board, to improve operations, construction, inspection, and safety. UAS can transmit data in real time during flight or store data (e.g., on a flash memory card) for transmission at the flight's conclusion. Multiple software vendors process these data for specific application areas. For example, Figure 20 illustrates a composite image based on 107 images collected over three UAS flight paths for a major roadway crash in Tippecanoe County, Indiana. The three flights required a total of 69 minutes to map the entire crash scene.

High-value use cases for UAS include asset inspection, law enforcement crash investigation, and situational awareness. The FHWA EDC-5 UAS and the FHWA EDC-6 Next Generation TIM programs provide details and use cases for high-value applications of this technology, such as monitoring of incidents, alternate routes, and queuing. UAS uses are expanding. For example, a 2019 report by the Massachusetts DOT explored the use of UAS to collect traffic data on roadways and estimated UAS and video processing to have a count accuracy of 93 percent and a speed data error rate of 6.6 percent.
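The report's exact accuracy definitions are not reproduced in this module, so the sketch below assumes two common ones: count accuracy as one minus the absolute percent difference from a manual ground-truth count, and speed error rate as the mean absolute percentage error (MAPE) across paired speed observations. The function names and sample values are illustrative only.

```python
from typing import Sequence


def count_accuracy(estimated: int, ground_truth: int) -> float:
    """Percent accuracy of a UAS/video count versus a manual ground-truth count."""
    if ground_truth <= 0:
        raise ValueError("ground truth count must be positive")
    return 100.0 * (1.0 - abs(estimated - ground_truth) / ground_truth)


def speed_error_rate(estimated: Sequence[float], observed: Sequence[float]) -> float:
    """Mean absolute percentage error (MAPE) of paired speed estimates, in percent."""
    if len(estimated) != len(observed) or not observed:
        raise ValueError("need equal-length, non-empty speed lists")
    errors = [abs(e - o) / o for e, o in zip(estimated, observed)]
    return 100.0 * sum(errors) / len(errors)


# Hypothetical values: 186 vehicles counted from video versus 200 counted manually.
print(count_accuracy(estimated=186, ground_truth=200))
print(speed_error_rate([52.0, 61.0], [50.0, 60.0]))
```

Whichever definitions an agency adopts, stating them alongside the reported figures is what makes accuracy results from different studies comparable.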

Figure 20. Tippecanoe UAS Mosaic Composed from Three Flights. Source: Tippecanoe Sheriff's Office.

State and local agencies have demonstrated the benefits of UAS for traffic incident management. For example, a Washington State Patrol pilot test in 2018 estimated an 80 percent reduction in road closure time based on 125 UAS deployments, saving $4.2 million in economic impact. The Tippecanoe County Sheriff’s Office in Indiana estimated a 60 percent reduction in scene clearance time for fatal and serious injury crashes using UAS compared to traditional crash scene investigation methods.

Agencies are still working toward cohesive strategies for the storage, security, privacy, and accessibility of these data. A 2018 UAS Peer Exchange noted that "the datasets being stored can vary dramatically in size. Some agencies found that locally storing data was sufficient for their purposes, while others found they needed to set up complex data transfer and storage architecture, employing sending data over 4G cellular service." Legal and regulatory considerations for the use of UAS are also evolving as Federal Aviation Administration (FAA) rules and state legislation emerge. In April 2020, the National Conference of State Legislatures published a listing of UAS laws.

2.5.3 Other Emerging Data

Transportation agencies can access a host of other emerging data to support operational decisions. In 2019, Georgia DOT completed a test that used acoustic sensing technology to transform a single fiber within a roadside fiber-optic cable into an array of distributed sensors that approximate travel speed, queues, congestion, journey time, vehicle count, and flow. A preliminary test along Interstate 20 in Atlanta, Georgia, indicated that the vendor's solution delivered "accurate and timely traffic flow information using an existing roadside fiber as a traffic sensor."

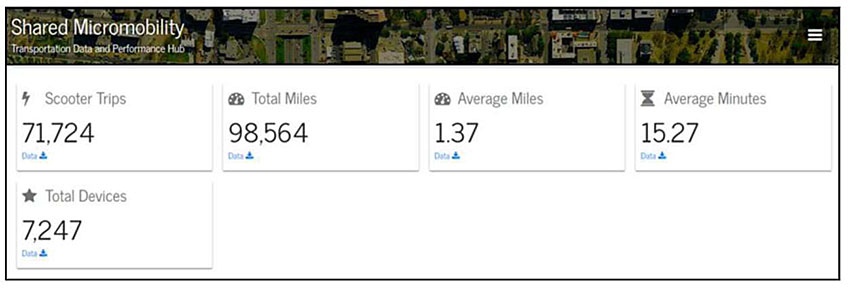

Some cities can access data on transportation network company (TNC) trips to support policy and investment decisions, particularly as TNCs affect travel patterns and transit use. These data are often truncated to protect personally identifiable information (PII) and are generally difficult to access, given competitive concerns among for-profit services such as Uber, Lyft, and Via. Similarly, micromobility data may be available to agencies to support operations and planning functions related to the equity, safety, usage, and environmental effects of shared scooters, bicycles, and other shared mobility solutions. The data can range from demographics, temporal and spatial usage, and trip lengths to durations and accessibility. Data are often rounded; for example, trip start and end times may be rounded to the nearest 15 minutes, or fares to the nearest $2.50. Other data examples may include injury-specific data, parking sufficiency, or state of repair. The City of Austin collects and publicly shares aggregate scooter and bicycle mobility data, as illustrated in Figure 21.
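Rounding of this kind is straightforward to reproduce when an agency wants to apply the same coarsening to its own shared datasets. The sketch below, with hypothetical function names, rounds a timestamp to the nearest 15 minutes and a fare to the nearest $2.50, using `Decimal` so money values avoid binary floating-point error. Ties round up in both functions, which is an assumption; a provider's actual rounding rules may differ.

```python
from datetime import datetime, timedelta
from decimal import Decimal, ROUND_HALF_UP


def round_time(ts: datetime, minutes: int = 15) -> datetime:
    """Round a timestamp to the nearest `minutes` interval (ties round up)."""
    step = timedelta(minutes=minutes)
    midnight = ts.replace(hour=0, minute=0, second=0, microsecond=0)
    elapsed = ts - midnight
    steps = (elapsed + step / 2) // step  # timedelta // timedelta gives an int
    return midnight + steps * step        # may roll over into the next day


def round_fare(fare: Decimal, increment: Decimal = Decimal("2.50")) -> Decimal:
    """Round a fare to the nearest `increment` (ties round up)."""
    units = (fare / increment).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return units * increment


print(round_time(datetime(2020, 3, 1, 8, 7, 29)))  # 2020-03-01 08:00:00
print(round_fare(Decimal("11.30")))                # 12.50
```

Anchoring the rounding to midnight keeps intervals aligned to the clock (:00, :15, :30, :45), so rounded times from different trips remain directly comparable.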

Figure 21. City of Austin Shared Mobility Dashboard. Source: https://data.austintexas.gov/stories/s/Mobility-Dashboard/gzb5-ykym/.


3. Techniques and Tools to Transform Data and Inform Decisions

The previous section outlined the broad sources and types of data available to traffic operations to support real-time and strategic transportation decisions. This section first introduces performance management and how it guides the selection and use of data and analytics.

Next, this section delves into the broad classes of analytics available to transform data into information for real-time and strategic decision making. Most transportation professionals are familiar with the concept of capability maturity models (CMM). The FHWA provides CMM frameworks for most aspects of operations programs through the lens of Transportation Systems Management & Operations (TSMO). In the same vein, there are many models for data and analytics capability maturity. One such example is the TDWI Analytics Maturity Model, which describes an agency's overall analytics maturity across five dimensions: organization, resources, data infrastructure, analytics, and governance.

This section closes with a discussion on the importance of understanding the nature and quality of data before using it to derive performance measures, and especially before using it to inform decisions.

3.1 Performance Management

Performance management is the process of ensuring that activities and outputs meet an organization's goals effectively and efficiently. NCHRP Report 660, Transportation Performance Management: Insights from Practitioners, notes that "performance management provides a framework that can help transportation agencies set realistic goals, focus on the most important challenges, and improve efficiency." Without a performance management framework, agencies can quickly become overwhelmed with data, complex analytics, and too many performance measures, some of which may even conflict with one another.

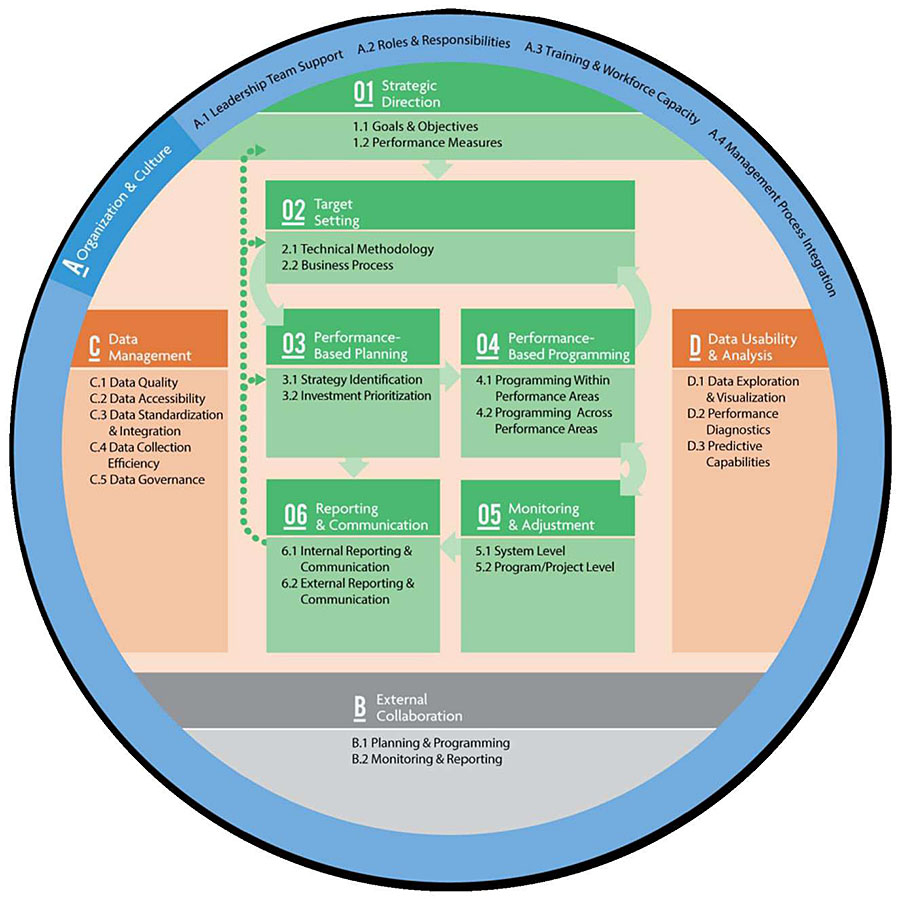

The Federal Highway Administration provides detailed guidance, case studies, and training in defining goals, objectives, and performance measures. Figure 22 illustrates the FHWA transportation performance management (TPM) framework, which consists of six numbered components and four lettered components. For more information, the TPM Guidebook offers a chapter on each of the ten components and the relationships among them. The TPM Self-Assessment and Resources Library help agencies, and programs within agencies, define performance goals and measures.

Figure 22. Federal Highway Administration Transportation Performance Management Framework (Federal Highway Administration n.d.)

The FHWA also requires that state DOTs and MPOs establish baselines and set targets for certain transportation performance measures related to pavements, assets, operations, freight, and congestion mitigation and air quality (CMAQ). These requirements stem from the 2012 Moving Ahead for Progress in the 21st Century Act (MAP-21), which established a National Highway Performance Program (NHPP) with seven federal-aid highway program goals related to safety, infrastructure, congestion, system reliability, freight movement, environment, and project delivery. The performance measures also reflect the 2015 Fixing America's Surface Transportation (FAST) Act, which shortened the time frame for state and MPO progress toward meeting NHPP performance targets and required agencies that failed to make significant progress toward their freight performance targets to include, in their next performance report, the actions they will take to achieve those targets. The FHWA published the third and final rule for the FHWA National Performance Management Measures in 2017, with key implementation timeline updates in 2018.

State DOTs and MPOs are required to establish baselines and targets for each prescribed performance measure. For example, one required performance measure, level of travel time reliability, is defined as the ratio of the 80th percentile travel time on a reporting segment to its normal travel time (the 50th percentile) and requires two-year targets for the Interstate system and four-year targets for non-Interstate roadways.
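The reliability ratio described above can be sketched in a few lines. This sketch assumes the travel times for one reporting segment and one time period are already assembled, and it computes percentiles by linear interpolation between ranked observations (an assumption). The federal rule and its supporting data processing specify further details, such as reporting time periods, data sources, and how segment ratios roll up into the reported measure, that this sketch does not attempt to reproduce.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Linear-interpolation percentile (the same convention as NumPy's default)."""
    s = sorted(values)
    k = (len(s) - 1) * p / 100.0
    lo = math.floor(k)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (k - lo)


def lottr(travel_times: Sequence[float]) -> float:
    """Level of travel time reliability: 80th percentile / 50th percentile."""
    if not travel_times:
        raise ValueError("no travel time observations")
    return percentile(travel_times, 80) / percentile(travel_times, 50)


# Hypothetical travel times (minutes) for one reporting segment and time period.
times = [10, 10, 11, 12, 12, 13, 14, 15, 18, 20]
print(round(lottr(times), 3))  # 1.248
```

A ratio of 1.0 would mean the "bad day" travel time equals the typical one; the further the ratio rises above 1.0, the more extra time a traveler must budget to arrive on time.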

Data from ITS infrastructure and related sources are central to national, state, and local performance measures, not only for real-time and strategic transportation systems management and operations (TSMO) but also for broader asset management, investment planning, and workforce planning. For example, traffic counts and the percentage of traffic by weight class often feed pavement management system tools that predict pavement state of repair and estimate lifecycle. Transportation planners use origin-destination and travel path data to calibrate and validate models of future demand and network performance. Forecasts of the severity of forthcoming weather events can inform staffing decisions.

Forward-thinking agencies map their mission to goals, their goals to objectives, and each objective to performance measures and targets. Ohio DOT, for example, defined 20 performance measures across 15 objectives and four goals (safety, reliability, efficiency, and coordination) for its TSMO program, as illustrated in Figure 23.

Ohio DOT uses performance measures such as travel time, volume, crash frequency, and incident clearance times to rank corridors for improvements, using its Traffic Operations Assessment Systems Tool (TOAST) to support investment decision making.
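One simple way to turn several measures into a corridor ranking is a weighted sum of min-max normalized values. The sketch below illustrates that general technique only; it is not TOAST's actual methodology, and the measure names, weights, and corridor values are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical weights (summing to 1.0); not Ohio DOT's actual weighting.
WEIGHTS = {"travel_time_index": 0.3, "aadt": 0.2, "crashes_per_mile": 0.3, "clearance_min": 0.2}


def rank_corridors(data: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Min-max normalize each measure across corridors, then rank by weighted sum.
    For every measure here, a higher raw value means a greater need for improvement."""
    scores = {name: 0.0 for name in data}
    for measure, weight in WEIGHTS.items():
        vals = [row[measure] for row in data.values()]
        lo, hi = min(vals), max(vals)
        span = hi - lo
        for name, row in data.items():
            norm = (row[measure] - lo) / span if span else 0.0  # 0..1 scale
            scores[name] += weight * norm
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


corridors = {
    "A": {"travel_time_index": 1.8, "aadt": 90000, "crashes_per_mile": 12, "clearance_min": 55},
    "B": {"travel_time_index": 1.2, "aadt": 60000, "crashes_per_mile": 5, "clearance_min": 30},
    "C": {"travel_time_index": 1.5, "aadt": 120000, "crashes_per_mile": 8, "clearance_min": 40},
}
for name, score in rank_corridors(corridors):
    print(name, round(score, 3))
```

Normalizing first keeps a measure with large raw numbers (such as traffic volume) from dominating one with small raw numbers (such as a travel time index); the weights then express the agency's policy priorities explicitly.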

| Level | Program Objectives | Performance Measures |
|---|---|---|
| Program Measures | Reduce secondary crashes caused by traffic incidents | Percentage of secondary crashes to primary crashes on monitored freeways |
| Program Measures | Reduce work zone related crashes | Frequency of work zone crashes |
| Program Measures | Reduce roadside "struck by" incidents | Frequency |
| Program Measures | Maximize free flow travel time on Ohio's freeway system | Percent of time motorists experience free flow travel time (TTRI) |
| Program Measures | Increase resilience of the transportation system to winter weather events | Percent of routes that recover speeds within 10 MPH of the expected speeds within 2 hours of a snow event ending |
| Program Measures | Reduce incident clearance | Duration |
| Program Measures | Reduce roadway clearance | Duration |
| Program Measures | Optimize signalized corridors | Percentage of corridors retimed per year |
| Program Measures | Reduce work zone traffic delays | Number of hours the operating speed is less than 35 MPH per monitored work zone |
| Program Measures | Provide consistent incident response and management across the state | Percentage of TIM-trained emergency responders in state (DOT/Public Works, Fire, Police, Towing, EMS) |
| Secondary Measures | Optimize signalized corridors | Percent arrival on green |
| Secondary Measures | Optimize travel time reliability on major freight corridors | Percent of time freight operators experience free flow travel time (TTRI) |
| Monitoring Measures | Maximize equipment and communications reliability | Percent asset uptime; Communications network uptime; Percent of assets beyond service life |
| Monitoring Measures | Respond to and clear heavy vehicle incidents as quickly as possible | Response < 45 minutes; Clearance < 90 minutes |
| Monitoring Measures | Expand TMC surveillance and management capabilities | Percent of congested corridors (based on TTRI and LOS analysis) with fixed ITS; Number of mobile data collection devices (AVL/GPS, cameras, weather sensors) |
| Monitoring Measures | Manage TMC staff workload | Number of incidents logged |
| Monitoring Measures | Provide timely, accurate, and comprehensive information to customers | Increase OHGO impact - average number of notifications opened per incident; OHGO usage - number of personalized routes created; Incident verification (incident occurrence to time public is notified) |
| Monitoring Measures | Hold after action review (AAR) meetings for 100% of incidents that exceed clearance goals | Percentage of meetings that occur within 30 days |
| Monitoring Measures | Monitor key transportation assets/events to prevent harmful acts | Number of assets/events monitored |
| Monitoring Measures | Promote TSMO tools to improve emergency management | Number of agencies with CCTV and ODOT data access |

Figure 23. Ohio DOT TSMO Performance Measures. Source: Ohio DOT, 2017.
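Two of the Ohio DOT measures, work zone hours below 35 MPH and snow-event speed recovery, are defined precisely enough to compute directly from archived speed data. The sketch below assumes speeds are aggregated in 15-minute intervals, that "within 10 MPH of the expected speeds" means no more than 10 MPH below the expected speed, and that the first observation begins when the snow event ends. The function names, route names, and sample speeds are hypothetical, and Ohio DOT's actual processing may differ.

```python
from typing import Dict, List, Sequence, Tuple

INTERVAL_MIN = 15  # assumed aggregation interval for archived speeds


def hours_below(speeds_mph: Sequence[float], threshold: float = 35.0) -> float:
    """Hours a monitored work zone operates below `threshold` mph."""
    slow_intervals = sum(1 for s in speeds_mph if s < threshold)
    return slow_intervals * INTERVAL_MIN / 60.0


def recovered(observed: Sequence[float], expected: Sequence[float],
              window_hr: float = 2.0, tolerance: float = 10.0) -> bool:
    """True if the route gets within `tolerance` mph of (no more than `tolerance`
    below) its expected speed within `window_hr` of the snow event ending."""
    n = int(window_hr * 60 // INTERVAL_MIN)  # number of intervals inside the window
    return any(obs >= exp - tolerance for obs, exp in zip(observed[:n], expected[:n]))


def percent_recovered(routes: Dict[str, Tuple[List[float], List[float]]]) -> float:
    """Percent of routes that recover; each value is (observed, expected) speeds."""
    hits = sum(recovered(obs, exp) for obs, exp in routes.values())
    return 100.0 * hits / len(routes)


work_zone = [55, 52, 30, 28, 33, 41, 50, 25]  # one monitored work zone, 15-min speeds
print(hours_below(work_zone))  # 1.0

routes = {
    "route_a": ([38, 45, 52, 58], [65] * 4),                      # recovers in the 4th interval
    "route_b": ([30, 32, 35, 40, 42, 44, 46, 48, 60], [60] * 9),  # recovers only after 2 hours
}
print(percent_recovered(routes))  # 50.0
```

Keeping the interval length, threshold, tolerance, and window as named parameters makes the measure definitions explicit and easy to adjust if the program's definitions change.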

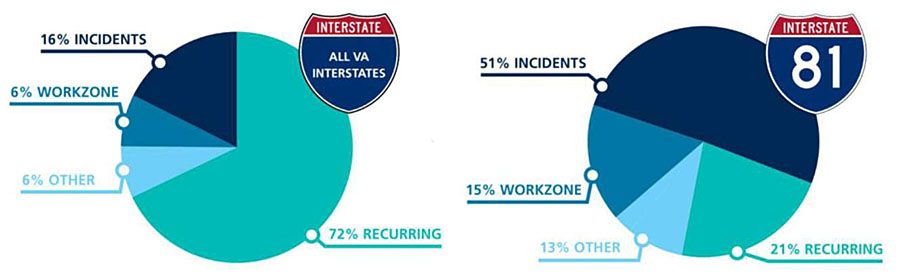

Virginia DOT developed the SmartScale process for scoring and selecting transportation projects based on six factors (safety, congestion mitigation, accessibility, environmental quality, economic development, and land use), many of which are measured using ITS and related data (e.g., person-hours of delay and travel time reliability). The metrics an agency or program chooses must promote the performance it wants, and usually it can achieve this only by combining several metrics into a balanced scorecard, as in the Ohio and Virginia examples.
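A balanced scorecard can be as simple as checking each metric against its target while respecting whether higher or lower values count as improvement. The sketch below is a hypothetical illustration: the metric names, targets, and values are invented for the example and are not Ohio DOT or Virginia DOT figures, and SmartScale's actual scoring method is not reproduced.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Metric:
    name: str
    actual: float
    target: float
    higher_is_better: bool

    def met(self) -> bool:
        """Compare against the target in the direction that counts as improvement."""
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


def scorecard(metrics: List[Metric]) -> str:
    """One status line per metric plus the share of targets met."""
    lines = [f"{m.name:<32}{'MET' if m.met() else 'NOT MET'}" for m in metrics]
    share = sum(m.met() for m in metrics) / len(metrics)
    lines.append(f"{'Share of targets met':<32}{share:.0%}")
    return "\n".join(lines)


# Hypothetical metrics and targets for illustration only.
metrics = [
    Metric("Person-hours of delay (000s)", 410, 400, higher_is_better=False),
    Metric("Travel time reliability ratio", 1.32, 1.40, higher_is_better=False),
    Metric("Crashes per 100M VMT", 0.95, 1.00, higher_is_better=False),
    Metric("Percent arrival on green", 62, 60, higher_is_better=True),
]
print(scorecard(metrics))
```

Reporting each metric's status alongside the overall share keeps a strong result on one metric from masking a missed target on another, which is the reason to use several metrics rather than one.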